After months of the containers running without a problem, they all stopped working as of last night. Usually a system reboot or a restart of LXD itself (snap version, running v4.15) fixes it.

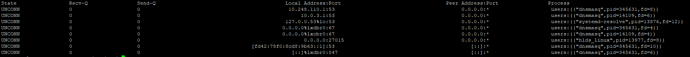

Even though they aren't working, I can "start" them just fine, but no IP address is assigned:

Example container info:

    architecture: x86_64
    config:
      image.architecture: x86_64
      image.description: Ubuntu 18.04 LTS minimal (20200506)
      image.os: ubuntu
      image.release: bionic
      volatile.base_image: 572979f0119c180392944f756f3aa6e402ae7c11ec3380fc2e465b2cc76e309d
      volatile.eth0.host_name: vethe7b3dc8d
      volatile.eth0.hwaddr: 00:16:3e:50:c3:f7
      volatile.idmap.base: "0"
      volatile.idmap.current: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
      volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
      volatile.last_state.idmap: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
      volatile.last_state.power: RUNNING
      volatile.uuid: b31837cd-d4b6-4024-b188-bd50eff94a6d
    devices:
      eth0:
        name: eth0
        network: lxdbr0
        type: nic
      root:
        path: /
        pool: znc
        type: disk
      znc:
        connect: tcp:127.0.0.1:xxx
        listen: tcp:0.0.0.0:xxx
        type: proxy

The lxdbr0 bridge being used is in managed mode:

    config:
      ipv4.address: 10.248.110.1/24
      ipv4.nat: "true"
      ipv6.address: fd42:78f0:8cd8:9b63::1/64
      ipv6.nat: "true"
      raw.dnsmasq: |
        auth-zone=lxd
        dns-loop-detect
    description: ""
    name: lxdbr0
    type: bridge
    used_by:
    - /1.0/instances/znc
    managed: true
    status: Created
    locations:
    - none

Any idea what might be wrong? Tomp suggested checking the log files, but I have no idea where they are located.

By the way, this isn't the first time the containers have just stopped working; it has happened a few times over the past few months. As I mentioned above, a restart usually fixed it. Not this time, though.
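
In case it helps anyone reproduce the symptom, here is a rough sketch of how the affected instances could be listed: it looks for instances that report as running but have no global IPv4 address. It assumes the `lxc` client is on the PATH and that `lxc list --format json` returns the usual `status` and `state.network` fields; the function name `instances_without_ipv4` is just something made up for this example, not part of LXD.

```python
import json
import subprocess


def instances_without_ipv4(instances):
    """Return names of running instances that have no global IPv4 address.

    `instances` is the parsed JSON list from `lxc list --format json`
    (field layout assumed, see the note above).
    """
    missing = []
    for inst in instances:
        if inst.get("status") != "Running":
            continue
        # `state` can be null for instances that are not running.
        networks = (inst.get("state") or {}).get("network") or {}
        has_ipv4 = any(
            addr.get("family") == "inet" and addr.get("scope") == "global"
            for nic_name, nic in networks.items()
            if nic_name != "lo"  # ignore the loopback interface
            for addr in (nic.get("addresses") or [])
        )
        if not has_ipv4:
            missing.append(inst["name"])
    return missing


if __name__ == "__main__":
    # Assumes the local LXD daemon is reachable by the `lxc` client.
    out = subprocess.run(
        ["lxc", "list", "--format", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    print(instances_without_ipv4(json.loads(out)))
```

On a healthy host this should print an empty list; in my situation I would expect it to print every container that is started.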