I notice you have raw.dnsmasq set. Just a hunch, but can you try unsetting that:

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

UNCONN 0 0 10.0.3.1:53 0.0.0.0:* users:(("dnsmasq",pid=14109,fd=6))

UNCONN 0 0 127.0.0.53%lo:53 0.0.0.0:* users:(("systemd-resolve",pid=13876,fd=12))

UNCONN 0 0 0.0.0.0%lxcbr0:67 0.0.0.0:* users:(("dnsmasq",pid=14109,fd=4))

UNCONN 0 0 0.0.0.0:27015 0.0.0.0:* users:(("hlds_linux",pid=13977,fd=8))

And you mean unsetting it with:

sudo nsenter --mount=/run/snapd/ns/lxd.mnt -- bash

LD_LIBRARY_PATH=/snap/lxd/current/lib/:/snap/lxd/current/lib/x86_64-linux-gnu/ /snap/lxd/current/bin/dnsmasq --help

I’m not familiar with this.

No, not the snap commands. The link I posted showed you how to do it, but it's:

lxc network unset lxdbr0 raw.dnsmasq

If I do that, only IPv6 addresses are assigned to the containers.

+--------------+---------+------+-----------------------------------------------+-----------+-----------+
| baker        | RUNNING |      | fd42:78f0:8cd8:9b63:216:3eff:fe93:526c (eth1) | CONTAINER | 0         |
|              |         |      | fd42:78f0:8cd8:9b63:216:3eff:fe69:389b (eth0) |           |           |
+--------------+---------+------+-----------------------------------------------+-----------+-----------+

Can you reload LXD now:

sudo systemctl reload snap.lxd.daemon

And then restart your containers.

Just tried, sorry. Still only IPv6:

Ips:

eth0: inet6 fd42:78f0:8cd8:9b63:216:3eff:fe69:389b vethfaa4b48f

eth0: inet6 fe80::216:3eff:fe69:389b vethfaa4b48f

eth1: inet6 fd42:78f0:8cd8:9b63:216:3eff:fe93:526c vethc3504a1d

eth1: inet6 fe80::216:3eff:fe93:526c vethc3504a1d

lo: inet 127.0.0.1

lo: inet6 ::1

Can you run dhclient inside your container, please?

Running it doesn’t seem to do anything. It just… “hangs”.

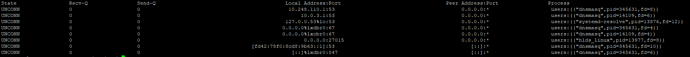

Can you show the output of sudo ss -ulpn on the LXD host please.

You have a DHCP service listening.
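To make that check quicker to repeat, here is a minimal sketch that filters `ss -ulpn` output down to the sockets bound to UDP port 67 (the DHCP server port). The function name `dhcp_listeners` is made up for illustration, and it assumes the column layout shown earlier in this thread, where the fourth field is the local address and port.

```shell
# Hypothetical helper: keep only UDP listeners on port 67 (DHCP server).
# Reads `ss -ulpn` output on stdin, so it also works on saved output.
dhcp_listeners() {
    # Field 4 is "Local Address:Port"; the last field names the process.
    awk '$4 ~ /:67$/ { print $4, $NF }'
}

# Usage on the LXD host (root is needed to see process names):
#   sudo ss -ulpn | dhcp_listeners
```

On the output quoted earlier in the thread, the only match is the dnsmasq bound to lxcbr0, so it is worth checking whether anything is listening on lxdbr0 as well.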

BTW what is lxcbr0 (as opposed to lxdbr0)?

Can you post the output of sudo iptables-save and sudo nft list ruleset so we can see whether a firewall could be blocking it?
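The full `iptables-save` dump can be long, so a rough sketch like the one below may help pick out the parts that matter here: the default policies of the INPUT and FORWARD chains in the filter table, and whether any Docker chains exist. The function name `firewall_summary` is hypothetical, and this only summarises the dump; it does not prove that a given rule is blocking DHCP.

```shell
# Hypothetical helper: summarise an `iptables-save` dump read from stdin.
firewall_summary() {
    awk '
        # Lines such as "*filter" mark the start of a table.
        /^\*/ { table = substr($0, 2) }
        # Chain declarations look like ":FORWARD DROP [0:0]".
        table == "filter" && /^:(INPUT|FORWARD) / {
            print substr($1, 2) " policy: " $2
        }
        # Docker creates chains such as DOCKER and DOCKER-USER.
        /^:DOCKER/ { docker = 1 }
        END { print "docker chains: " (docker ? "yes" : "no") }
    '
}

# Usage on the LXD host:
#   sudo iptables-save | firewall_summary
```

A DROP policy together with Docker chains is a hint to look more closely at the rules that apply to the LXD bridge.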

No idea what lxcbr0 is. I haven’t used it, and it is set as unmanaged.

As for iptables, I’m using UFW.

Well in that case can you kill the dnsmasq process listening on that interface to rule it out. Always best to keep things as simple as possible in my experience.

The output of those commands above please.

As for “sudo nft list ruleset” → Command not found.

Ah docker. See

and

Not sure if the first UFW rules did it… But containers are getting an IP again!

Basically, Docker modifies the iptables rules in a way that blocks the containers’ DHCP requests. Depending on the startup order of Docker versus LXD, it may or may not work without modifying the rules.

But if you then reload LXD, it wipes its own rules and re-adds them, which can then cause the Docker rules to take effect.
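The exact UFW rules applied above are not preserved in this thread. As an assumption, the sketch below uses the rules commonly suggested for letting traffic through an LXD bridge under UFW. The `run` and `allow_bridge` helpers and the `APPLY` variable are made up for illustration. By default the commands are only printed, so they can be reviewed before anything changes.

```shell
# Hypothetical dry-run wrapper: print the command unless APPLY=1 is set.
run() {
    if [ "${APPLY:-0}" = 1 ]; then
        sudo "$@"
    else
        echo "$@"
    fi
}

# Assumed rule set: allow traffic arriving on the bridge (DHCP and DNS to
# dnsmasq) and allow routed traffic in and out of the bridge.
allow_bridge() {
    run ufw allow in on "$1"
    run ufw route allow in on "$1"
    run ufw route allow out on "$1"
}

# Review first:   allow_bridge lxdbr0
# Then apply:     APPLY=1 allow_bridge lxdbr0
```

Because UFW rules are persistent, this approach should not depend on whether Docker or LXD starts first, though that is worth verifying after a reboot.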

I assume this is in combination with dnsmasq? As it worked fine for many weeks/months before.

It’s a well-known historical issue (search the forums for docker): Docker’s firewall rules prevent containers from reaching dnsmasq’s DHCP service. But as I explained, it depends on ordering, which can be unpredictable and can vary between systems.

So it’s been confirmed that it’s the raw.dnsmasq auth-zone=lxd setting that is causing the problem. See the GitHub issue “dnsmask process exited prematurely if raw.dnsmasq auth-zone set when using core20 snap” (Issue #8905, lxc/lxd) for more detail.