Hi,

to start from the beginning:

Running:

[root@manager /]# ssh root@lxdnode -i .ssh/lxdnode-key "lxc init local:centos/6/cloud lxc1610 -s node2-lxc-filestorage -p lxc1610"

This starts the following process on lxdnode:

38417 ? Ssl 0:00 lxc init local:centos/6/cloud lxc1610 -s node2-lxc-filestorage -p lxc1610

But nothing happens, and I mean absolutely nothing. LXD, started in debug mode, prints nothing at all.
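To see what the apparently idle client is waiting on, it may help to look at the process on lxdnode. The following is a minimal sketch, assuming a Linux /proc filesystem; `inspect_pid` is a hypothetical helper name, not part of LXD, and the PID to pass would be the one from the ps line above (38417 in this run).

```shell
#!/bin/sh
# inspect_pid is a hypothetical helper, not part of LXD.
# It prints the process state and where stdin/stdout/stderr point.
inspect_pid() {
    pid="$1"
    [ -d "/proc/$pid" ] || { echo "no such process: $pid" >&2; return 1; }
    # Process state line (e.g. "S (sleeping)") from the kernel's status file.
    grep '^State:' "/proc/$pid/status"
    # Targets of fd 0, 1, 2: a pipe or socket instead of /dev/pts/N
    # means that descriptor is not connected to a terminal.
    for fd in 0 1 2; do
        printf 'fd %s -> %s\n' "$fd" "$(readlink "/proc/$pid/fd/$fd")"
    done
}

# Example: inspect the current shell; replace $$ with the PID of the hung lxc process.
inspect_pid "$$"
```

Comparing this output for the process started via ssh with the one started from a local login shell would show whether the two runs differ in how their standard streams are connected.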

Now, when I press CTRL+C on the manager host, which ran ssh to start this lxc init command on lxdnode, the LXD debug log instantly explodes with output.

It writes:

DBUG[09-13|17:35:41] Handling user= method=GET url=/1.0 ip=@

DBUG[09-13|17:35:41]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"config": {},

"api_extensions": [

"storage_zfs_remove_snapshots",

"container_host_shutdown_timeout",

"container_stop_priority",

"container_syscall_filtering",

"auth_pki",

"container_last_used_at",

"etag",

"patch",

"usb_devices",

"https_allowed_credentials",

"image_compression_algorithm",

"directory_manipulation",

"container_cpu_time",

"storage_zfs_use_refquota",

"storage_lvm_mount_options",

"network",

"profile_usedby",

"container_push",

"container_exec_recording",

"certificate_update",

"container_exec_signal_handling",

"gpu_devices",

"container_image_properties",

"migration_progress",

"id_map",

"network_firewall_filtering",

"network_routes",

"storage",

"file_delete",

"file_append",

"network_dhcp_expiry",

"storage_lvm_vg_rename",

"storage_lvm_thinpool_rename",

"network_vlan",

"image_create_aliases",

"container_stateless_copy",

"container_only_migration",

"storage_zfs_clone_copy",

"unix_device_rename",

"storage_lvm_use_thinpool",

"storage_rsync_bwlimit",

"network_vxlan_interface",

"storage_btrfs_mount_options",

"entity_description",

"image_force_refresh",

"storage_lvm_lv_resizing",

"id_map_base",

"file_symlinks",

"container_push_target",

"network_vlan_physical",

"storage_images_delete",

"container_edit_metadata",

"container_snapshot_stateful_migration",

"storage_driver_ceph",

"storage_ceph_user_name",

"resource_limits",

"storage_volatile_initial_source",

"storage_ceph_force_osd_reuse",

"storage_block_filesystem_btrfs",

"resources",

"kernel_limits",

"storage_api_volume_rename",

"macaroon_authentication",

"network_sriov",

"console",

"restrict_devlxd",

"migration_pre_copy",

"infiniband",

"maas_network",

"devlxd_events",

"proxy",

"network_dhcp_gateway",

"file_get_symlink",

"network_leases",

"unix_device_hotplug",

"storage_api_local_volume_handling",

"operation_description",

"clustering",

"event_lifecycle",

"storage_api_remote_volume_handling",

"nvidia_runtime",

"container_mount_propagation",

"container_backup",

"devlxd_images",

"container_local_cross_pool_handling",

"proxy_unix",

"proxy_udp",

"clustering_join",

"proxy_tcp_udp_multi_port_handling",

"network_state",

"proxy_unix_dac_properties",

"container_protection_delete",

"unix_priv_drop",

"pprof_http",

"proxy_haproxy_protocol",

"network_hwaddr",

"proxy_nat",

"network_nat_order",

"container_full",

"candid_authentication",

"backup_compression",

"candid_config",

"nvidia_runtime_config",

"storage_api_volume_snapshots",

"storage_unmapped",

"projects",

"candid_config_key",

"network_vxlan_ttl",

"container_incremental_copy",

"usb_optional_vendorid",

"snapshot_scheduling",

"container_copy_project",

"clustering_server_address",

"clustering_image_replication",

"container_protection_shift",

"snapshot_expiry",

"container_backup_override_pool",

"snapshot_expiry_creation",

"network_leases_location",

"resources_cpu_socket",

"resources_gpu",

"resources_numa",

"kernel_features",

"id_map_current",

"event_location",

"storage_api_remote_volume_snapshots",

"network_nat_address",

"container_nic_routes",

"rbac",

"cluster_internal_copy",

"seccomp_notify",

"lxc_features",

"container_nic_ipvlan",

"network_vlan_sriov",

"storage_cephfs",

"container_nic_ipfilter",

"resources_v2",

"container_exec_user_group_cwd",

"container_syscall_intercept",

"container_disk_shift",

"storage_shifted",

"resources_infiniband",

"daemon_storage"

],

"api_status": "stable",

"api_version": "1.0",

"auth": "trusted",

"public": false,

"auth_methods": [

"tls"

],

"environment": {

"addresses": [],

"architectures": [

"x86_64",

"i686"

],

"certificate": "-----BEGIN CERTIFICATE-----\nMII[....]2h8\n-----END CERTIFICATE-----\n",

"certificate_fingerprint": "0ec634dbb5e[....]d1e6af4734cd6819d",

"driver": "lxc",

"driver_version": "3.2.1",

"kernel": "Linux",

"kernel_architecture": "x86_64",

"kernel_features": {

"netnsid_getifaddrs": "true",

"seccomp_listener": "true",

"shiftfs": "false",

"uevent_injection": "true",

"unpriv_fscaps": "true"

},

"kernel_version": "5.2.9-200.fc30.x86_64",

"lxc_features": {

"mount_injection_file": "true",

"network_gateway_device_route": "true",

"network_ipvlan": "true",

"network_l2proxy": "true",

"network_phys_macvlan_mtu": "true",

"seccomp_notify": "true"

},

"project": "default",

"server": "lxd",

"server_clustered": false,

"server_name": "lxdnode",

"server_pid": 29869,

"server_version": "3.17",

"storage": "dir | zfs",

"storage_version": "1 | 0.8.1-1"

}

}

}

DBUG[09-13|17:35:41] Handling method=GET url=/1.0/containers/lxc1607 ip=@ user=

DBUG[09-13|17:35:41] Database error: &errors.errorString{s:"No such object"}

DBUG[09-13|17:35:41]

{

"error": "not found",

"error_code": 404,

"type": "error"

}

This whole block is printed three times.

After that it prints:

DBUG[09-13|17:35:41] Handling method=GET url="/1.0/containers?recursion=1" ip=@ user=

DBUG[09-13|17:35:41]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": [

{

This is followed by the full list of installed lxc containers and their configuration.

The last one in that list is lxc1607 (it had been deleted, but I recreated it, hoping this would fix the “No such object” problem):

"architecture": "x86_64",

"config": {

"image.architecture": "amd64",

"image.description": "Centos 6 amd64 (20190913_07:08)",

"image.os": "Centos",

"image.release": "6",

"image.serial": "20190913_07:08",

"image.type": "squashfs",

"volatile.apply_template": "create",

"volatile.base_image": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"volatile.eth0.hwaddr": "00:16:3e:fc:f8:ba",

"volatile.idmap.base": "0",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[]"

},

"devices": {

"root": {

"path": "/",

"pool": "node2-lxc-filestorage",

"type": "disk"

}

},

"ephemeral": false,

"profiles": [

"lxc1607"

],

"stateful": false,

"description": "",

"created_at": "2019-09-13T17:39:20.958987679+02:00",

"expanded_config": {

"image.architecture": "amd64",

"image.description": "Centos 6 amd64 (20190913_07:08)",

"image.os": "Centos",

"image.release": "6",

"image.serial": "20190913_07:08",

"image.type": "squashfs",

"volatile.apply_template": "create",

"volatile.base_image": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"volatile.eth0.hwaddr": "00:16:3e:fc:f8:ba",

"volatile.idmap.base": "0",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[]"

},

"expanded_devices": {

"eth0": {

"host_name": "lxc1607",

"name": "eth0",

"nictype": "bridged",

"parent": "ovsbr",

"type": "nic"

},

"root": {

"path": "/",

"pool": "node2-lxc-filestorage",

"type": "disk"

}

},

"name": "lxc1607",

"status": "Stopped",

"status_code": 102,

"last_used_at": "1970-01-01T01:00:00+01:00",

"location": "none"

}

]

}

DBUG[09-13|17:41:03] Handling method=GET url="/1.0/containers/lxc1607/snapshots?recursion=1" ip=@ user=

DBUG[09-13|17:41:03]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": []

}

I don't know how this happened. The setup worked fine for two months, and then suddenly lxc init refused to create instances when it is started via ssh.

If it is started locally, while logged in to lxdnode, the identical command works as expected:

lxc init local:centos/6/cloud lxc1610 -s node2-lxc-filestorage -p lxc1610

Creating lxc1610

DBUG[09-13|17:52:09] Handling method=GET url=/1.0 ip=@ user=

DBUG[09-13|17:52:09]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"config": {},

"api_extensions": [

"storage_zfs_remove_snapshots",

"container_host_shutdown_timeout",

"container_stop_priority",

"container_syscall_filtering",

"auth_pki",

"container_last_used_at",

"etag",

"patch",

"usb_devices",

"https_allowed_credentials",

"image_compression_algorithm",

"directory_manipulation",

"container_cpu_time",

"storage_zfs_use_refquota",

"storage_lvm_mount_options",

"network",

"profile_usedby",

"container_push",

"container_exec_recording",

"certificate_update",

"container_exec_signal_handling",

"gpu_devices",

"container_image_properties",

"migration_progress",

"id_map",

"network_firewall_filtering",

"network_routes",

"storage",

"file_delete",

"file_append",

"network_dhcp_expiry",

"storage_lvm_vg_rename",

"storage_lvm_thinpool_rename",

"network_vlan",

"image_create_aliases",

"container_stateless_copy",

"container_only_migration",

"storage_zfs_clone_copy",

"unix_device_rename",

"storage_lvm_use_thinpool",

"storage_rsync_bwlimit",

"network_vxlan_interface",

"storage_btrfs_mount_options",

"entity_description",

"image_force_refresh",

"storage_lvm_lv_resizing",

"id_map_base",

"file_symlinks",

"container_push_target",

"network_vlan_physical",

"storage_images_delete",

"container_edit_metadata",

"container_snapshot_stateful_migration",

"storage_driver_ceph",

"storage_ceph_user_name",

"resource_limits",

"storage_volatile_initial_source",

"storage_ceph_force_osd_reuse",

"storage_block_filesystem_btrfs",

"resources",

"kernel_limits",

"storage_api_volume_rename",

"macaroon_authentication",

"network_sriov",

"console",

"restrict_devlxd",

"migration_pre_copy",

"infiniband",

"maas_network",

"devlxd_events",

"proxy",

"network_dhcp_gateway",

"file_get_symlink",

"network_leases",

"unix_device_hotplug",

"storage_api_local_volume_handling",

"operation_description",

"clustering",

"event_lifecycle",

"storage_api_remote_volume_handling",

"nvidia_runtime",

"container_mount_propagation",

"container_backup",

"devlxd_images",

"container_local_cross_pool_handling",

"proxy_unix",

"proxy_udp",

"clustering_join",

"proxy_tcp_udp_multi_port_handling",

"network_state",

"proxy_unix_dac_properties",

"container_protection_delete",

"unix_priv_drop",

"pprof_http",

"proxy_haproxy_protocol",

"network_hwaddr",

"proxy_nat",

"network_nat_order",

"container_full",

"candid_authentication",

"backup_compression",

"candid_config",

"nvidia_runtime_config",

"storage_api_volume_snapshots",

"storage_unmapped",

"projects",

"candid_config_key",

"network_vxlan_ttl",

"container_incremental_copy",

"usb_optional_vendorid",

"snapshot_scheduling",

"container_copy_project",

"clustering_server_address",

"clustering_image_replication",

"container_protection_shift",

"snapshot_expiry",

"container_backup_override_pool",

"snapshot_expiry_creation",

"network_leases_location",

"resources_cpu_socket",

"resources_gpu",

"resources_numa",

"kernel_features",

"id_map_current",

"event_location",

"storage_api_remote_volume_snapshots",

"network_nat_address",

"container_nic_routes",

"rbac",

"cluster_internal_copy",

"seccomp_notify",

"lxc_features",

"container_nic_ipvlan",

"network_vlan_sriov",

"storage_cephfs",

"container_nic_ipfilter",

"resources_v2",

"container_exec_user_group_cwd",

"container_syscall_intercept",

"container_disk_shift",

"storage_shifted",

"resources_infiniband",

"daemon_storage"

],

"api_status": "stable",

"api_version": "1.0",

"auth": "trusted",

"public": false,

"auth_methods": [

"tls"

],

"environment": {

"addresses": [],

"architectures": [

"x86_64",

"i686"

],

"certificate": "-----BEGIN CERTIFICATE-----\nMIIC[...]kdz2h8\n-----END CERTIFICATE-----\n",

"certificate_fingerprint": "0ec634dbb5ecba71d5dc70533c720beeefea828f9d75edbd1e6af4734cd6819d",

"driver": "lxc",

"driver_version": "3.2.1",

"kernel": "Linux",

"kernel_architecture": "x86_64",

"kernel_features": {

"netnsid_getifaddrs": "true",

"seccomp_listener": "true",

"shiftfs": "false",

"uevent_injection": "true",

"unpriv_fscaps": "true"

},

"kernel_version": "5.2.9-200.fc30.x86_64",

"lxc_features": {

"mount_injection_file": "true",

"network_gateway_device_route": "true",

"network_ipvlan": "true",

"network_l2proxy": "true",

"network_phys_macvlan_mtu": "true",

"seccomp_notify": "true"

},

"project": "default",

"server": "lxd",

"server_clustered": false,

"server_name": "lxdnode",

"server_pid": 29869,

"server_version": "3.17",

"storage": "dir | zfs",

"storage_version": "1 | 0.8.1-1"

}

}

}

DBUG[09-13|17:52:09] Handling user= method=GET url=/1.0/storage-pools/node2-lxc-filestorage ip=@

DBUG[09-13|17:52:09]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"config": {

"source": "/opt/storages/node2-lxc-filestorage",

"volatile.initial_source": "/opt/storages/node2-lxc-filestorage"

},

"description": "",

"name": "node2-lxc-filestorage",

"driver": "dir",

"used_by": [

"/1.0/containers/lxc1271",

"/1.0/containers/lxc1409",

"/1.0/containers/lxc1564",

"/1.0/containers/lxc1565",

"/1.0/containers/lxc1570",

"/1.0/containers/lxc1571",

"/1.0/containers/lxc1572",

"/1.0/containers/lxc1573",

"/1.0/containers/lxc1574",

"/1.0/containers/lxc1607",

"/1.0/profiles/lxc1271",

"/1.0/profiles/lxc1409",

"/1.0/profiles/lxc1564",

"/1.0/profiles/lxc1565",

"/1.0/profiles/lxc1570",

"/1.0/profiles/lxc1571",

"/1.0/profiles/lxc1572",

"/1.0/profiles/lxc1573",

"/1.0/profiles/lxc1574",

"/1.0/profiles/lxc1609",

"/1.0/profiles/lxc1610"

],

"status": "Created",

"locations": [

"none"

]

}

}

DBUG[09-13|17:52:09] Handling method=GET url=/1.0/images/aliases/centos%2F6%2Fcloud ip=@ user=

DBUG[09-13|17:52:09]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"description": "",

"target": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"name": "centos/6/cloud"

}

}

DBUG[09-13|17:52:09] Handling ip=@ user= method=GET url=/1.0/images/813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6

DBUG[09-13|17:52:09]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"auto_update": false,

"properties": {

"architecture": "amd64",

"description": "Centos 6 amd64 (20190913_07:08)",

"os": "Centos",

"release": "6",

"serial": "20190913_07:08",

"type": "squashfs"

},

"public": false,

"aliases": [

{

"name": "centos/6/cloud",

"description": ""

}

],

"architecture": "x86_64",

"cached": false,

"filename": "lxd.tar.xz",

"fingerprint": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"size": 88122432,

"update_source": {

"alias": "centos/6/cloud",

"certificate": "",

"protocol": "simplestreams",

"server": "https://images.linuxcontainers.org"

},

"created_at": "2019-09-13T02:00:00+02:00",

"expires_at": "1970-01-01T01:00:00+01:00",

"last_used_at": "2019-09-13T17:39:21.060281367+02:00",

"uploaded_at": "2019-09-13T12:47:51.163070372+02:00"

}

}

DBUG[09-13|17:52:09] Handling user= method=GET url=/1.0/events ip=@

DBUG[09-13|17:52:09] New event listener: 03537041-316a-4daf-bf87-21a66c29d5fb

DBUG[09-13|17:52:09] Handling method=POST url=/1.0/containers ip=@ user=

DBUG[09-13|17:52:09]

{

"architecture": "",

"config": {},

"devices": {

"root": {

"path": "/",

"pool": "node2-lxc-filestorage",

"type": "disk"

}

},

"ephemeral": false,

"profiles": [

"lxc1610"

],

"stateful": false,

"description": "",

"name": "lxc1610",

"source": {

"type": "image",

"certificate": "",

"fingerprint": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6"

},

"instance_type": ""

}

DBUG[09-13|17:52:09] Responding to container create

DBUG[09-13|17:52:09] New task operation: 5623117d-858c-4827-bf2d-dec778a9c53c

DBUG[09-13|17:52:09] Started task operation: 5623117d-858c-4827-bf2d-dec778a9c53c

DBUG[09-13|17:52:09]

{

"type": "async",

"status": "Operation created",

"status_code": 100,

"operation": "/1.0/operations/5623117d-858c-4827-bf2d-dec778a9c53c",

"error_code": 0,

"error": "",

"metadata": {

"id": "5623117d-858c-4827-bf2d-dec778a9c53c",

"class": "task",

"description": "Creating container",

"created_at": "2019-09-13T17:52:09.598852825+02:00",

"updated_at": "2019-09-13T17:52:09.598852825+02:00",

"status": "Running",

"status_code": 103,

"resources": {

"containers": [

"/1.0/containers/lxc1610"

]

},

"metadata": null,

"may_cancel": false,

"err": "",

"location": "none"

}

}

DBUG[09-13|17:52:09] Handling method=GET url=/1.0/operations/5623117d-858c-4827-bf2d-dec778a9c53c ip=@ user=

DBUG[09-13|17:52:09]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"id": "5623117d-858c-4827-bf2d-dec778a9c53c",

"class": "task",

"description": "Creating container",

"created_at": "2019-09-13T17:52:09.598852825+02:00",

"updated_at": "2019-09-13T17:52:09.598852825+02:00",

"status": "Running",

"status_code": 103,

"resources": {

"containers": [

"/1.0/containers/lxc1610"

]

},

"metadata": null,

"may_cancel": false,

"err": "",

"location": "none"

}

}

INFO[09-13|17:52:09] Creating container project=default name=lxc1610 ephemeral=false

INFO[09-13|17:52:09] Created container project=default name=lxc1610 ephemeral=false

DBUG[09-13|17:52:09] Creating DIR storage volume for container "lxc1610" on storage pool "node2-lxc-filestorage"

DBUG[09-13|17:52:09] Mounting DIR storage pool "node2-lxc-filestorage"

DBUG[09-13|17:52:10] Created DIR storage volume for container "lxc1610" on storage pool "node2-lxc-filestorage"

DBUG[09-13|17:52:10] Mounting DIR storage pool "node2-lxc-filestorage"

DBUG[09-13|17:52:10] Success for task operation: 5623117d-858c-4827-bf2d-dec778a9c53c

DBUG[09-13|17:52:10] Handling method=GET url=/1.0/containers/lxc1610 ip=@ user=

DBUG[09-13|17:52:10]

{

"type": "sync",

"status": "Success",

"status_code": 200,

"operation": "",

"error_code": 0,

"error": "",

"metadata": {

"architecture": "x86_64",

"config": {

"image.architecture": "amd64",

"image.description": "Centos 6 amd64 (20190913_07:08)",

"image.os": "Centos",

"image.release": "6",

"image.serial": "20190913_07:08",

"image.type": "squashfs",

"volatile.apply_template": "create",

"volatile.base_image": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"volatile.eth0.hwaddr": "00:16:3e:b3:da:29",

"volatile.idmap.base": "0",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[]"

},

"devices": {

"root": {

"path": "/",

"pool": "node2-lxc-filestorage",

"type": "disk"

}

},

"ephemeral": false,

"profiles": [

"lxc1610"

],

"stateful": false,

"description": "",

"created_at": "2019-09-13T17:52:09.623372307+02:00",

"expanded_config": {

"image.architecture": "amd64",

"image.description": "Centos 6 amd64 (20190913_07:08)",

"image.os": "Centos",

"image.release": "6",

"image.serial": "20190913_07:08",

"image.type": "squashfs",

"volatile.apply_template": "create",

"volatile.base_image": "813b589dbcd05568c6c9d3ce0c56bebf35703da95c47ffd2cd7a0cb2678895c6",

"volatile.eth0.hwaddr": "00:16:3e:b3:da:29",

"volatile.idmap.base": "0",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[]"

},

"expanded_devices": {

"eth0": {

"host_name": "lxc1610",

"name": "eth0",

"nictype": "bridged",

"parent": "ovsbr",

"type": "nic"

},

"root": {

"path": "/",

"pool": "node2-lxc-filestorage",

"type": "disk"

}

},

"name": "lxc1610",

"status": "Stopped",

"status_code": 102,

"last_used_at": "1970-01-01T01:00:00+01:00",

"location": "none"

}

}

So I have no idea why lxc init works if you first log in to the node and then issue the command, but does not work if you run the command through an ssh session (by the way, I also tried combining it with bash --login -c).
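One difference between the two cases that may be worth ruling out is the terminal: a command passed to ssh runs without a TTY and with stdin connected to the ssh channel, whereas a login shell has both attached to a terminal. The sketch below is only a way to test that assumption, not a confirmed cause. `ssh -n` (stdin from /dev/null) and `ssh -t` (force a pseudo-terminal) are standard OpenSSH options; `ssh_variant` and `DRY_RUN` are hypothetical names introduced here. Host, key and command are taken from the call above.

```shell
#!/bin/sh
# Sketch: run the same lxc init call with different stdin/TTY setups.
# HOST, KEY and REMOTE_CMD come from the report; DRY_RUN is a hypothetical switch.
HOST="root@lxdnode"
KEY=".ssh/lxdnode-key"
REMOTE_CMD="lxc init local:centos/6/cloud lxc1610 -s node2-lxc-filestorage -p lxc1610"
DRY_RUN="${DRY_RUN:-1}"

# Print the ssh command line for a given variant name.
ssh_variant() {
    case "$1" in
        plain)   printf 'ssh %s -i %s "%s"\n' "$HOST" "$KEY" "$REMOTE_CMD" ;;    # as in the report
        nostdin) printf 'ssh -n %s -i %s "%s"\n' "$HOST" "$KEY" "$REMOTE_CMD" ;; # stdin from /dev/null
        tty)     printf 'ssh -t %s -i %s "%s"\n' "$HOST" "$KEY" "$REMOTE_CMD" ;; # force a pseudo-terminal
        *)       return 1 ;;
    esac
}

for v in plain nostdin tty; do
    line=$(ssh_variant "$v")
    echo "$v: $line"
    # Only execute when explicitly requested with DRY_RUN=0.
    if [ "$DRY_RUN" = "0" ]; then
        sh -c "$line"
    fi
done
```

By default this only prints the three command lines. With DRY_RUN=0 it runs them one after another, so the container name would have to be changed (or the container deleted) between runs. If only the plain variant hangs, the problem is more likely in how the client handles its standard streams than in the database.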

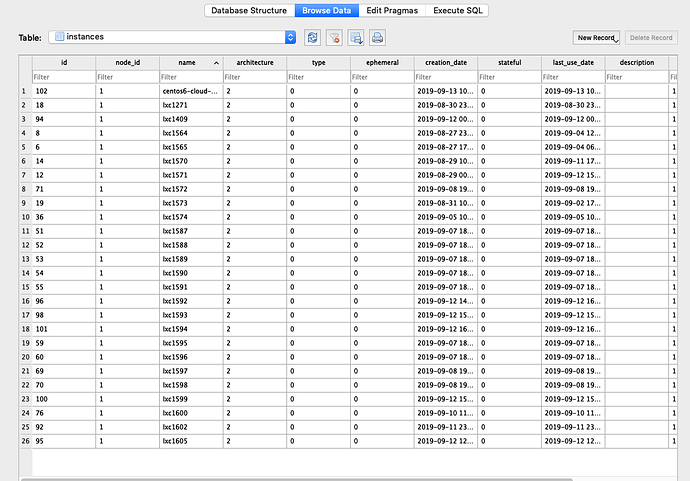

But it seems to me that something in the LXD database has gone quite wrong.

I have now seen this behaviour on 3 out of 10 hosts (all running the 3.17 snap) that have been in use for about half a year.

So at some point something must have gone wrong in the database when adding or removing containers. I will now start to examine the database in detail, but to me it feels like there is a bug somewhere, so I am reporting it in the hope that we can find it before it bites others too.

Greetings

Oliver