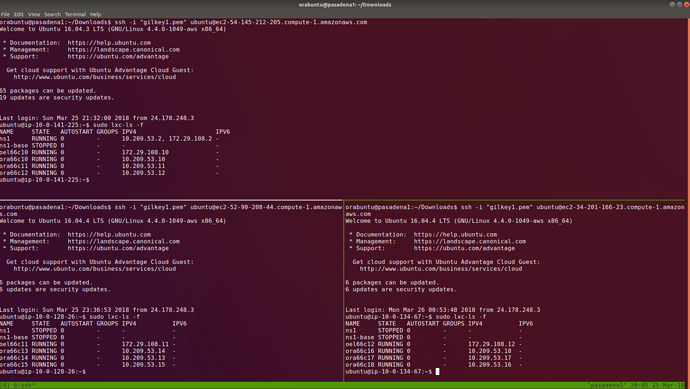

I’m following this tutorial: https://linuxacademy.com/blog/linux/multiple-lxd-hosts-can-share-a-discreet-layer-2-container-only-network/. I’ve built two servers and followed every step. When I start containers on my alpha server, they receive IP addresses, but when I do the same on my bravo server, no IP addresses are shown for the containers. Also, when I test DNS from within the containers, none of the containers on the bravo host can be resolved. When I move the containers over to alpha, they receive an IP and work normally.

Here is the ifconfig output from Host Alpha:

contgre Link encap:Ethernet HWaddr 52:d7:71:72:a0:fe

inet6 addr: fe80::50d7:71ff:fe72:a0fe/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:38 dropped:0 overruns:0 carrier:38

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

eth0 Link encap:Ethernet HWaddr 06:90:57:e2:9f:a2

inet addr:172.31.28.248 Bcast:172.31.31.255 Mask:255.255.240.0

inet6 addr: fe80::490:57ff:fee2:9fa2/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:9001 Metric:1

RX packets:136539 errors:0 dropped:0 overruns:0 frame:0

TX packets:51218 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:198565472 (198.5 MB) TX bytes:3815865 (3.8 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:192 errors:0 dropped:0 overruns:0 frame:0

TX packets:192 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:14456 (14.4 KB) TX bytes:14456 (14.4 KB)

lxdbr0 Link encap:Ethernet HWaddr 52:d7:71:72:a0:fe

inet addr:10.119.106.1 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fdc2:5811:bf6f:3e41::1/64 Scope:Global

inet6 addr: fe80::a844:56ff:fe36:9f5c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:132 errors:0 dropped:0 overruns:0 frame:0

TX packets:223 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:9654 (9.6 KB) TX bytes:264725 (264.7 KB)

vethS00AKH Link encap:Ethernet HWaddr fe:73:49:1e:fa:61

inet6 addr: fe80::fc73:49ff:fe1e:fa61/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:132 errors:0 dropped:0 overruns:0 frame:0

TX packets:214 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:11502 (11.5 KB) TX bytes:262955 (262.9 KB)

And here is the ifconfig output from Host Bravo:

contgre Link encap:Ethernet HWaddr 3a:c5:73:f5:f4:b6

inet6 addr: fe80::38c5:73ff:fef5:f4b6/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:8 dropped:0 overruns:0 carrier:8

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

eth0 Link encap:Ethernet HWaddr 06:5a:e1:27:47:6a

inet addr:172.31.21.195 Bcast:172.31.31.255 Mask:255.255.240.0

inet6 addr: fe80::45a:e1ff:fe27:476a/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:9001 Metric:1

RX packets:135921 errors:0 dropped:0 overruns:0 frame:0

TX packets:53664 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:198233389 (198.2 MB) TX bytes:3898083 (3.8 MB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:192 errors:0 dropped:0 overruns:0 frame:0

TX packets:192 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:14456 (14.4 KB) TX bytes:14456 (14.4 KB)

vethPBKGEJ Link encap:Ethernet HWaddr fe:7e:9c:f0:4a:7c

inet6 addr: fe80::fc7e:9cff:fef0:4a7c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:32 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8856 (8.8 KB) TX bytes:648 (648.0 B)

vethR5YHS6 Link encap:Ethernet HWaddr fe:fe:ed:c1:64:dc

inet6 addr: fe80::fcfe:edff:fec1:64dc/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1462 Metric:1

RX packets:30 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:8172 (8.1 KB) TX bytes:648 (648.0 B)

I’m running LXD/LXC 2.0.11 on both hosts.

I should also note that I’m running these instances on AWS and have configured two different network interfaces, one for each. Please let me know what I can do to debug or fix this. I’m new to LXC/LXD. Thanks.