I wonder if the common theme between your setup (ZFS on LUKS) and mine (ZFS on LVM) is that device mapper is being used as the underlying storage layer.

So QEMU with io_uring doesn’t work on LVM (and by extension device mapper), even directly.

@stgraber how do you want to proceed with this? Clearly we are going to need to disable io_uring entirely for LVM. But that won’t fix things for people using other storage pools on top of device mapper backed layers (like LVM or LUKS).

We could introduce a storage pool level setting to disable io_uring, but that wouldn’t work for externally attached disks, so we might also need a disk level setting. Or do we just remove io_uring support entirely until it’s more fully supported?

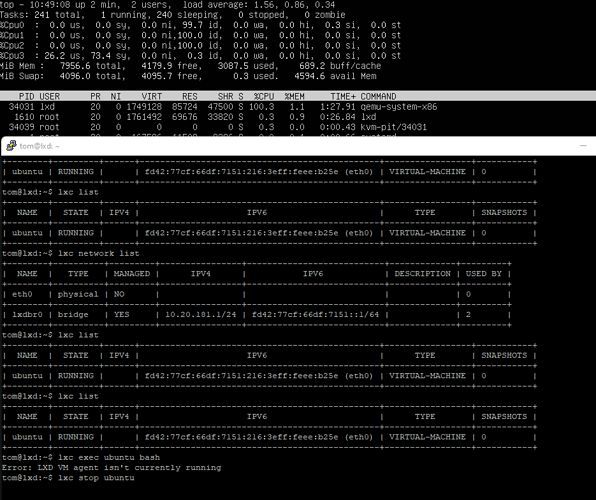

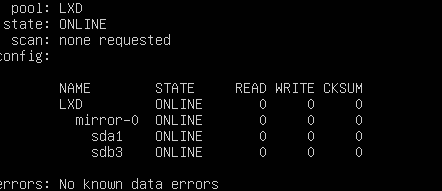

ZFS Mirror without LUKS encryption.

Same behavior. No IPv4 and I can’t stop the VM. I don’t see any disk I/O.

Is that running in a VM, so not on native ZFS disks?

Yes, it is running in a Hyper-V VM with nesting enabled.

2 virtual disks (VHDX), each with 1 OS partition and 1 ZFS partition, with the ZFS partitions in a mirror but without LUKS encryption.

What is the underlying storage?

Virtual dynamic block device (VHDX) on NVMe storage. Testing it on my PC.

My production servers are bare-metal.

It just seems like QEMU on top of io_uring doesn’t like being on anything other than real physical disks.

We’ve tried to account for that by detecting use of loop backed storage pools and disk images, but we cannot detect stacked storage layers/VM layers.
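For the narrower device mapper case, sysfs does expose the stacking of block devices, so a check along these lines is at least conceivable. This is only a rough sketch and not what LXD does: the function names are made up for illustration, and it assumes the usual `/sys/class/block/<name>/dm` and `/sys/class/block/<name>/slaves` layout. It would not see through ZFS pool vdevs, partitions, or a hypervisor layer such as VHDX.

```python
import os


def is_dm_device(name, sysfs_root="/sys"):
    """Return True if the block device exposes a device mapper 'dm' directory."""
    return os.path.isdir(os.path.join(sysfs_root, "class", "block", name, "dm"))


def sits_on_device_mapper(name, sysfs_root="/sys", _seen=None):
    """Walk the 'slaves' links downwards and report whether any layer is device mapper.

    Hypothetical helper: it only follows what sysfs lists under 'slaves',
    so ZFS vdevs, partitions and VM-level layers are not detected.
    """
    seen = set() if _seen is None else _seen
    if name in seen:
        return False  # already visited, avoid looping
    seen.add(name)

    if is_dm_device(name, sysfs_root):
        return True

    slaves_dir = os.path.join(sysfs_root, "class", "block", name, "slaves")
    try:
        slaves = os.listdir(slaves_dir)
    except OSError:
        return False  # no 'slaves' directory: nothing stacked underneath
    return any(sits_on_device_mapper(s, sysfs_root, seen) for s in slaves)
```

Calling `sits_on_device_mapper("dm-0")` on a host with an LVM or LUKS volume should report `True`, while a plain disk such as `sda` should report `False`. For ZFS on LUKS you would still have to find the pool's member devices some other way first.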

That is possible. I don’t have a physical server available right now to test it without LUKS, because they are in use without VMs.

I’ll leave this with @stgraber on how we proceed, but given that io_uring or QEMU + io_uring support is rather haphazard at the moment, I suspect we’ll need one or more settings to control io_uring at various levels, with my preference being to default to off until it matures.

Is there no workaround for this? I can’t start production VMs right now.

There is currently no manual option for disabling it, I’m afraid.

Using a loop backed storage pool will always disable it.

I have created an issue to track this:

I realise that it’s not much use for the problem right now (as you can’t switch from the latest/stable channel to the LTS channels), but if you need a more conservative release channel for LXD for your production workloads then 4.0/stable may suit you better, as the feature release channel latest/stable tends to move quite fast and gets all of the new features.

Please see

Yeah, I know. These kinds of bugs are not very common, so I accept it for now. Hopefully it will be possible to fix this today. Otherwise I have to migrate. Thanks Thomas!

I tried the Focal HWE kernel and that seems to work with LVM and ZFS atop LVM.

sudo apt install --install-recommends linux-generic-hwe-20.04

Then reboot.

It works in Hyper-V with ZFS. I will test it now on my bare-metal server with LUKS.

It does not accept my LUKS password anymore after the upgrade to the HWE kernel, and I don’t know why. I will reinstall the machine and put everything back. Unfortunately I don’t have a header backup.