Hi.

Firstly, I am just reporting my experience (on two different servers running Incus 6.6), as I am not sure whether this is still the expected behavior with this version of Incus:

incus launch images:debian/12 vdeb --vm

Inside the vm, install zfs after adding the requisite repositories:

root@vdeb:~# apt install -y linux-headers-amd64 zfsutils-linux zfs-dkms zfs-zed

The compile and install proceed (be patient - it’s not fast), but right at the end the zfs module fails to load. You’ll see this error or one like it:

modprobe: ERROR: could not insert 'zfs': Key was rejected by service

The ZFS modules are not loaded.

Try running '/sbin/modprobe zfs' as root to load them.

Rebooting does not fix this. The kernel is rejecting the module because the key DKMS signed it with is not yet trusted by secure boot. I believe you can disable secure boot to get this to work, but to me that defeats the purpose of having secure boot, so I wanted to do this more robustly. The solution is hinted at above, but I struggled a little to get it all to work, so I thought I would report the full working solution I found via Google (Debian-secure-boot), in the hope it’s useful to anyone who needs to get a self-signed module working with secure boot.
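Before enrolling anything, it may be worth confirming that secure boot really is what is blocking the module. The following is a minimal sketch, not part of my original steps: the helper names `check_sb` and `check_signer` are made up for illustration, and it assumes `mokutil` and `modinfo` are available inside the vm. It only reads state and changes nothing.

```shell
#!/bin/sh
# Sketch: read-only checks, run inside the vm as root.
# check_sb and check_signer are hypothetical helper names.

check_sb() {
    if command -v mokutil >/dev/null 2>&1; then
        # Reports whether secure boot is currently enabled
        mokutil --sb-state || echo "could not read secure boot state"
    else
        echo "mokutil not installed (apt install mokutil)"
    fi
}

check_signer() {
    mod="${1:-zfs}"
    # The "signer" field names the key that signed the module, if any
    signer=$(modinfo -F signer "$mod" 2>/dev/null || true)
    echo "signer of $mod: ${signer:-<none or module not found>}"
}

check_sb
check_signer zfs
```

If secure boot is enabled and the signer shown is the DKMS key rather than a Debian key, the enrollment steps below are most likely what you need.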

Issue the following command inside the vm. It will prompt you to set a password, which you will be asked for again when you enroll the key at the next boot. After that, power off the vm (do not reboot):

root@vdeb:~# mokutil --import /var/lib/dkms/mok.pub

input password:

input password again:

root@vdeb:~# poweroff #shut it down, do not restart it via 'reboot'
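If you want to double-check that the import request was actually queued, you can list the pending keys before powering off. This is only a sketch: `pending_mok` is a made-up wrapper name, and I am assuming your `mokutil` supports the `--list-new` option.

```shell
#!/bin/sh
# Sketch: run inside the vm as root, after 'mokutil --import' and before 'poweroff'.
# pending_mok is a hypothetical helper name.

pending_mok() {
    if ! command -v mokutil >/dev/null 2>&1; then
        echo "mokutil not installed"
        return 1
    fi
    # --list-new shows the keys waiting to be enrolled on the next boot
    mokutil --list-new
}

pending_mok || echo "no pending enrollment could be listed"
```

If your key is not shown as pending, repeat the import before shutting down.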

Now restart the vm, but WITH A CONSOLE attached. This is the best way to do it because, as you will see, you need console access rather quickly, and I found it best to attach at start. (Here obiwan: is the name of my remote; omit it if the vm is local.)

incus start obiwan:vdeb --console=vga

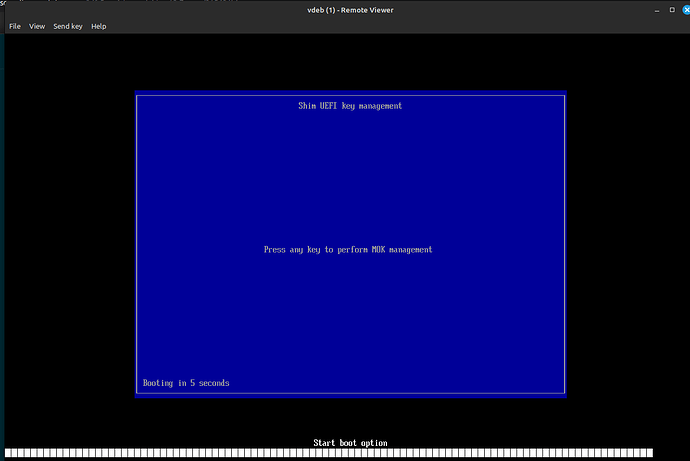

Pay attention to the console, because you only have a few seconds to select the option to add the signed key.

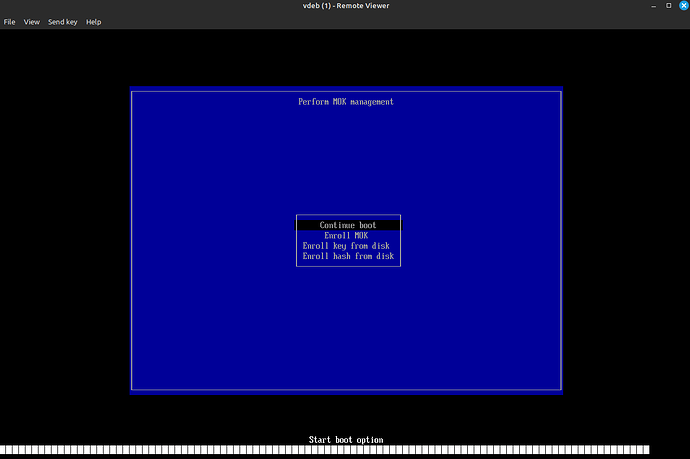

Quickly press a key in the console to enter the MOK management options. You’ll see this:

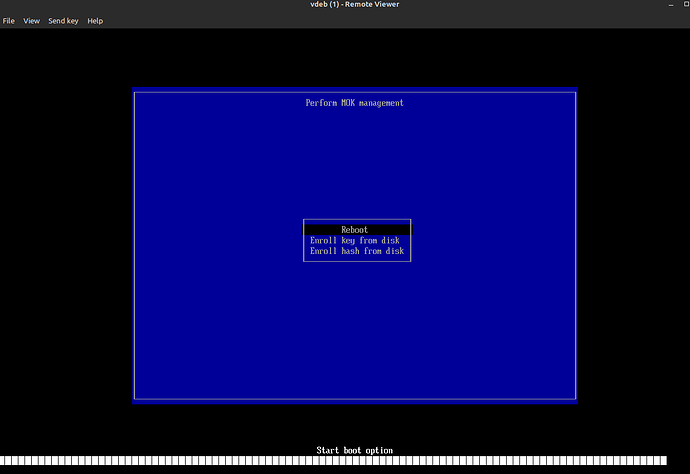

Select ‘Enroll MOK’ and follow the simple prompts. You can view the key fingerprints and then add the key, at which point it asks for the MOK password you set above. Once that is done, it returns you to a menu; just select reboot.

If you miss the window for ‘pressing a key’, just let the vm reboot, enter it via the agent, and repeat the ‘mokutil --import’ command to regenerate the boot option, then try again. It’s a little tricky, but there is enough time once you get used to it.

Once you have added your key and rebooted, enter the vm as normal (you can use the incus-agent or ssh now, because we are done with the console for this purpose). You will see the zfs module has been activated:

incus exec obiwan:vdeb bash

root@vdeb:~# zfs --version

zfs-2.1.11-1

zfs-kmod-2.1.11-1

root@vdeb:~#
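For a slightly more direct confirmation than the version string, something like the following sketch can be run inside the vm. The helper name `module_loaded` is made up for illustration, and it assumes the same key path as the import step above.

```shell
#!/bin/sh
# Sketch: run inside the vm as root after the post-enrollment reboot.
KEY=/var/lib/dkms/mok.pub   # same path as used with 'mokutil --import'

# module_loaded is a hypothetical helper name.
module_loaded() {
    # /proc/modules lists one loaded module per line, name first
    grep -q "^$1 " /proc/modules 2>/dev/null
}

if command -v mokutil >/dev/null 2>&1 && [ -f "$KEY" ]; then
    # Prints whether this key is enrolled; read the message, the exit status is ignored here
    mokutil --test-key "$KEY" || true
fi

if module_loaded zfs; then
    echo "zfs module is loaded"
else
    echo "zfs module is NOT loaded; try: modprobe zfs"
fi
```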

V/R

Andrew