Thanks for trying to help. I don't mean to ambush the forum.

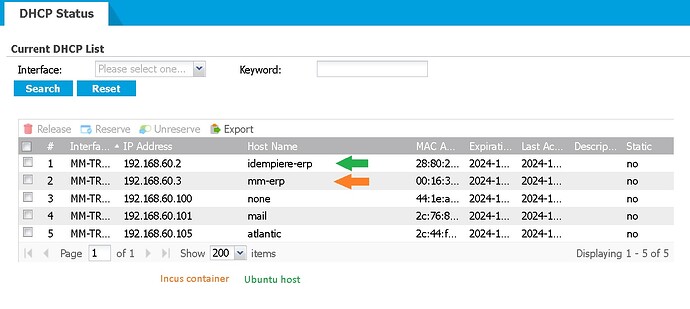

My goal is to have the container get its IP address from the router.

Is that possible?

I would like to use the router firewall to allow access to the container. If that isn’t possible, I can tweak iptables in the container for access.

Here’s my /etc/netplan config.

```yaml
network:
  version: 2
  renderer: NetworkManager
  ethernets:
    eno1:
      dhcp4: no
  bridges:
    br0:
      dhcp4: yes
      interfaces:
        - eno1
```

I feel like I'm getting close, but I think I need to either modify the “id” network or create a new network, and then assign it to the container.

Current network assigned to the container:

```
bret@idempiere-erp:~$ incus network show id
config:
  ipv4.address: 10.1.60.110/24
  ipv4.nat: "true"
  ipv6.address: fd42:5129:77c1:1dc0::1/64
  ipv6.nat: "true"
description: ""
name: id
type: bridge
used_by:
- /1.0/instances/mm-erp
- /1.0/profiles/default
managed: true
status: Created
locations:
- none
project: default
bret@idempiere-erp:~$
```

Current interfaces on the server:

```
bret@idempiere-erp:~$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br0 state UP group default qlen 1000
    link/ether 28:80:23:a7:1a:68 brd ff:ff:ff:ff:ff:ff
    altname enp3s0f0
3: eno2: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 28:80:23:a7:1a:69 brd ff:ff:ff:ff:ff:ff
    altname enp3s0f1
4: eno3: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 28:80:23:a7:1a:6a brd ff:ff:ff:ff:ff:ff
    altname enp3s0f2
5: eno4: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 28:80:23:a7:1a:6b brd ff:ff:ff:ff:ff:ff
    altname enp3s0f3
6: lxcbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 00:16:3e:00:00:00 brd ff:ff:ff:ff:ff:ff
    inet 10.0.3.1/24 brd 10.0.3.255 scope global lxcbr0
       valid_lft forever preferred_lft forever
7: bret-network: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 00:16:3e:85:03:da brd ff:ff:ff:ff:ff:ff
    inet 10.231.70.1/24 scope global bret-network
       valid_lft forever preferred_lft forever
    inet6 fd42:e095:b6ec:edf4::1/64 scope global
       valid_lft forever preferred_lft forever
8: id: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:16:3e:69:39:2e brd ff:ff:ff:ff:ff:ff
    inet 10.1.60.110/24 scope global id
       valid_lft forever preferred_lft forever
    inet6 fd42:5129:77c1:1dc0::1/64 scope global
       valid_lft forever preferred_lft forever
    inet6 fe80::216:3eff:fe69:392e/64 scope link
       valid_lft forever preferred_lft forever
9: incusbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 00:16:3e:d0:fd:66 brd ff:ff:ff:ff:ff:ff
    inet 10.176.150.1/24 scope global incusbr0
       valid_lft forever preferred_lft forever
    inet6 fd42:1e8d:25e1:5d58::1/64 scope global
       valid_lft forever preferred_lft forever
10: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 28:80:23:a7:1a:68 brd ff:ff:ff:ff:ff:ff
    inet 192.168.60.2/24 brd 192.168.60.255 scope global dynamic noprefixroute br0
       valid_lft 165157sec preferred_lft 165157sec
    inet6 fe80::2a80:23ff:fea7:1a68/64 scope link
       valid_lft forever preferred_lft forever
12: veth51309bff@if11: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master id state UP group default qlen 1000
    link/ether 6e:67:42:c8:51:25 brd ff:ff:ff:ff:ff:ff link-netnsid 0
```
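A small Python sketch (stdlib `ipaddress` only) makes the address mismatch in this output concrete: it compares the subnet of the Incus-managed `id` bridge with the LAN that `br0` was leased into by the router. The assumption, labeled in the code too, is that the router's LAN is the 192.168.60.0/24 network shown on `br0`; `on_router_lan` is just a hypothetical helper name for illustration.

```python
import ipaddress

# Addresses copied from the `ip a` / `incus network show id` output above.
# "id" is the Incus-managed bridge (ipv4.nat: "true"); br0 holds the host's DHCP lease.
id_bridge = ipaddress.ip_interface("10.1.60.110/24")
br0 = ipaddress.ip_interface("192.168.60.2/24")

# Assumption: the router's LAN is the /24 subnet that br0 was leased into.
router_lan = br0.network


def on_router_lan(addr: str) -> bool:
    """Hypothetical helper: True if addr lies inside the router's LAN subnet."""
    return ipaddress.ip_address(addr) in router_lan


print(f"id subnet:  {id_bridge.network}")  # 10.1.60.0/24
print(f"router LAN: {router_lan}")  # 192.168.60.0/24
print(f"overlap:    {id_bridge.network.overlaps(router_lan)}")  # False
# The host's own address on the id bridge is not on the router's LAN either.
print(f"host id address on router LAN: {on_router_lan(str(id_bridge.ip))}")  # False
```

Because the two subnets don't overlap and `id` has NAT enabled, the router only ever sees the host's 192.168.60.2; anything attached to `id` sits behind the host's NAT, out of direct view of the router's DHCP server and firewall.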

Any suggestions are welcome.