Hi,

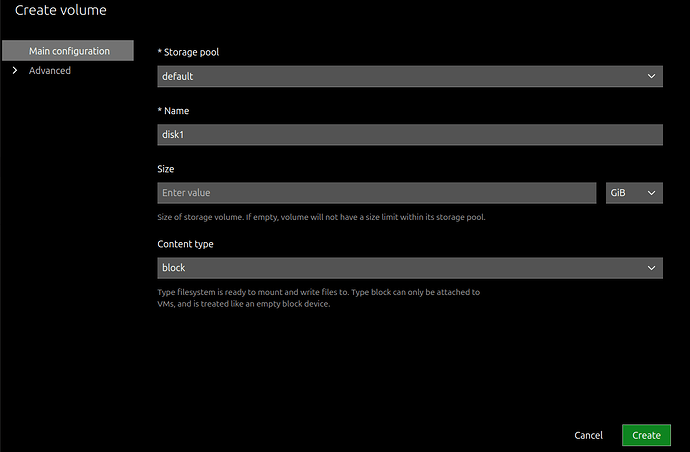

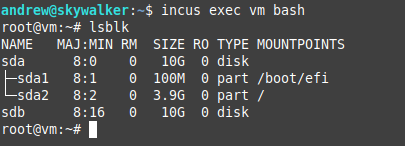

I just want to confirm an expected behavior in case I am misunderstanding it. I normally create all my instances from the CLI, but today I was adding storage while experimenting with a new approach, and I used the GUI instead (I can never remember the storage syntax and end up re-doing commands a lot, so I thought I'd try something different). Using the GUI, I created a block storage volume (disk1) in my default (ZFS) pool, which is backed by multi-TB NVMe storage, and attached it to an existing virtual machine (‘vm’). While creating the volume I was pleasantly surprised to see an option to NOT fix the size of the disk. I tried it and, well, it seems to work.
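For anyone who, like me, prefers the CLI, the GUI steps above roughly correspond to two `incus` commands: `incus storage volume create <pool> <volume> --type=block` (optionally with a `size=...` key) and `incus storage volume attach <pool> <volume> <instance>`. The sketch below is a hypothetical Python helper (`volume_commands` and `run` are made-up names for illustration) that only builds and prints those commands unless `dry_run=False` is passed. It deliberately leaves `size` out when none is given, because what size Incus then applies is exactly what I'm asking about, so nothing is assumed about it here.

```python
from __future__ import annotations

import shlex
import subprocess


def volume_commands(pool: str, volume: str, instance: str,
                    size: str | None = None) -> list[list[str]]:
    """Build (without running) the incus commands that create a custom
    block volume in `pool` and attach it to `instance`.

    Hypothetical helper, for illustration only.
    """
    create = ["incus", "storage", "volume", "create", pool, volume,
              "--type=block"]
    if size is not None:
        # Optional fixed size such as "50GiB"; left out entirely when None.
        create.append(f"size={size}")
    attach = ["incus", "storage", "volume", "attach", pool, volume, instance]
    return [create, attach]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; only execute it when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Mirrors the GUI example: volume disk1 in pool default, attached to vm.
    run(volume_commands("default", "disk1", "vm"))
```

Printing first and executing only on request keeps it safe to try; pass `size="..."` to pin a fixed size instead.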

I'd like to sanity-check a few things before I do too much with these:

- I wasn't aware that an attached disk can have a dynamic size. Is this correct, or am I misunderstanding the output (or heading for a storage-full train wreck down the line)?
- As I add data, I expect the block volume to grow dynamically up to the limit of the actual zpool without any intervention on my part, yes? Kind of like how my containers just grow without me worrying about it. Very cool if that is true for attached storage too.
- Is an "unlimited" size for a block volume, as opposed to a fixed size, likely to have a noticeable impact on performance, i.e. on observed read/write speeds?
- Will Incus TRIM the disk as I delete files? (Not a big deal.) My storage is actually flash (NVMe), but Incus doesn't really know that, so technically I would need DEALLOCATE rather than TRIM, if I understand this correctly? As I say, not a biggie, I'm just curious.

I use the excellent `copy --refresh` function to create failover hot backups of the VMs and containers that are important to me, and it's the single biggest contributor to my decent uptime. I am aware that I can separately copy a VM (vm), a container (c1) and, say, a default-pool block storage volume (default:disk1) from srv1: to srv2:, but I can't figure out the syntax to live-copy a VM AND its attached disk(s) together using `--refresh`. Is this possible? Could you help untangle the syntax for a hypothetical srv1:vm with the attached storage volume disk1, to be `copy --refresh`ed to srv2:?
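For reference, this is roughly the "separate copies" approach I already use, not the single combined command I'm asking about. The hypothetical Python helper below (`refresh_commands` and `run` are illustrative names) builds one `incus storage volume copy ... --refresh` per attached custom volume, addressed as `remote:pool/volume`, followed by `incus copy ... --refresh` for the instance itself. Two assumptions to check: that `--refresh` is available for `incus storage volume copy` on the installed version (see `incus storage volume copy --help`), and that copying the volumes first is the right order so the disk device the VM references already exists on the destination. Whether any of this is safe while the VM is running is part of the question, so it is not assumed.

```python
from __future__ import annotations

import shlex
import subprocess


def refresh_commands(src: str, dst: str, instance: str, pool: str,
                     volumes: list[str]) -> list[list[str]]:
    """Build incus commands that refresh each attached custom volume and
    then the instance itself from remote `src` to remote `dst`.

    Hypothetical helper; the volume-first ordering is an assumption.
    """
    cmds: list[list[str]] = []
    for vol in volumes:
        # Custom volumes are addressed as <remote>:<pool>/<volume>.
        cmds.append(["incus", "storage", "volume", "copy",
                     f"{src}:{pool}/{vol}", f"{dst}:{pool}/{vol}",
                     "--refresh"])
    # Then the instance itself, e.g. srv1:vm -> srv2:vm.
    cmds.append(["incus", "copy", f"{src}:{instance}", f"{dst}:{instance}",
                 "--refresh"])
    return cmds


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; only execute it when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # The hypothetical case from the question: srv1:vm with disk1 attached.
    run(refresh_commands("srv1", "srv2", "vm", "default", ["disk1"]))
```

With the defaults it only prints the commands, which makes it easy to review them before running anything against srv2:.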

Sorry for the long list. It's not urgent; everything runs, I am just experimenting with an overhaul of how I operate.

THANK YOU for the brilliant Incus!

Andrew