I did some comparisons for the btrfs, lvm and zfs storage drivers using the incus-benchmark tool.

The Incus host is an Ubuntu virtual machine (with nested virtualization) running on an M3 MacBook Pro. I admit this may not be a favourable scenario for all storage drivers.

All storage pools were created with the default settings.

incus storage create btrfs btrfs

incus storage create lvm lvm

incus storage create zfs zfs

Observations

Before getting into the benchmark, here are some things I observed while trying out the storage drivers.

- btrfs has no visibility of the quota set from within the container instance; df -h reports the global size.

- zfs can only restore the most recent snapshot; you need to delete newer snapshots before restoring an older one is allowed.

- lvm is also a solid option, even though zfs and btrfs are the ones getting all the attention.

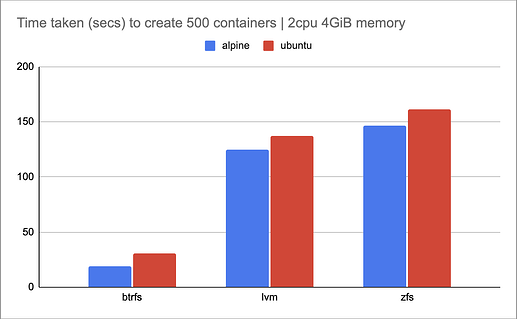

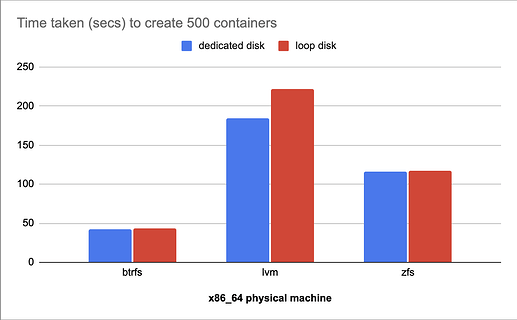

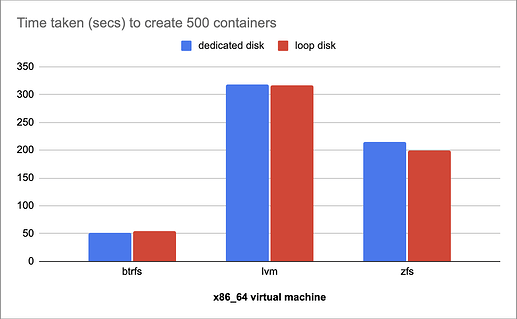

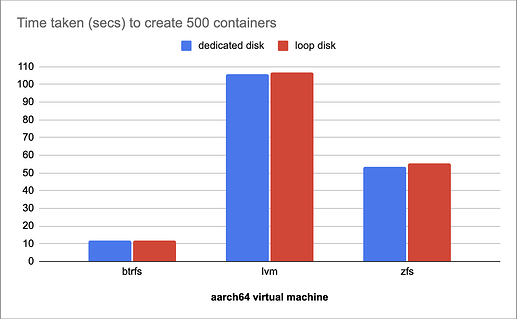

Speed (creation of containers)

The clear winner is btrfs, and it is not even close. lvm, in turn, beats zfs.

The virtual machine was initially configured with 2 CPUs and 4 GiB of memory.

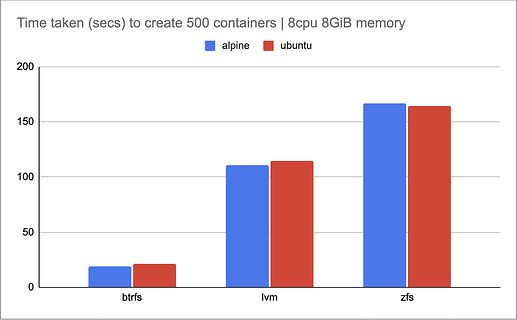
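To put "not even close" into numbers, here is a minimal sketch that divides each driver's time by the fastest one. The timings are copied from the 2 CPU / 4 GiB block under "The numbers"; the dictionary and function names are my own, and I am assuming each figure is the total time for the 500-container run.

```python
# Seconds per run, copied from "The numbers" (2 CPUs, 4 GiB, 500 containers).
creation_seconds = {
    "btrfs": {"ubuntu": 30.375, "alpine": 19.112},
    "lvm": {"ubuntu": 137.585, "alpine": 124.836},
    "zfs": {"ubuntu": 161.012, "alpine": 146.246},
}


def slowdown_vs_fastest(results, image):
    """Return {driver: time / fastest_time} for one image."""
    fastest = min(times[image] for times in results.values())
    return {driver: times[image] / fastest for driver, times in results.items()}


for image in ("ubuntu", "alpine"):
    ratios = slowdown_vs_fastest(creation_seconds, image)
    # Print the fastest driver first.
    for driver, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"{image:<7} {driver:<6} {ratio:.1f}x")
```

A ratio of 1.0x marks the fastest driver for that image; anything above it is how many times longer the run took.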

I assumed (wrongly) that the storage drivers would take advantage of multiple CPUs and more resources, so I bumped the virtual machine to 8 CPUs and 8 GiB of memory.

btrfs and lvm got somewhat faster and zfs did not improve at all, but the overall picture is the same.

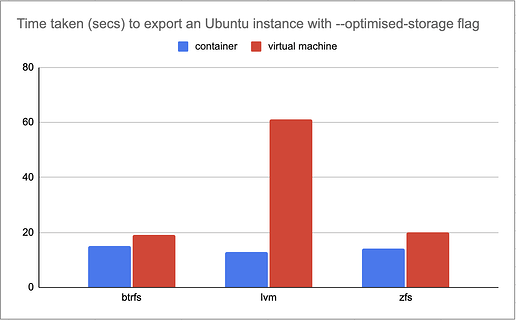
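For a rough view of how much the bigger virtual machine helped, the sketch below computes the percentage change per driver and image. The values are copied from the two benchmark blocks under "The numbers"; the variable names are only illustrative.

```python
# Seconds for 500 containers, copied from "The numbers".
small_vm = {  # 2 CPUs, 4 GiB
    "btrfs": {"ubuntu": 30.375, "alpine": 19.112},
    "lvm": {"ubuntu": 137.585, "alpine": 124.836},
    "zfs": {"ubuntu": 161.012, "alpine": 146.246},
}
large_vm = {  # 8 CPUs, 8 GiB
    "btrfs": {"ubuntu": 21.006, "alpine": 19.050},
    "lvm": {"ubuntu": 115.015, "alpine": 110.528},
    "zfs": {"ubuntu": 164.790, "alpine": 166.859},
}


def percent_change(before, after):
    """Relative change in percent; negative means the larger VM was faster."""
    return (after - before) / before * 100.0


for driver, images in small_vm.items():
    for image, before in images.items():
        change = percent_change(before, large_vm[driver][image])
        print(f"{driver:<6} {image:<7} {change:+.1f}%")
```

These are single runs on a nested virtual machine, so small differences in either direction may well be noise.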

Speed (instance export)

I compared exporting a plain ubuntu instance with --optimized-storage for both a container and a virtual machine, though I am aware lvm has no support for optimised storage export.

They all performed similarly for containers. For virtual machines, zfs and btrfs are still comparable, with lvm lagging far behind.

Disk usage

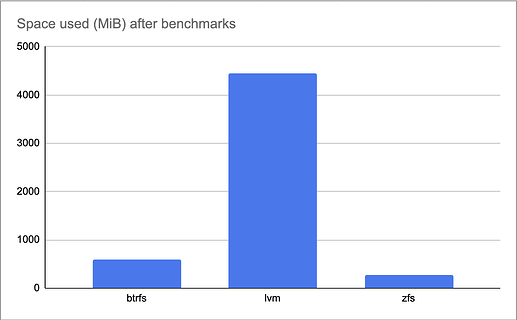

And lastly, I compared the disk usage after the benchmarks were done to see which driver makes the most efficient use of disk space.

In this category zfs shines. btrfs is a distant second at more than twice the usage of zfs, and lvm is far behind both at 4.45 GiB.
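The "space used" values come in mixed units, so a small sketch helps to compare them on one scale. The strings are copied from the incus storage info output under "The numbers"; the parser is my own helper and only handles the MiB and GiB suffixes that appear there.

```python
import re

# Only the two units that show up in the reported output.
UNIT_TO_MIB = {"MiB": 1.0, "GiB": 1024.0}


def to_mib(size):
    """Convert a string such as '4.45GiB' to a float number of MiB."""
    match = re.fullmatch(r"([0-9.]+)(MiB|GiB)", size)
    if match is None:
        raise ValueError(f"unsupported size string: {size!r}")
    value, unit = match.groups()
    return float(value) * UNIT_TO_MIB[unit]


# "space used" per pool, copied from the incus storage info output.
space_used = {"btrfs": "588.69MiB", "lvm": "4.45GiB", "zfs": "264.93MiB"}

used_mib = {driver: to_mib(size) for driver, size in space_used.items()}
baseline = min(used_mib.values())

# Print the smallest footprint first, with its ratio to the smallest.
for driver, mib in sorted(used_mib.items(), key=lambda kv: kv[1]):
    print(f"{driver:<6} {mib:8.2f} MiB  {mib / baseline:.1f}x")
```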

The numbers

The full benchmark results follow.

# exporting with --optimized-storage | virtual machines

=======================================================

btrfs: time: 19s archive_size: 264m

lvm: time: 61s archive_size: 274m

zfs: time: 20s archive_size: 270m

# exporting with --optimized-storage | containers

=================================================

btrfs: time: 15s archive_size: 163m

lvm: time: 13s archive_size: 162m

zfs: time: 14s archive_size: 166m

# benchmark: cpu 2, memory 4GiB | 500 containers

================================================

btrfs: ubuntu: 30.375s alpine: 19.112s

lvm: ubuntu: 137.585s alpine: 124.836s

zfs: ubuntu: 161.012s alpine: 146.246s

# benchmark: cpu 8, memory 8GiB | 500 containers

================================================

btrfs: ubuntu: 21.006s alpine: 19.050s

lvm: ubuntu: 115.015s alpine: 110.528s

zfs: ubuntu: 164.790s alpine: 166.859s

# disk usage after benchmarks

=============================

$ incus storage info btrfs

info:

description: ""

driver: btrfs

name: btrfs

space used: 588.69MiB

total space: 30.00GiB

used by:

images:

- d7d17321ac277c94a3a49dcef4a2a10ab7e44d48e14e6accb6dead0fc1dde65d

- ec70399387b8877539b36e40787ca0426aa870bd6c514da7b209f97cbdfa8291

$ incus storage info lvm

info:

description: ""

driver: lvm

name: lvm

space used: 4.45GiB

total space: 29.93GiB

used by:

images:

- d7d17321ac277c94a3a49dcef4a2a10ab7e44d48e14e6accb6dead0fc1dde65d

- ec70399387b8877539b36e40787ca0426aa870bd6c514da7b209f97cbdfa8291

$ incus storage info zfs

info:

description: ""

driver: zfs

name: zfs

space used: 264.93MiB

total space: 28.58GiB

used by:

images:

- d7d17321ac277c94a3a49dcef4a2a10ab7e44d48e14e6accb6dead0fc1dde65d

- ec70399387b8877539b36e40787ca0426aa870bd6c514da7b209f97cbdfa8291