Naah. I give up

I deployed a 3-node Ceph cluster. It works fine.

[root@ceph01 ~]# ceph -s

cluster:

id: 47fb8a70-6bfc-425d-9934-a2b77155d36c

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph01

mgr: ceph02(active), standbys: ceph01

osd: 6 osds: 6 up, 6 in

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 7.2 GiB used, 41 GiB / 48 GiB avail

pgs: 1 active+clean

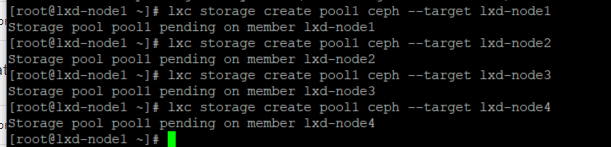

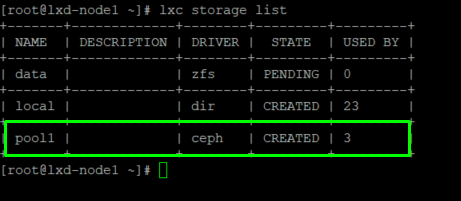

Then I tried to create a storage pool from an LXC server.

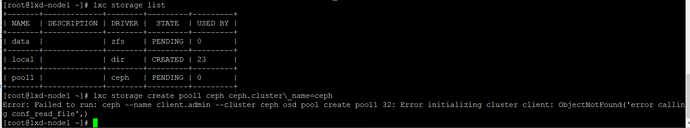

lxc storage create pool1 ceph ceph.cluster_name=ceph

But I constantly get the same error.

**Error: Failed to create storage pool 'ceph': Failed to run: ceph --name client.admin --cluster ceph osd pool create lxd 32: Error initializing cluster client: ObjectNotFound('error calling conf_read_file',)**

This happens despite the fact that my LXD server can ping a Ceph cluster node (one of the 3 nodes).

[root@node4 ~]# ping ceph

PING ceph (10.0.1.125) 56(84) bytes of data.

64 bytes from ceph (10.0.1.125): icmp_seq=1 ttl=64 time=0.284 ms

64 bytes from ceph (10.0.1.125): icmp_seq=2 ttl=64 time=0.339 ms

64 bytes from ceph (10.0.1.125): icmp_seq=3 ttl=64 time=0.490 ms

64 bytes from ceph (10.0.1.125): icmp_seq=4 ttl=64 time=0.314 ms

What am I doing wrong?

stgraber

August 21, 2020, 3:34am

2

Can you show your /etc/ceph/ceph.conf?

The LXD snap currently ships an older version of Ceph, so some options in that file may confuse it.

Yeah, I can. This is from the node I’m trying to connect to.

[global]

fsid = 47fb8a70-6bfc-425d-9934-a2b77155d36c

mon_initial_members = ceph01

mon_host = 10.0.1.153

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 10.0.1.0/24

Is there any mistake? I can’t quite understand what the problem is.

stgraber

August 21, 2020, 4:27pm

5

Hmm, no, that looks correct.

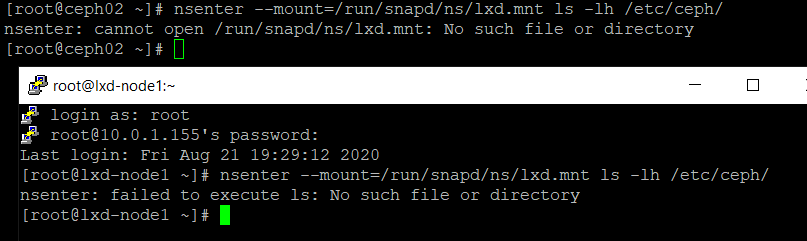

nsenter --mount=/run/snapd/ns/lxd.mnt ls -lh /etc/ceph/

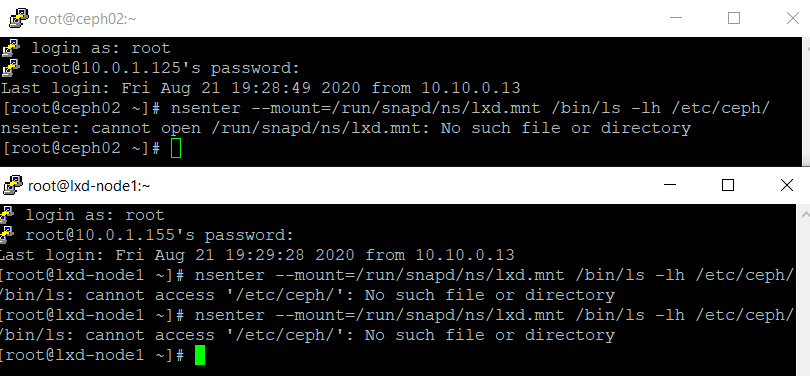

Nah, none of the servers succeeded.

stgraber

August 21, 2020, 4:34pm

7

Hmm, what LXD version is that?

4.4

Ah, I’m running CentOS 8.2 btw.

stgraber

August 21, 2020, 4:43pm

10

Ah, that may explain some of this.

Try:

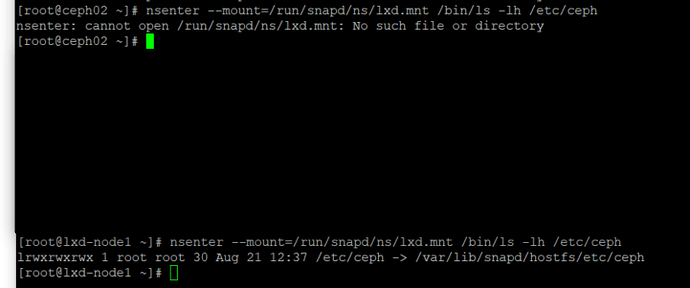

nsenter --mount=/run/snapd/ns/lxd.mnt /bin/ls -lh /etc/ceph

stgraber

August 21, 2020, 6:07pm

14

nsenter --mount=/run/snapd/ns/lxd.mnt /bin/ls -lh /etc/ceph/

stgraber

August 21, 2020, 6:58pm

16

Oh, okay, so it may be a very simple problem.

Do you have a /etc/ceph/ceph.conf and a /etc/ceph/ceph.client.admin.keyring on every one of your LXD nodes? If not, then that’s the issue: LXD needs those two files there to access Ceph.

You can simply copy those two from one of your Ceph cluster nodes.
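As a rough sketch of that check, the helper below lists which of the two files are missing from a directory and prints (without running) the scp commands that would fetch them. The function names are made up for illustration, and `ceph01` plus root SSH access are assumptions based on the hostnames and prompts shown in this thread, so adjust them to your setup.

```shell
#!/bin/sh
# Hypothetical helpers, not part of LXD or Ceph.

# List which of the two files LXD needs are missing from a directory.
# Prints one missing file name per line; prints nothing if both exist.
missing_ceph_files() {
    dir="${1:-/etc/ceph}"
    for f in ceph.conf ceph.client.admin.keyring; do
        [ -f "$dir/$f" ] || echo "$f"
    done
}

# Print (do not run) the scp commands that would fetch the missing files.
# Assumption: "ceph01" is a Ceph node reachable over SSH as root.
suggest_copy() {
    src_host="${1:-ceph01}"
    dir="${2:-/etc/ceph}"
    for f in $(missing_ceph_files "$dir"); do
        echo "scp root@$src_host:/etc/ceph/$f $dir/$f"
    done
}
```

Running `suggest_copy` with no arguments on each LXD node prints the copy commands for whatever is missing under /etc/ceph; no output means both files are already there.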

stgraber

August 21, 2020, 11:14pm

19

In general, to connect to Ceph, the connecting machine also needs the ceph.conf and a keyring. I usually go as far as installing ceph-common on those machines so I can run ceph status and related commands there too.
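A minimal sketch of that sanity check, assuming the `ceph` command is provided by the ceph-common package on your distribution: the hypothetical function below runs `ceph status` with the same `--cluster` and `--name` values that appear in the LXD error message above, or reports that the CLI is missing.

```shell
#!/bin/sh
# Hypothetical helper: verify that this machine can talk to the cluster.
# Assumption: the ceph CLI comes from the ceph-common package.
ceph_client_check() {
    if command -v ceph >/dev/null 2>&1; then
        # Same cluster name and client identity that LXD uses in the error above.
        ceph --cluster ceph --name client.admin status
    else
        echo "ceph CLI not found; try installing ceph-common" >&2
        return 1
    fi
}
```

If `ceph_client_check` prints the cluster status on an LXD node, the ceph.conf and keyring on that node are usable from the host. Note that the snap-packaged LXD looks at /etc/ceph from inside its own mount namespace, which is what the nsenter commands earlier in the thread inspect.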