Required information

- Distribution: Ubuntu

- Distribution version: 20.04

- The output of “lxc info” or if that fails:

root@iic-worker-203-gpu:~# lxc info

config:

cluster.https_address: 10.102.32.203:8443

core.https_address: 10.102.32.203:8443

core.trust_password: true

api_extensions:

- storage_zfs_remove_snapshots

- container_host_shutdown_timeout

- container_stop_priority

- container_syscall_filtering

- auth_pki

- container_last_used_at

- etag

- patch

- usb_devices

- https_allowed_credentials

- image_compression_algorithm

- directory_manipulation

- container_cpu_time

- storage_zfs_use_refquota

- storage_lvm_mount_options

- network

- profile_usedby

- container_push

- container_exec_recording

- certificate_update

- container_exec_signal_handling

- gpu_devices

- container_image_properties

- migration_progress

- id_map

- network_firewall_filtering

- network_routes

- storage

- file_delete

- file_append

- network_dhcp_expiry

- storage_lvm_vg_rename

- storage_lvm_thinpool_rename

- network_vlan

- image_create_aliases

- container_stateless_copy

- container_only_migration

- storage_zfs_clone_copy

- unix_device_rename

- storage_lvm_use_thinpool

- storage_rsync_bwlimit

- network_vxlan_interface

- storage_btrfs_mount_options

- entity_description

- image_force_refresh

- storage_lvm_lv_resizing

- id_map_base

- file_symlinks

- container_push_target

- network_vlan_physical

- storage_images_delete

- container_edit_metadata

- container_snapshot_stateful_migration

- storage_driver_ceph

- storage_ceph_user_name

- resource_limits

- storage_volatile_initial_source

- storage_ceph_force_osd_reuse

- storage_block_filesystem_btrfs

- resources

- kernel_limits

- storage_api_volume_rename

- macaroon_authentication

- network_sriov

- console

- restrict_devlxd

- migration_pre_copy

- infiniband

- maas_network

- devlxd_events

- proxy

- network_dhcp_gateway

- file_get_symlink

- network_leases

- unix_device_hotplug

- storage_api_local_volume_handling

- operation_description

- clustering

- event_lifecycle

- storage_api_remote_volume_handling

- nvidia_runtime

- container_mount_propagation

- container_backup

- devlxd_images

- container_local_cross_pool_handling

- proxy_unix

- proxy_udp

- clustering_join

- proxy_tcp_udp_multi_port_handling

- network_state

- proxy_unix_dac_properties

- container_protection_delete

- unix_priv_drop

- pprof_http

- proxy_haproxy_protocol

- network_hwaddr

- proxy_nat

- network_nat_order

- container_full

- candid_authentication

- backup_compression

- candid_config

- nvidia_runtime_config

- storage_api_volume_snapshots

- storage_unmapped

- projects

- candid_config_key

- network_vxlan_ttl

- container_incremental_copy

- usb_optional_vendorid

- snapshot_scheduling

- snapshot_schedule_aliases

- container_copy_project

- clustering_server_address

- clustering_image_replication

- container_protection_shift

- snapshot_expiry

- container_backup_override_pool

- snapshot_expiry_creation

- network_leases_location

- resources_cpu_socket

- resources_gpu

- resources_numa

- kernel_features

- id_map_current

- event_location

- storage_api_remote_volume_snapshots

- network_nat_address

- container_nic_routes

- rbac

- cluster_internal_copy

- seccomp_notify

- lxc_features

- container_nic_ipvlan

- network_vlan_sriov

- storage_cephfs

- container_nic_ipfilter

- resources_v2

- container_exec_user_group_cwd

- container_syscall_intercept

- container_disk_shift

- storage_shifted

- resources_infiniband

- daemon_storage

- instances

- image_types

- resources_disk_sata

- clustering_roles

- images_expiry

- resources_network_firmware

- backup_compression_algorithm

- ceph_data_pool_name

- container_syscall_intercept_mount

- compression_squashfs

- container_raw_mount

- container_nic_routed

- container_syscall_intercept_mount_fuse

- container_disk_ceph

- virtual-machines

- image_profiles

- clustering_architecture

- resources_disk_id

- storage_lvm_stripes

- vm_boot_priority

- unix_hotplug_devices

- api_filtering

- instance_nic_network

- clustering_sizing

- firewall_driver

- projects_limits

- container_syscall_intercept_hugetlbfs

- limits_hugepages

- container_nic_routed_gateway

- projects_restrictions

- custom_volume_snapshot_expiry

- volume_snapshot_scheduling

- trust_ca_certificates

- snapshot_disk_usage

- clustering_edit_roles

- container_nic_routed_host_address

- container_nic_ipvlan_gateway

- resources_usb_pci

- resources_cpu_threads_numa

- resources_cpu_core_die

- api_os

- container_nic_routed_host_table

- container_nic_ipvlan_host_table

- container_nic_ipvlan_mode

- resources_system

- images_push_relay

- network_dns_search

- container_nic_routed_limits

- instance_nic_bridged_vlan

- network_state_bond_bridge

- usedby_consistency

- custom_block_volumes

- clustering_failure_domains

- resources_gpu_mdev

- console_vga_type

- projects_limits_disk

- network_type_macvlan

- network_type_sriov

- container_syscall_intercept_bpf_devices

- network_type_ovn

- projects_networks

- projects_networks_restricted_uplinks

- custom_volume_backup

- backup_override_name

- storage_rsync_compression

- network_type_physical

- network_ovn_external_subnets

- network_ovn_nat

- network_ovn_external_routes_remove

- tpm_device_type

- storage_zfs_clone_copy_rebase

- gpu_mdev

- resources_pci_iommu

- resources_network_usb

- resources_disk_address

- network_physical_ovn_ingress_mode

- network_ovn_dhcp

- network_physical_routes_anycast

- projects_limits_instances

- network_state_vlan

- instance_nic_bridged_port_isolation

- instance_bulk_state_change

- network_gvrp

- instance_pool_move

- gpu_sriov

- pci_device_type

- storage_volume_state

- network_acl

- migration_stateful

- disk_state_quota

- storage_ceph_features

- projects_compression

- projects_images_remote_cache_expiry

- certificate_project

- network_ovn_acl

- projects_images_auto_update

- projects_restricted_cluster_target

- images_default_architecture

- network_ovn_acl_defaults

- gpu_mig

- project_usage

- network_bridge_acl

- warnings

- projects_restricted_backups_and_snapshots

- clustering_join_token

- clustering_description

- server_trusted_proxy

- clustering_update_cert

- storage_api_project

- server_instance_driver_operational

- server_supported_storage_drivers

- event_lifecycle_requestor_address

- resources_gpu_usb

- clustering_evacuation

- network_ovn_nat_address

- network_bgp

- network_forward

- custom_volume_refresh

- network_counters_errors_dropped

- metrics

- image_source_project

- clustering_config

- network_peer

- linux_sysctl

- network_dns

- ovn_nic_acceleration

- certificate_self_renewal

- instance_project_move

- storage_volume_project_move

- cloud_init

- network_dns_nat

- database_leader

- instance_all_projects

- clustering_groups

- ceph_rbd_du

- instance_get_full

- qemu_metrics

- gpu_mig_uuid

- event_project

- clustering_evacuation_live

- instance_allow_inconsistent_copy

- network_state_ovn

- storage_volume_api_filtering

- image_restrictions

- storage_zfs_export

- network_dns_records

- storage_zfs_reserve_space

- network_acl_log

- storage_zfs_blocksize

- metrics_cpu_seconds

- instance_snapshot_never

- certificate_token

- instance_nic_routed_neighbor_probe

- event_hub

- agent_nic_config

- projects_restricted_intercept

- metrics_authentication

- images_target_project

- cluster_migration_inconsistent_copy

- cluster_ovn_chassis

- container_syscall_intercept_sched_setscheduler

- storage_lvm_thinpool_metadata_size

api_status: stable

api_version: "1.0"

auth: trusted

public: false

auth_methods:

- tls

environment:

addresses:

- 10.102.32.203:8443

architectures:

- x86_64

- i686

certificate: |

-----BEGIN CERTIFICATE-----

MIICKDCCAa2gAwIBAgIQPi2SwUguTgGpHLrxy1T2MDAKBggqhkjOPQQDAzBAMRww

GgYDVQQKExNsaW51eGNvbnRhaW5lcnMub3JnMSAwHgYDVQQDDBdyb290QGlpYy13

b3JrZXItMjAzLWdwdTAeFw0yMTA2MDcwODQzMzRaFw0zMTA2MDUwODQzMzRaMEAx

HDAaBgNVBAoTE2xpbnV4Y29udGFpbmVycy5vcmcxIDAeBgNVBAMMF3Jvb3RAaWlj

LXdvcmtlci0yMDMtZ3B1MHYwEAYHKoZIzj0CAQYFK4EEACIDYgAE6GnbhFAXm/uI

b8AsSLAKpSHAdJIDCavgQDy8COQ4H4oLrYLAGcDIhiekM8IdcqjrwDhWKEyjuFpn

3GyZF3VzAM6VlRsMYPrJjdU8JL05pFkWTuYKZBIggzTndlSkX0kwo2wwajAOBgNV

HQ8BAf8EBAMCBaAwEwYDVR0lBAwwCgYIKwYBBQUHAwEwDAYDVR0TAQH/BAIwADA1

BgNVHREELjAsghJpaWMtd29ya2VyLTIwMy1ncHWHBH8AAAGHEAAAAAAAAAAAAAAA

AAAAAAEwCgYIKoZIzj0EAwMDaQAwZgIxANwoIM8smxsxZqrnVGah9ZykI/R016OO

eC8EPSMGKJFkC40Ekday/w0/MM2pMs37KAIxAPpSxX8qHIw8aWRS1hGlFh+/TNSW

MuGOHB1CD5j8/EDT6DRAl7RubS3OmVrQzHv++Q==

-----END CERTIFICATE-----

certificate_fingerprint: 5d0551db4936203f6d90cafd47e7257ff7e4608920cd6203348c3f474eafe443

driver: lxc | qemu

driver_version: 4.0.12 | 6.1.1

firewall: xtables

kernel: Linux

kernel_architecture: x86_64

kernel_features:

idmapped_mounts: "false"

netnsid_getifaddrs: "true"

seccomp_listener: "true"

seccomp_listener_continue: "true"

shiftfs: "false"

uevent_injection: "true"

unpriv_fscaps: "true"

kernel_version: 5.4.0-73-generic

lxc_features:

cgroup2: "true"

core_scheduling: "true"

devpts_fd: "true"

idmapped_mounts_v2: "true"

mount_injection_file: "true"

network_gateway_device_route: "true"

network_ipvlan: "true"

network_l2proxy: "true"

network_phys_macvlan_mtu: "true"

network_veth_router: "true"

pidfd: "true"

seccomp_allow_deny_syntax: "true"

seccomp_notify: "true"

seccomp_proxy_send_notify_fd: "true"

os_name: Ubuntu

os_version: "20.04"

project: default

server: lxd

server_clustered: true

server_event_mode: full-mesh

server_name: iic-worker-203-gpu

server_pid: 185115

server_version: 5.0.0

storage: zfs

storage_version: 0.8.3-1ubuntu12.7

storage_supported_drivers:

- name: btrfs

version: 5.4.1

remote: false

- name: cephfs

version: 15.2.14

remote: true

- name: dir

version: "1"

remote: false

- name: lvm

version: 2.03.07(2) (2019-11-30) / 1.02.167 (2019-11-30) / 4.41.0

remote: false

- name: zfs

version: 0.8.3-1ubuntu12.7

remote: false

- name: ceph

version: 15.2.14

remote: true

Issue description

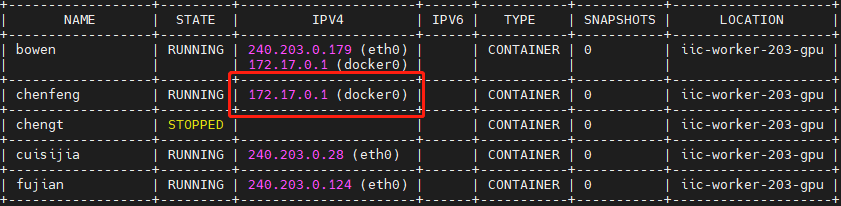

My container has had no IPv4 address since I rebooted it, and I cannot connect to the container's port over SSH. Please help!

Information to attach

- Any relevant kernel output (dmesg)

- Container log (lxc info NAME --show-log)

root@iic-worker-203-gpu:~# lxc info chenfeng --show-log

Name: chenfeng

Status: RUNNING

Type: container

Architecture: x86_64

Location: iic-worker-203-gpu

PID: 261156

Created: 2021/12/04 23:39 CST

Last Used: 2022/04/28 10:12 CST

Resources:

Processes: 124

Disk usage:

root: 449.00GiB

CPU usage:

CPU usage (in seconds): 7

Memory usage:

Memory (current): 314.24MiB

Memory (peak): 315.55MiB

Network usage:

eth0:

Type: broadcast

State: DOWN

Host interface: veth97584cad

MAC address: 00:16:3e:e4:31:a8

MTU: 1450

Bytes received: 698B

Bytes sent: 1.35kB

Packets received: 2

Packets sent: 10

IP addresses:

lo:

Type: loopback

State: UP

MTU: 65536

Bytes received: 1.58kB

Bytes sent: 1.58kB

Packets received: 10

Packets sent: 10

IP addresses:

inet: 127.0.0.1/8 (local)

inet6: ::1/128 (local)

docker0:

Type: broadcast

State: UP

MAC address: 02:42:11:62:d3:75

MTU: 1500

Bytes received: 0B

Bytes sent: 0B

Packets received: 0

Packets sent: 0

IP addresses:

inet: 172.17.0.1/16 (global)

Log:

lxc chenfeng 20220428021220.281 WARN conf - conf.c:lxc_map_ids:3592 - newuidmap binary is missing

lxc chenfeng 20220428021220.282 WARN conf - conf.c:lxc_map_ids:3598 - newgidmap binary is missing

lxc chenfeng 20220428021220.283 WARN conf - conf.c:lxc_map_ids:3592 - newuidmap binary is missing

lxc chenfeng 20220428021220.283 WARN conf - conf.c:lxc_map_ids:3598 - newgidmap binary is missing

lxc chenfeng 20220428021220.285 WARN cgfsng - cgroups/cgfsng.c:fchowmodat:1252 - No such file or directory - Failed to fchownat(40, memory.oom.group, 1000000000, 0, AT_EMPTY_PATH | AT_SYMLINK_NOFOLLOW )

lxc chenfeng 20220428021902.566 WARN conf - conf.c:lxc_map_ids:3592 - newuidmap binary is missing

lxc chenfeng 20220428021902.566 WARN conf - conf.c:lxc_map_ids:3598 - newgidmap binary is missing

- Container configuration (lxc config show NAME --expanded)

root@iic-worker-203-gpu:~# lxc config show chenfeng --expanded

architecture: x86_64

config:

image.architecture: amd64

image.description: Ubuntu focal amd64 (20210606_07:42)

image.name: ubuntu-focal-amd64-default-20210606_07:42

image.os: ubuntu

image.release: focal

image.serial: "20210606_07:42"

image.variant: default

volatile.base_image: 2b97dfd0c6e5cb8243f67860a75fbdeab3acccde05cd6782cabe65f2c79e6ef4

volatile.eth0.host_name: veth97584cad

volatile.eth0.hwaddr: 00:16:3e:e4:31:a8

volatile.idmap.base: "0"

volatile.idmap.current: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'

volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'

volatile.last_state.idmap: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'

volatile.last_state.power: RUNNING

volatile.uuid: 3d6c1c00-0292-459b-a623-ed3f8d56748e

devices:

eth0:

name: eth0

network: lxdfan0

type: nic

gpu:

type: gpu

proxy0:

bind: host

connect: tcp:240.203.0.107:22

listen: tcp:10.102.32.203:60029

type: proxy

root:

path: /

pool: pool1

size: 1024GB

type: disk

ephemeral: false

profiles:

- default1T

stateful: false

- Main daemon log (at /var/log/lxd/lxd.log or /var/snap/lxd/common/lxd/logs/lxd.log)

lxd.log

- Output of the client with --debug

- Output of the daemon with --debug (alternatively output of lxc monitor while reproducing the issue)