@amikhalitsyn

Sorry for being naive in my earlier post.

Here is the output of `cat /proc/1/mountinfo`:

24 30 0:22 / /sys rw,nosuid,nodev,noexec,relatime shared:7 - sysfs sysfs rw

25 30 0:23 / /proc rw,nosuid,nodev,noexec,relatime shared:12 - proc proc rw

26 30 0:5 / /dev rw,nosuid,relatime shared:2 - devtmpfs udev rw,size=1056604824k,nr_inodes=264151206,mode=755,inode64

27 26 0:24 / /dev/pts rw,nosuid,noexec,relatime shared:3 - devpts devpts rw,gid=5,mode=620,ptmxmode=000

28 30 0:25 / /run rw,nosuid,nodev,noexec,relatime shared:5 - tmpfs tmpfs rw,size=211332328k,mode=755,inode64

30 1 253:0 / / rw,relatime shared:1 - ext4 /dev/mapper/ubuntu--vg-ubuntu--lv rw

31 24 0:6 / /sys/kernel/security rw,nosuid,nodev,noexec,relatime shared:8 - securityfs securityfs rw

32 26 0:27 / /dev/shm rw,nosuid,nodev shared:4 - tmpfs tmpfs rw,inode64

33 28 0:28 / /run/lock rw,nosuid,nodev,noexec,relatime shared:6 - tmpfs tmpfs rw,size=5120k,inode64

34 24 0:29 / /sys/fs/cgroup rw,nosuid,nodev,noexec,relatime shared:9 - cgroup2 cgroup2 rw

35 24 0:30 / /sys/fs/pstore rw,nosuid,nodev,noexec,relatime shared:10 - pstore pstore rw

36 24 0:31 / /sys/fs/bpf rw,nosuid,nodev,noexec,relatime shared:11 - bpf bpf rw,mode=700

37 25 0:32 / /proc/sys/fs/binfmt_misc rw,relatime shared:13 - autofs systemd-1 rw,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=15335

38 26 0:33 / /dev/hugepages rw,relatime shared:14 - hugetlbfs hugetlbfs rw,pagesize=2M

39 26 0:20 / /dev/mqueue rw,nosuid,nodev,noexec,relatime shared:15 - mqueue mqueue rw

40 24 0:7 / /sys/kernel/debug rw,nosuid,nodev,noexec,relatime shared:16 - debugfs debugfs rw

41 24 0:12 / /sys/kernel/tracing rw,nosuid,nodev,noexec,relatime shared:17 - tracefs tracefs rw

42 24 0:34 / /sys/fs/fuse/connections rw,nosuid,nodev,noexec,relatime shared:18 - fusectl fusectl rw

43 24 0:21 / /sys/kernel/config rw,nosuid,nodev,noexec,relatime shared:19 - configfs configfs rw

65 28 0:35 / /run/credentials/systemd-sysusers.service ro,nosuid,nodev,noexec,relatime shared:20 - ramfs none rw,mode=700

89 30 8:2 / /boot rw,relatime shared:30 - ext4 /dev/sda2 rw

92 30 7:0 / /snap/core20/1738 ro,nodev,relatime shared:45 - squashfs /dev/loop0 ro,errors=continue

95 30 7:1 / /snap/core20/1778 ro,nodev,relatime shared:47 - squashfs /dev/loop1 ro,errors=continue

98 30 7:2 / /snap/lxd/22923 ro,nodev,relatime shared:49 - squashfs /dev/loop2 ro,errors=continue

101 30 7:3 / /snap/lxd/23541 ro,nodev,relatime shared:51 - squashfs /dev/loop3 ro,errors=continue

104 30 7:4 / /snap/snapd/17883 ro,nodev,relatime shared:53 - squashfs /dev/loop4 ro,errors=continue

107 30 7:5 / /snap/snapd/17950 ro,nodev,relatime shared:55 - squashfs /dev/loop5 ro,errors=continue

110 28 0:36 / /run/rpc_pipefs rw,relatime shared:57 - rpc_pipefs sunrpc rw

113 37 0:37 / /proc/sys/fs/binfmt_misc rw,nosuid,nodev,noexec,relatime shared:59 - binfmt_misc binfmt_misc rw

586 28 0:25 /snapd/ns /run/snapd/ns rw,nosuid,nodev,noexec,relatime - tmpfs tmpfs rw,size=211332328k,mode=755,inode64

609 586 0:4 mnt:[4026534947] /run/snapd/ns/lxd.mnt rw - nsfs nsfs rw

741 30 0:55 / /var/snap/lxd/common/lxd/disk_nfs rw,relatime shared:327 - nfs 192.168.54.185:/ifs/docker/cisco-143.15 rw,vers=3,rsize=131072,wsize=524288,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,mountaddr=192.168.54.185,mountvers=3,mountport=300,mountproto=udp,local_lock=none,addr=192.168.54.185

614 28 0:44 / /run/user/1000 rw,nosuid,nodev,relatime shared:325 - tmpfs tmpfs rw,size=211332324k,nr_inodes=52833081,mode=700,uid=1000,gid=1000,inode64
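
For anyone reading the dump: each line follows the field layout documented in proc(5). The fields are mount ID, parent ID, major:minor, root, mount point, mount options, zero or more optional fields such as `shared:7`, and a lone `-` separator, followed by filesystem type, mount source and superblock options. The Python sketch below uses hypothetical helper names (`parse_mountinfo_line`, `nfs_mounts`) to split a line on that layout and pick out the NFS mounts. In this dump, the only one is the LXD storage at `/var/snap/lxd/common/lxd/disk_nfs`. It assumes whitespace inside paths is octal-escaped (e.g. `\040`), as the kernel does.

```python
"""Minimal parser for /proc/<pid>/mountinfo lines (field layout per proc(5))."""
from dataclasses import dataclass


@dataclass
class MountInfo:
    mount_id: int
    parent_id: int
    major_minor: str
    root: str
    mount_point: str
    mount_options: str
    optional_fields: list[str]
    fs_type: str
    source: str
    super_options: str


def parse_mountinfo_line(line: str) -> MountInfo:
    fields = line.split()
    # Optional fields (e.g. "shared:7") start at index 6 and end at a lone "-".
    sep = fields.index("-", 6)
    return MountInfo(
        mount_id=int(fields[0]),
        parent_id=int(fields[1]),
        major_minor=fields[2],
        root=fields[3],
        mount_point=fields[4],
        mount_options=fields[5],
        optional_fields=fields[6:sep],
        fs_type=fields[sep + 1],
        source=fields[sep + 2],
        super_options=fields[sep + 3] if len(fields) > sep + 3 else "",
    )


def nfs_mounts(text: str) -> list[MountInfo]:
    """Return the NFS mounts in a mountinfo dump; blank lines are skipped."""
    mounts = [parse_mountinfo_line(l) for l in text.splitlines() if l.strip()]
    return [m for m in mounts if m.fs_type in ("nfs", "nfs4")]
```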

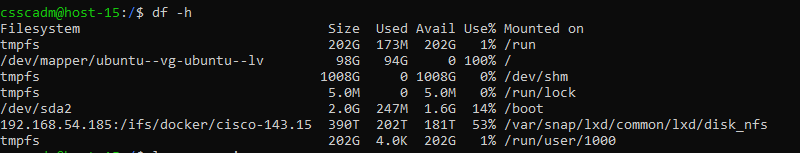

Setup: I am running LXD VMs on an Ubuntu 22.04 host with NFS as the main storage. The ZFS pools reside on the NFS share.

Problem: There was a huge gap between `du` and `df`, and it kept increasing. When `df` finally hit 100% disk usage, the containers became unresponsive, though the host itself seemed fine. I then restarted the host, which apparently fixed the `du` vs. `df` anomaly, but I could not start the LXD containers because the corresponding volumes appear to be missing from the zpool.
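
`df` and `du` measure different things. `df` reads the filesystem's own block counters (statvfs), while `du` sums the blocks of the files it can reach by walking directories. Space held by files that were deleted while a process still had them open, or by files hidden under a mount point, counts for `df` but not for `du`. The post does not establish which, if either, applies here. The Python sketch below, with hypothetical helper names, computes both numbers for one mount point, similar in spirit to comparing `df` with `du -sx`, so the gap can be tracked over time.

```python
"""Compare df-style (filesystem counters) and du-style (file walk) usage."""
import os


def df_used_bytes(path: str) -> int:
    """Used bytes as df reports them: total blocks minus free blocks."""
    st = os.statvfs(path)
    return (st.f_blocks - st.f_bfree) * st.f_frsize


def _same_device(path: str, dev: int) -> bool:
    try:
        return os.lstat(path).st_dev == dev
    except OSError:
        return False  # entry vanished or is unreadable; skip it


def du_used_bytes(path: str) -> int:
    """Allocated bytes of files and directories under path, on one filesystem."""
    root_dev = os.lstat(path).st_dev
    seen = set()  # (device, inode) pairs, so hard links are counted once
    total = 0
    for dirpath, dirnames, filenames in os.walk(path):
        # Like `du -x`: do not descend into other mounted filesystems.
        dirnames[:] = [
            d for d in dirnames
            if _same_device(os.path.join(dirpath, d), root_dev)
        ]
        entries = [dirpath] + [os.path.join(dirpath, f) for f in filenames]
        for entry in entries:
            try:
                st = os.lstat(entry)
            except OSError:
                continue  # removed during the walk
            key = (st.st_dev, st.st_ino)
            if key in seen:
                continue
            seen.add(key)
            total += st.st_blocks * 512  # st_blocks is in 512-byte units
    return total


def usage_gap(path: str) -> int:
    """df minus du in bytes; a growing positive value matches the gap in the post."""
    return df_used_bytes(path) - du_used_bytes(path)
```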

root@host-15:/# zpool list

| NAME | SIZE | ALLOC | FREE | CKPOINT | EXPANDSZ | FRAG | CAP | DEDUP | HEALTH | ALTROOT |
|---|---|---|---|---|---|---|---|---|---|---|
| cssc-it-service-1 | 1.36T | 652K | 1.36T | - | 496G | 0% | 0% | 1.00x | ONLINE | - |

and

root@host-15:/# zfs list

| NAME | USED | AVAIL | REFER | MOUNTPOINT |
|---|---|---|---|---|
| cssc-it-service-1 | 652K | 1.32T | 24K | legacy |
| cssc-it-service-1/containers | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/custom | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/deleted | 120K | 1.32T | 24K | legacy |
| cssc-it-service-1/deleted/containers | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/deleted/custom | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/deleted/images | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/deleted/virtual-machines | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/images | 24K | 1.32T | 24K | legacy |
| cssc-it-service-1/virtual-machines | 24K | 1.32T | 24K | legacy |

However, there should be at least 4 LXD VMs, as shown in `lxc list`.
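
The LXD error in this post names the expected volume as `cssc-it-service-1/virtual-machines/computational-nis-server.block`. The following is a minimal sketch, assuming the same naming pattern holds for the other VMs. It compares the names printed by `zfs list -H -o name -t all -r cssc-it-service-1` against the instance names from `lxc list`. Only `computational-nis-server` is a real instance name from the post, and any other names passed in are placeholders. The helper names are hypothetical.

```python
"""Report LXD VM block volumes missing from `zfs list -H -o name` output."""


def expected_vm_volume(pool: str, instance: str) -> str:
    # Naming assumed from the LXD error in this post:
    #   <pool>/virtual-machines/<instance>.block
    return f"{pool}/virtual-machines/{instance}.block"


def missing_vm_volumes(zfs_names: str, pool: str, instances: list[str]) -> list[str]:
    """Return the expected .block volumes that do not appear in zfs_names."""
    present = {line.strip() for line in zfs_names.splitlines() if line.strip()}
    return [
        expected_vm_volume(pool, name)
        for name in instances
        if expected_vm_volume(pool, name) not in present
    ]
```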

If I try to start them, I get this error:

Error: Failed to run: zfs get -H -p -o value volmode cssc-it-service-1/virtual-machines/computational-nis-server.block: exit status 1 (cannot open 'cssc-it-service-1/virtual-machines/computational-nis-server.block': dataset does not exist

while `lxc info` shows:

Error: open /var/snap/lxd/common/lxd/logs/computational-nis-server/qemu.log: no such file or directory

Could you please guide me on how to resolve this issue?

[Should I create a new thread, or is this one fine?]