I am currently doing this on a VirtualBox VM because I am trying to remember the setup. Anyway, I have a network behind a NAT router that doubles as a DHCP server and hands out IP addresses on the 192.168.4.X subnet.

I want to set up Incus so that instances get their IP addresses from the router at 192.168.4.1 (the gateway), but instead they keep getting addresses on a completely different subnet, 10.120.12.X or whatever.

How do I set it up so instances get addresses on the 192.168.4.X subnet in Incus?

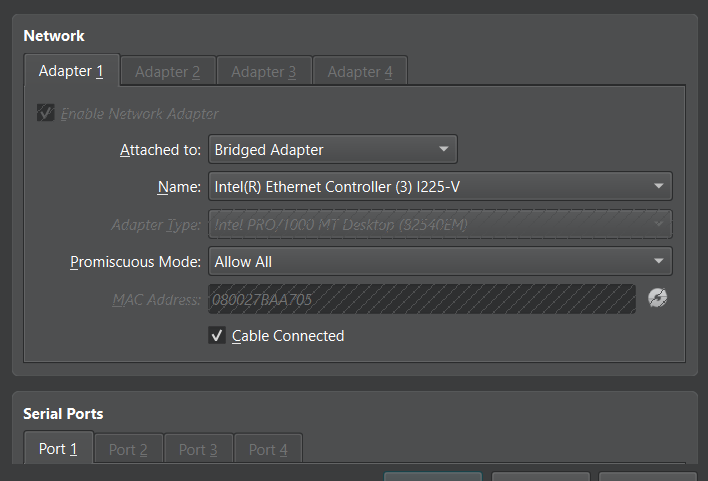

I have promiscuous mode set to allow all.

How did you attach the network to the instance? Did you use a bridge or a physical port?

I guess you are just using the Incus managed network, which is why instances always end up in the 10.x.x.x subnet.

If my guess is right, here is how you can let instances use your LAN subnet, 192.168.4.X. First create a bridge on the host; let’s call it br0. Before you point instances at br0, here is some background: by default an instance uses the “default” profile and gets a NIC called “eth0”. eth0 is the port that connects the instance to a network, and in the default case it connects to incusbr0. If you only need instances on the LAN, just override eth0 or add a new NIC to the instance. Now tell the instance to use br0:

incus config device add instance_name nic name=eth0 nictype=bridged parent=br0

Then restart the instance’s network or the instance itself, and it will connect to your LAN.

If that is not your situation, please post your complete network configuration.

Here is what I get when running:

incus config device add nginx nic name=enp0s3 nictype=bridged parent=incusbr0

Error: Invalid devices: Device validation failed for “nic”: Failed loading device “nic”: Unsupported device type

This is my setup:

Would you like to use clustering? (yes/no) [default=no]: no

Do you want to configure a new storage pool? (yes/no) [default=yes]: yes

Name of the new storage pool [default=default]: default

Name of the storage backend to use (dir, lvm, lvmcluster) [default=dir]: dir

Where should this storage pool store its data? [default=/var/lib/incus/storage-pools/default]: /var/lib/incus/storage-pools/default

Would you like to create a new local network bridge? (yes/no) [default=yes]: yes

What should the new bridge be called? [default=incusbr0]: incusbr0

What IPv4 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]: auto

What IPv6 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]: none

Would you like the server to be available over the network? (yes/no) [default=no]: yes

Address to bind to (not including port) [default=all]: all

Port to bind to [default=8443]: 8443

Would you like stale cached images to be updated automatically? (yes/no) [default=yes]: yes

Would you like a YAML “init” preseed to be printed? (yes/no) [default=no]: no

That is the wrong way to add an instance to a managed network. Here is the right way:

incus config device add nginx nic name=enp0s3 network=incusbr0

May I ask why you are adding the instance to incusbr0? It is already on incusbr0 by default.

Did I mislead you in my first post? If so, please tell me which part and I can explain it in detail.

It was just the default. I might be misunderstanding something, because I ran the command

incus config device add nginx nic name=enp0s3 network=incusbr0

Error: Invalid devices: Device validation failed for “nic”: Failed loading device “nic”: Unsupported device type

It failed. When I ran incus admin init, it gave me various options, including creating a new bridge. That bridge is automatically called incusbr0.

Are you saying I should create a second bridge in addition to this one created at the init command?

No. What you want is to connect your instances to the LAN, so you need to create an unmanaged bridge on the host (the VirtualBox VM) and connect the instances to that unmanaged bridge.

If you use Incus to create the bridge, it is a managed bridge: Incus runs DHCP on it with its own private subnet and, as set up by incus admin init here, NATs that traffic through the host, so instances never get addresses from your router.

And sorry, I wrote the wrong command. The device needs a name, so it should be:

incus config device add nginx enp0s3 nic name=enp0s3 network=incusbr0

I am still getting an error:

incus config device add nginx enp0s3 nic name=enp0s3 network=incusbr0

Error: Failed add validation for device “enp0s3”: Instance DNS name “nginx” conflict between “enp0s3” and “eth0” because both are connected to same network

I have the VM connection setup like this:

You can see the error. Why do you want to add two ports that both connect to incusbr0?

If you want to connect the nginx instance to the LAN, create a Linux bridge (br0) on your host (the VirtualBox VM), then add the nginx instance to it:

incus config device add nginx enp0s3 nic name=enp0s3 nictype=bridged parent=br0
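
The bridge-creation step itself is not spelled out in this thread. As a rough sketch, assuming the host has iproute2 and that its LAN-facing NIC is called enp0s3 (an assumption; check ip link on your host), it could look something like this. The run helper and the DRY_RUN, HOST_NIC and BRIDGE variables are made up for this example, so the commands are only printed until you opt in:

```shell
#!/bin/sh
# Sketch: build an unmanaged bridge on the host with iproute2.
# HOST_NIC is an ASSUMPTION (enp0s3); BRIDGE follows the thread's "br0".
HOST_NIC="${HOST_NIC:-enp0s3}"
BRIDGE="${BRIDGE:-br0}"

# Hypothetical helper: print each command unless DRY_RUN=0 is set.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

make_bridge() {
    # Create the bridge device.
    run ip link add name "$BRIDGE" type bridge
    # Enslave the LAN-facing NIC so the bridge sits on the router's network.
    run ip link set dev "$HOST_NIC" master "$BRIDGE"
    # Bring both interfaces up.
    run ip link set dev "$BRIDGE" up
    run ip link set dev "$HOST_NIC" up
}

make_bridge
```

Running it with DRY_RUN=0 as root would apply the changes. This is not persistent across reboots, and once the NIC belongs to br0 the host’s own IP address has to live on br0 instead, so expect the host to drop off the network until its DHCP client or network configuration is pointed at br0.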

Just passing…

You need to connect your Incus instance to a bridge on the host that is on the same network as the DHCP server. Not the Incus bridge incusbr0, but a real bridge that is connected to your network and that you have verified to be in working order. Typically one might name this bridge br0, but it could have any name.

Example:

$ incus init images:alpine/3.21 mytest

$ incus network attach br0 mytest eth0

$ incus start mytest

$ incus exec mytest ip address show dev eth0

Your instance has to, of course, be configured to use DHCP (the Alpine image I’ve used as an example does this by default). Your host has a bridge called br0 (adjust for your environment) and it gets attached as eth0 inside the instance (depending on the image you use, this name may also differ). The exec command will display the allocated IP if all is well.

That works for me on my system.
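
To make the “if all is well” check concrete for the original question, here is a small sketch that classifies an address as being on the 192.168.4.X LAN or not. The classify_ip function name is made up for this example, and the subnet is hard-coded from the first post:

```shell
#!/bin/sh
# Sketch: decide whether an IPv4 address (with or without a /prefix)
# is on the 192.168.4.X LAN from the original question.
# classify_ip is a hypothetical helper, not part of Incus.
classify_ip() {
    case "$1" in
        "")          echo "none" ;;     # no address allocated yet
        192.168.4.*) echo "lan" ;;      # lease came from the router
        *)           echo "not-lan" ;;  # e.g. the 10.x managed bridge
    esac
}

classify_ip "192.168.4.57"   # prints: lan
classify_ip "10.120.12.8"    # prints: not-lan
```

Feed it the address shown by the exec command above. “not-lan” with a 10.x address would suggest the instance is still attached to incusbr0 rather than br0.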
