(Please note: as a new user I can ONLY add one image to a post, so I’ve put all my images together in one large image at the bottom and referenced them by number where they belong.)

OK, so here’s the scenario:

I’m running Ubuntu Server 20.04. The primary drive, where my OS and the LXD/LXC snap package are installed, is almost full, so I’ve added a second 1 TB drive to the system.

I’ve split that drive into two partitions, each 500 GB in size.

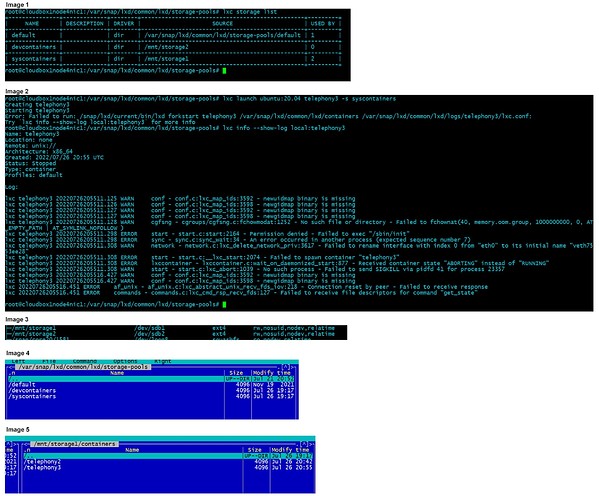

I’ve created two dir-based storage pools, one in each of these partitions, as follows:

(IMAGE 1 GOES HERE)
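For anyone who can’t make out the screenshot, here is a rough sketch of the kind of commands involved, written as a small Python helper that only builds and prints them. The pool names (`pool1`, `pool2`), the mount points (`/mnt/pool1`, `/mnt/pool2`), and the container name are placeholders I made up for illustration, not the exact values from my system. The `lxc storage create <name> dir source=<path>` and `lxc launch <image> <name> --storage <pool>` forms are the standard LXD CLI ones.

```python
def storage_create_cmd(pool_name, source_path):
    """Build the lxc command that creates a dir-backed storage pool."""
    return ["lxc", "storage", "create", pool_name, "dir", f"source={source_path}"]


def launch_cmd(image, container_name, pool_name):
    """Build the lxc command that launches a container in a given pool."""
    return ["lxc", "launch", image, container_name, "--storage", pool_name]


if __name__ == "__main__":
    # Hypothetical names and paths, for illustration only.
    commands = [
        storage_create_cmd("pool1", "/mnt/pool1"),
        storage_create_cmd("pool2", "/mnt/pool2"),
        launch_cmd("ubuntu:20.04", "test1", "pool1"),
    ]
    for cmd in commands:
        # Only print the commands; nothing is executed here.
        print(" ".join(cmd))
```

Printing rather than executing keeps the sketch safe to run on a machine without LXD installed.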

Creating a container in the default pool works, as it always has, with no problems.

With my newly created pools however, I keep hitting the same issue time and time again:

(IMAGE 2 GOES HERE)

I’ve pored over quite a few posts and cries for help here and on Ask Ubuntu, among other places. While none of them matched my problem exactly, they gave me a few ideas to try, namely that the partitions had “noexec” set on them. They did originally, but I’ve since removed that flag and exec is now allowed. Container creation still keeps failing.

(IMAGE 3 GOES HERE)
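In case it helps anyone double-check the same thing, the noexec check can be sketched in a few lines of Python that read `/proc/mounts` and look at the options field for a given mount point. This is only a minimal sketch: the mount points `/mnt/pool1` and `/mnt/pool2` are hypothetical stand-ins for wherever the two partitions are mounted, and the function names are my own.

```python
import os


def parse_mount_options(mounts_text, mount_point):
    """Return the set of mount options for mount_point, or None if it is not listed."""
    options = None
    for line in mounts_text.splitlines():
        fields = line.split()
        # /proc/mounts format: device mountpoint fstype options dump pass
        if len(fields) >= 4 and fields[1] == mount_point:
            # If a mount point appears more than once, the last entry wins.
            options = set(fields[3].split(","))
    return options


def has_noexec(mounts_text, mount_point):
    """True only if mount_point is listed and carries the noexec option."""
    options = parse_mount_options(mounts_text, mount_point)
    return options is not None and "noexec" in options


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        text = f.read()
    # Hypothetical mount points, replace with the real ones.
    for mp in ("/mnt/pool1", "/mnt/pool2"):
        print(mp, "noexec" if has_noexec(text, mp) else "exec allowed or not mounted")
```

Note that this reads the live mount table, so it reflects the options currently in effect rather than whatever is written in `/etc/fstab`.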

I also thought that maybe something was wrong with the way LXD had configured the storage pools, as it has created these folders in its snap folder:

(IMAGE 4 GOES HERE)

These appear to be empty (I suspect it may have wanted to make symlinks).

I have since ruled that out however, because when I look in the folders on the second disk I see that LXD has indeed created the root file systems for the containers:

(IMAGE 5 GOES HERE)

Which I suspect it would not have done, if it had been using these empty folders.

Right now, I’m totally out of ideas as to what I’m doing wrong.

(IMAGES)

so it was better than nothing the way I did it. (Windows Key + shift + S)