Howdy,

I would like to set up a Debian-based VM in Incus, install Incus within it, and use that instance as a virtual server running LXC containers. I have two ethernet ports on my machine and a wireless card, all of which I would like for the VM to ‘own.’ The machine runs 64-bit MX Linux 23.1 with KDE.

I am using this tutorial from @trevor, hoping to set up the ‘bridge network adapter’ as the parent of the physical eth0, while letting the VM have the other two physical devices. I then expect to pass those two into one of the nested LXC containers, running something like OpenWRT, also with the physical nictype.

I created a project (anvil), a storage pool (anvil), a profile (anvil), a network (anvilbr0), and an instance (kixikur). Here is an excerpt from that profile:

```yaml
...
eth0:
  name: eth0
  nictype: bridged
  parent: anvilbr0
  type: nic
eth1:
  name: eth1
  nictype: physical
  parent: eth1
  type: nic
root:
  path: /
  pool: anvil
  type: disk
wlan0:
  name: wlan0
  nictype: physical
  parent: wlan0
  type: nic
...
```

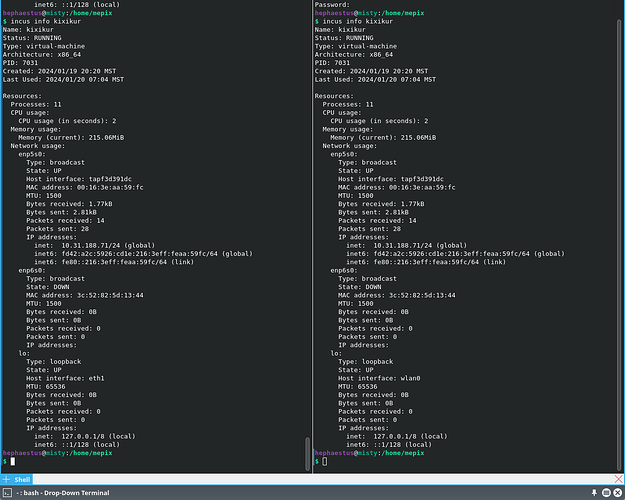

But the networks in the instance don’t look right to me.

In the instance, it looks like lo changes host interface every second or so. And where are the rest of the interfaces? From inside the instance:

```
root@kixikur:~# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: enp5s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:16:3e:aa:59:fc brd ff:ff:ff:ff:ff:ff
    inet 10.31.188.71/24 metric 1024 brd 10.31.188.255 scope global dynamic enp5s0
       valid_lft 3515sec preferred_lft 3515sec
    inet6 fd42:a2c:5926:cd1e:216:3eff:feaa:59fc/64 scope global mngtmpaddr noprefixroute
       valid_lft forever preferred_lft forever
    inet6 fe80::216:3eff:feaa:59fc/64 scope link
       valid_lft forever preferred_lft forever
3: enp6s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 3c:52:82:5d:13:44 brd ff:ff:ff:ff:ff:ff
```

From outside the instance:

```
$ ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether a0:36:9f:43:ac:f3 brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.10/24 brd 192.168.0.255 scope global dynamic noprefixroute eth0
       valid_lft 3061sec preferred_lft 3061sec
    inet6 fe80::6f64:ffa3:5d7f:d1da/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
5: anvilbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:16:3e:b1:4a:af brd ff:ff:ff:ff:ff:ff
    inet 10.31.188.1/24 scope global anvilbr0
       valid_lft forever preferred_lft forever
    inet6 fd42:a2c:5926:cd1e::1/64 scope global
       valid_lft forever preferred_lft forever
    inet6 fe80::216:3eff:feb1:4aaf/64 scope link
       valid_lft forever preferred_lft forever
6: incusbr-1001: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 00:16:3e:ff:ed:0b brd ff:ff:ff:ff:ff:ff
    inet 10.148.13.1/24 scope global incusbr-1001
       valid_lft forever preferred_lft forever
    inet6 fd42:d3c5:22b0:6cd4::1/64 scope global
       valid_lft forever preferred_lft forever
7: incusbr0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 00:16:3e:1a:c0:0e brd ff:ff:ff:ff:ff:ff
    inet 10.58.164.1/24 scope global incusbr0
       valid_lft forever preferred_lft forever
    inet6 fd42:acda:9d30:547::1/64 scope global
       valid_lft forever preferred_lft forever
10: tapf3d391dc: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master anvilbr0 state UP group default qlen 1000
    link/ether c2:77:60:c4:c1:0a brd ff:ff:ff:ff:ff:ff
```

If I understood the tutorial correctly, the instance should have only one interface, corresponding to anvilbr0, but it has two enpXsX interfaces instead. And the host still uses eth0, yet now also has the tap interface. I’m not familiar with tap interfaces, or probably with most networking concepts. Having read documentation and blogs, and watched presentations and tutorials, I thought this would make more sense to me than it does. I appreciate your help.

Best,

UpsetMOSFET

EDIT: I should also mention that `apt update` doesn’t work in the instance. It looks like that’s because it has no internet access; every repository stalls at zero percent. Something about networks.

EDIT2: I did say “something like OpenWRT.” I won’t be using OpenWRT itself, as @stgraber indicated in this post that it would be a poor fit for specifically what I want to do. I’m hoping DietPi with Pi-hole+Unbound will do the trick for me, maybe with Shorewall. Open to suggestions there, too.

> Yeah, debugging with @mcondarelli has shown that OpenWRT does actively rename interfaces both on startup and shutdown, so that’s definitely a bit of a problem.

EDIT3: It occurs to me that this may require the user running Incus to have superuser privileges, not just membership in the `incus-admin` group. What effect does this have?