Hi.

According to the linked page (Linux Containers - LXD - Has been moved to Canonical), it seems that a storage pool can also be created on cephfs.

If a storage pool can be created with cephfs rather than RBD, please tell me how to do it.

Thank you

You need to set up your Ceph cluster for cephfs and create a filesystem.

Ceph is still in the early days of supporting multiple cephfs on one cluster, so most will just use the default fs and set LXD to use a sub-path of that.

lxc storage create my-cephfs cephfs source=name-of-fs/some-path works fine once you have a suitable Ceph deployment (an MDS set up, the two pools for data and metadata created, and a fs set up using them both).

You’ll also want to opt into the snapshot feature of cephfs if you intend to use LXD custom storage volume snapshots.
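The steps above could be sketched roughly as follows. This is a dry-run sketch rather than a definitive procedure: the pool names, the PG count of 32 and the filesystem name lxd-cephfs are assumptions for illustration, and it presumes an MDS is already running. By default it only prints the commands so they can be reviewed; setting DRY_RUN=0 would execute them.

```shell
#!/bin/sh
# Sketch only: all names and the PG count below are assumptions.
FS_NAME="${FS_NAME:-lxd-cephfs}"
DATA_POOL="${DATA_POOL:-lxd-cephfs_data}"
META_POOL="${META_POOL:-lxd-cephfs_metadata}"
PG_NUM="${PG_NUM:-32}"
LXD_POOL="${LXD_POOL:-my-cephfs}"
SUB_PATH="${SUB_PATH:-some-path}"

# Print each command by default; run it only when DRY_RUN=0.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

setup_cephfs_pool() {
    # cephfs needs one data pool and one metadata pool.
    run ceph osd pool create "$DATA_POOL" "$PG_NUM"
    run ceph osd pool create "$META_POOL" "$PG_NUM"
    # Create the filesystem (metadata pool first, then data pool).
    run ceph fs new "$FS_NAME" "$META_POOL" "$DATA_POOL"
    # Opt into cephfs snapshots for LXD custom volume snapshots.
    run ceph fs set "$FS_NAME" allow_new_snaps true
    # Point LXD at a sub-path of that filesystem.
    run lxc storage create "$LXD_POOL" cephfs "source=$FS_NAME/$SUB_PATH"
}

setup_cephfs_pool
```

Running it unchanged prints five commands; the last one matches the lxc storage create form shown above.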

Thank you @stgraber

In my test Ceph cluster, an MDS is configured and cephfs can be used.

However, when I create a storage pool, cephfs is not available as a storage backend driver.

The LXD version I am using is 4.1.

Does LXD need any extra configuration to use cephfs as a storage backend driver?

Can you show the output of lxc version, just to make sure everything is as expected?

I don’t see how ceph could be marked as available when cephfs isn’t.

Dear @stgraber

When I installed lxd using snap, it said 4.1 was installed, so I assumed the lxd and lxc versions would be 4.1. I am sorry for saying I was using 4.1 without checking properly.

See the picture below.

The Ceph release is Nautilus.

Please let me know how to upgrade the lxc and lxd versions to the latest.

I switched to the latest/stable channel with snap switch and ran snap refresh, but the lxd and lxc versions were not upgraded.

Thank you

I tried upgrading the lxd and lxc versions but could not, so I created an Ubuntu 20.04 VM instead.

An error appears as shown below. What should I do?

Please tell me how to upgrade lxd and lxc version. I want to try it once.

For upgrading, run the lxd.migrate command.

For the other failure, it’s saying you don’t have a ceph fs instance called lxd-cephfs.

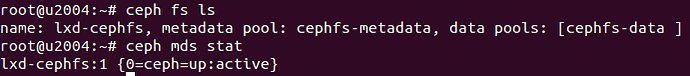

ceph fs ls would probably confirm that.
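As a small sketch of that check, the helper below looks for a filesystem name in the output of ceph fs ls. It assumes the plain-text output format of one line per filesystem starting with "name: <fs>,"; the function name fs_listed is made up for this example.

```shell
#!/bin/sh
# Return 0 if a filesystem named $1 appears in `ceph fs ls` output on stdin.
# Assumed line format: "name: <fs>, metadata pool: <pool>, data pools: [<pool> ]"
fs_listed() {
    awk -v want="$1" -F'[:,] *' '
        $1 == "name" && $2 == want { found = 1 }
        END { exit !found }
    '
}

# Usage on a node with Ceph admin access:
#   ceph fs ls | fs_listed lxd-cephfs && echo "fs exists" || echo "fs missing"
```

If the name is not listed, the filesystem either has not been created yet or has a different name than the one given in source=.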

Thank you @stgraber

I used the lxd.migrate command during the day.

However, there was no result for over an hour, so I ended up aborting it partway through.

Does the lxd.migrate command take a long time?

When I run lxd.migrate in an LXD cluster environment, should I start on the next node only after one node has completed?

When I ran it on two nodes at the same time, one node did not proceed and showed the message “Cannot connect to the server”.

You said “don’t have a ceph fs instance called lxd-cephfs.” I don’t know what this means.

In a cluster environment you need to run lxd.migrate on all nodes at the same time.

It’s a bit of a stressful process but I’ve done it a few times myself and things worked.

You can’t do one node at a time as the node will not be able to join back the cluster until all nodes are on the same version… The only way you could do one node at a time is if the snap version was identical to the deb version, which unfortunately it isn’t.

Thank you for the answer about lxd.migrate, @stgraber.

You haven’t told me how to fix the cephfs storage yet.

The following error occurred when creating cephfs storage.

In source=name/path, what do name and path mean respectively?

I don’t understand the error message. “It is cephfs, but cephfs do not exist.”

Please tell me how to solve this problem.

What does the following command show you?

ceph --name client.admin --cluster ceph fs get lxd-cephfs
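On the source=name/path question: going by the earlier example (source=name-of-fs/some-path), the part before the first slash is the name of the cephfs filesystem and the rest is a sub-path inside it. The sketch below only illustrates that split; the helper names are made up, and treating a missing path as the filesystem root is an assumption about LXD's behaviour.

```shell
#!/bin/sh
# Everything before the first "/" is taken as the cephfs filesystem name.
source_fs_name() {
    printf '%s\n' "${1%%/*}"
}

# Everything after the first "/" is taken as the sub-path.
# Assumption: with no "/" at all, the root of the filesystem is used.
source_fs_path() {
    case "$1" in
        */*) printf '%s\n' "${1#*/}" ;;
        *)   printf '/\n' ;;
    esac
}

# Example:
#   source_fs_name lxd-cephfs/some-path
#   source_fs_path lxd-cephfs/some-path
```

So with source=lxd-cephfs/some-path, a filesystem called lxd-cephfs has to exist in the Ceph cluster, which is what the fs get command checks.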

Thank you @stgraber

That’s odd, that’s the command that LXD reports as having failed…

Can you try reloading LXD and see if that unblocks things somehow?

systemctl reload snap.lxd.daemon

Nope, all you need is the right config in /etc/ceph.

I usually still install ceph-common on all my nodes just so I can easily hit ceph status on any of them to see if things are working, but LXD doesn’t need that.
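A quick way to sanity-check that config on each node might look like the sketch below. It assumes the usual file naming, <cluster>.conf and <cluster>.client.<user>.keyring, with the cluster name ceph and the user admin as in the command above; the function name is made up for this example.

```shell
#!/bin/sh
# Check that the Ceph client config and keyring are readable.
# Arguments (all optional): directory, cluster name, client user.
check_ceph_client_config() {
    ccc_dir="${1:-/etc/ceph}"
    ccc_cluster="${2:-ceph}"
    ccc_user="${3:-admin}"
    ccc_rc=0
    for ccc_file in \
        "$ccc_dir/$ccc_cluster.conf" \
        "$ccc_dir/$ccc_cluster.client.$ccc_user.keyring"
    do
        if [ -r "$ccc_file" ]; then
            echo "ok: $ccc_file"
        else
            echo "missing: $ccc_file"
            ccc_rc=1
        fi
    done
    return "$ccc_rc"
}

# Usage: check_ceph_client_config            (checks /etc/ceph)
#        check_ceph_client_config /etc/ceph ceph admin
```

This only confirms the files are present and readable; it does not verify that the monitor addresses or the key are correct.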

My Ceph clients are Ubuntu 18.04 and Ubuntu 20.04. On 18.04 the client packages were installed with ceph-ansible, and on 20.04 I installed ceph-common and ceph-fuse.

I get the same error on both clients.

If you tell me which version of Ceph you are using, I will install the same version and test with it.

root@ceph:/etc/ceph# cat ceph.conf

— Please do not change this file directly since it is managed by Ansible and will be overwritten —

[global]

cluster network = 10.61.87.0/24

fsid = cb33c0ea-adc3-4224-9319-e25b59ea03b9

mon host = [v2:10.61.87.10:3300,v1:10.61.87.10:6789]

mon initial members = ceph

osd pool default crush rule = -1

osd pool default min size = 1

osd pool default size = 1

public network = 10.61.87.0/24

[osd]

osd memory target = 4294967296

root@ceph:/etc/ceph# cat ceph.client.admin.keyring

[client.admin]

key = AQBQschegu3FGRAA7B8/blobgdB6KSCh47L8Tg==

caps mds = "allow *"

caps mgr = "allow *"

caps mon = "allow *"

caps osd = "allow *"

The content of client.admin is the same as ceph.client.admin.keyring.

Thank you for continuing to follow up on this issue.

The Ceph version does not matter, because the LXD snap bundles its own copy of Ceph.

The snap is based on Ubuntu 18.04, so the bundled version of Ceph is 12.2.12.