I think the default bridge LXD creates to connect VMs, lxdbr0, has a speed of 1000Mb/s. How can I increase it to 10Gb/s? Since I am not connecting to any physical network adapter, the speed should be much higher, considering the packets are confined to a single server.

The speed is just an arbitrary value reported by the kernel; it doesn’t actually matter for communications.

Actually, here the kernel reports the speed as UNKNOWN (per ethtool), which seems like the better approach.

Thanks for the reply. I was asking because I saw a speed of 190MB/s on a Windows virtual machine.

After some experimenting, I found I can reach 300MB/s between two Linux VMs. That is over 1Gb/s, but still far from 10Gb/s. I suspect it is a memory issue: I do not have enough memory allocated to the VMs.
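
As a quick sanity check on the units (file-transfer tools usually report megabytes per second, while link speeds are quoted in bits per second), here is a minimal Python sketch of the conversion. It assumes decimal units (1 MB = 10^6 bytes, 1 Gb = 10^9 bits), and the function name is only illustrative.

```python
def mbytes_to_gbits(mbytes_per_s: float) -> float:
    """Convert MB/s to Gb/s using decimal units (assumption: 1 MB = 10**6 bytes)."""
    return mbytes_per_s * 8 / 1000


# The two transfer rates mentioned in this thread.
for rate in (190, 300):
    print(f"{rate} MB/s = {mbytes_to_gbits(rate):.2f} Gb/s")
```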

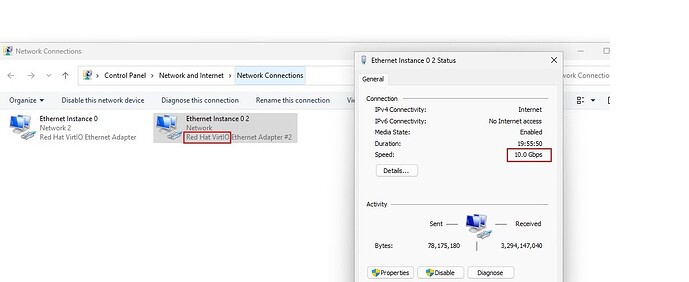

I also found this link: How to increase the network bandwidth - Canonical LXD documentation. After applying that configuration, the Windows VM network adapter changes to 10Gb/s.

I will report back when I have added more memory.

More memory may help, but giving the VM more CPUs (the default is just 1) is what will bring the biggest improvement, as each CPU given to a VM comes with 1 transmit and 1 receive queue for the network devices.
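
A minimal sketch of how the CPU count could be raised, written in Python so the commands can be inspected before anything is run. `limits.cpu` is the LXD instance key for the CPU count; the instance name `test`, the helper names, the default dry-run behaviour, and the restart step (in case a running VM does not pick up the change live) are assumptions for illustration.

```python
import subprocess


def cpu_limit_commands(instance: str, cpus: int) -> list[list[str]]:
    """Build the lxc commands that set limits.cpu and restart the instance."""
    if cpus < 1:
        raise ValueError("cpus must be at least 1")
    return [
        ["lxc", "config", "set", instance, "limits.cpu", str(cpus)],
        # Assumption: restart so the guest definitely sees the new CPU count.
        ["lxc", "restart", instance],
    ]


def apply(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print the commands, or run them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


apply(cpu_limit_commands("test", 8))  # dry run: only prints the two commands
```

Calling `apply(..., dry_run=False)` would actually execute the commands on the host.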

On modern servers with 8 CPUs, I can usually get over 40Gbps to a VM. If you need something faster than that, you usually end up having to use SR-IOV or similar to get hardware acceleration.

Thanks for the feedback.

> On modern servers with 8 CPUs, I can usually get over 40Gbps to a VM

Which tool did you use to measure this speed? iperf3, ftp, sftp?

I tried different numbers of CPUs; the speed does not change much when tested with iperf3:

    danny@home:~$ lxc config show -e test
    architecture: x86_64
    config:
      image.architecture: amd64
      image.description: ubuntu 22.04 LTS amd64 (release) (20231211)
      image.label: release
      image.os: ubuntu
      image.release: jammy
      image.serial: "20231211"
      image.type: disk-kvm.img
      image.version: "22.04"
      limits.cpu: "2"
      limits.memory: 16GiB

| Number of CPUs in the VM | iperf3 throughput |
|---|---|
| 2 | 13.7Gb/s |
| 4 | 16.4Gb/s |
| 8 | 15.6Gb/s |
| 16 | 17.0Gb/s |
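
To put those numbers in proportion, here is a small Python sketch (values copied from the table; variable names are illustrative) that expresses each result relative to the 2-CPU run and per CPU:

```python
# iperf3 throughput in Gb/s, keyed by the number of CPUs given to the VM.
results = {2: 13.7, 4: 16.4, 8: 15.6, 16: 17.0}

baseline = results[2]
speedups = {cpus: gbps / baseline for cpus, gbps in results.items()}

for cpus, gbps in results.items():
    print(f"{cpus:>2} CPUs: {gbps:4.1f} Gb/s, "
          f"{speedups[cpus]:.2f}x the 2-CPU run, {gbps / cpus:.2f} Gb/s per CPU")
```

Going from 2 to 16 CPUs raises throughput by roughly a quarter, so in this test the CPU count does not look like the main limit.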

When testing with sftp or ftp, I can only get around 300MB/s (2.4Gb/s), which is far from the iperf3 performance. When checking the memory, it is completely consumed:

    top - 09:23:50 up 1 day, 20:10,  9 users,  load average: 7.98, 6.02, 5.48
    Tasks: 510 total,   4 running, 506 sleeping,   0 stopped,   0 zombie
    %Cpu(s): 39.8 us, 18.6 sy,  0.0 ni, 40.8 id,  0.8 wa,  0.0 hi,  0.1 si,  0.0 st
    MiB Mem :  32031.0 total,    235.2 free,   3857.6 used,  27938.1 buff/cache
    MiB Swap:   2048.0 total,   2010.5 free,     37.5 used.  10992.7 avail Mem

I think this is an issue with sftp or ftp.

    root@test02:~# iperf3 -c 10.22.45.4
    Connecting to host 10.22.45.4, port 5201
    [  5] local 10.22.45.3 port 47770 connected to 10.22.45.4 port 5201
    [ ID] Interval           Transfer     Bitrate         Retr  Cwnd
    [  5]   0.00-1.00   sec  2.37 GBytes  20.4 Gbits/sec    0   3.01 MBytes
    [  5]   1.00-2.00   sec  2.69 GBytes  23.1 Gbits/sec    0   3.01 MBytes
    [  5]   2.00-3.00   sec  3.08 GBytes  26.4 Gbits/sec    0   3.01 MBytes
    [  5]   3.00-4.00   sec  3.01 GBytes  25.9 Gbits/sec    0   3.01 MBytes
    [  5]   4.00-5.00   sec  3.04 GBytes  26.1 Gbits/sec    0   3.01 MBytes
    [  5]   5.00-6.00   sec  2.54 GBytes  21.8 Gbits/sec  135   3.01 MBytes
    [  5]   6.00-7.00   sec  2.62 GBytes  22.5 Gbits/sec    0   3.01 MBytes
    [  5]   7.00-8.00   sec  2.54 GBytes  21.9 Gbits/sec    0   3.01 MBytes
    [  5]   8.00-9.00   sec  2.50 GBytes  21.4 Gbits/sec  181   3.01 MBytes
    [  5]   9.00-10.00  sec  2.62 GBytes  22.5 Gbits/sec    0   3.01 MBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate         Retr
    [  5]   0.00-10.00  sec  27.0 GBytes  23.2 Gbits/sec  316             sender
    [  5]   0.00-10.04  sec  27.0 GBytes  23.1 Gbits/sec                  receiver

    iperf Done.
    root@test02:~#
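
For comparing runs, iperf3 can also emit JSON (`iperf3 -c <server> -J`). A minimal Python sketch of pulling the summary out of that output follows. It assumes the usual layout of a TCP result (`end.sum_sent` and `end.sum_received`); the function name is illustrative, and the sample document is a hand-trimmed stand-in built from the summary lines above, not captured output.

```python
import json


def summarize_iperf3(json_text: str) -> dict:
    """Extract sender/receiver throughput (Gb/s) and retransmits from iperf3 -J output."""
    end = json.loads(json_text)["end"]
    return {
        "sent_gbps": end["sum_sent"]["bits_per_second"] / 1e9,
        "received_gbps": end["sum_received"]["bits_per_second"] / 1e9,
        # Retransmits are only reported for TCP tests, so tolerate a missing key.
        "retransmits": end["sum_sent"].get("retransmits"),
    }


# Hand-written sample mirroring the 23.2/23.1 Gbits/sec summary, not real output.
sample = json.dumps({
    "end": {
        "sum_sent": {"bits_per_second": 23.2e9, "retransmits": 316},
        "sum_received": {"bits_per_second": 23.1e9},
    }
})
print(summarize_iperf3(sample))
```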

To get much higher than this, I’d probably need to switch over to using jumbo frames, as the test above is on good old 1500 MTU.
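
The reasoning behind jumbo frames can be sketched with some rough arithmetic: at a fixed throughput, a larger MTU means fewer packets for the network stack to handle. The Python snippet below assumes a 9000-byte MTU as the jumbo size (a common choice, not something stated in this thread) and ignores header overhead and segmentation offloads, so treat the figures as an approximation.

```python
def packets_per_second(gbits_per_s: float, mtu_bytes: int) -> float:
    """Approximate packet rate when every packet carries a full MTU."""
    return gbits_per_s * 1e9 / 8 / mtu_bytes


throughput = 23.2  # Gb/s, the sender rate from the iperf3 run above
for mtu in (1500, 9000):  # 9000 is an assumed jumbo-frame MTU
    print(f"MTU {mtu}: about {packets_per_second(throughput, mtu):,.0f} packets/s")
```

Under these assumptions, the same throughput needs about six times fewer packets at the larger MTU.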

Stéphane, did you need to adjust “txqueuelen” (as per the Ubuntu article) on the host and containers to get these speeds?

Nope, those were stock speeds with no changes on host or guest.