My hobby mail server on a Hetzner VPS was getting old, so I decided to dive into LXD as a newbie and move it to my root server. I'm posting this in the hope of cutting down someone else's research and trial-and-error time.

You might be relieved to know that we do not need to touch the Netplan configs.

Suggestions are welcome. My next step is to actually install a mail server in a container and see it run for a while without problems.

Note that with macvlan you will not be able to SSH or ping from the host to the container, or from the container to the host.

LXD 3.9 Hetzner: Single Public IP Setup with Macvlan on Ubuntu 18.04

$ sudo lxd init

Would you like to use LXD clustering? (yes/no) [default=no]:

Do you want to configure a new storage pool? (yes/no) [default=yes]:

Name of the new storage pool [default=default]:

Name of the storage backend to use (btrfs, ceph, dir, lvm, zfs) [default=zfs]:

Create a new ZFS pool? (yes/no) [default=yes]:

Would you like to use an existing block device? (yes/no) [default=no]:

Size in GB of the new loop device (1GB minimum) [default=100GB]:

Would you like to connect to a MAAS server? (yes/no) [default=no]:

Would you like to create a new local network bridge? (yes/no) [default=yes]: no

Would you like to configure LXD to use an existing bridge or host interface? (yes/no) [default=no]:

Would you like LXD to be available over the network? (yes/no) [default=no]:

Would you like stale cached images to be updated automatically? (yes/no) [default=yes]: no

Would you like a YAML "lxd init" preseed to be printed? (yes/no) [default=no]:

Follow Simos's macvlan how-to: https://blog.simos.info/configuring-public-ip-addresses-on-cloud-servers-for-lxd-containers/

Replace the parent value (enp4s0 in the commands below) with your host's network interface.
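If you are not sure which interface name to use for parent, one way to find it is to look at which device carries the host's default route. The following is only a sketch: `parse_default_iface` is a helper name made up for this post, and it assumes the host has a single IPv4 default route and the `ip` tool from iproute2.

```shell
#!/bin/sh
# Hypothetical helper (not part of LXD): read `ip route` output on stdin
# and print the device name of the first default route.
parse_default_iface() {
    awk '$1 == "default" {
        for (i = 1; i < NF; i++)
            if ($i == "dev") { print $(i + 1); exit }
    }'
}

# On the host; skipped when iproute2 is not installed.
if command -v ip >/dev/null 2>&1; then
    ip -4 route show default | parse_default_iface
fi
```

The name it prints is what I would put after parent= when adding the nic device to the profile.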

$ lxc profile create macvlan

$ lxc profile device add macvlan eth0 nic nictype=macvlan parent=enp4s0

$ lxc launch --profile default --profile macvlan ubuntu-minimal:18.04 c1

Creating c1

Starting c1

$ lxc stop c1

Obtain the MAC address via Hetzner Robot and replace the placeholder MAC below with it.

$ lxc config device override c1 eth0 hwaddr=00:AA:BB:CC:DD:FF
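A mistyped MAC is easy to miss, and it would likely leave the container without connectivity, so a quick format check on the value copied from Robot may be worth it. This is just a sketch: `is_mac` is a made-up helper name, and it only checks the colon-separated hex format, not whether Hetzner actually assigned that address.

```shell
#!/bin/sh
# Hypothetical helper: succeed if $1 looks like aa:bb:cc:dd:ee:ff
# (six colon-separated pairs of hex digits).
is_mac() {
    printf '%s\n' "$1" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
}

MAC="00:AA:BB:CC:DD:FF"   # placeholder from this post, use your own
if is_mac "$MAC"; then
    echo "MAC format looks fine: $MAC"
else
    echo "MAC format looks wrong: $MAC" >&2
fi
```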

$ lxc start c1

$ lxc exec c1 -- bash

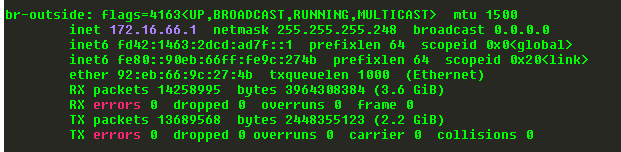

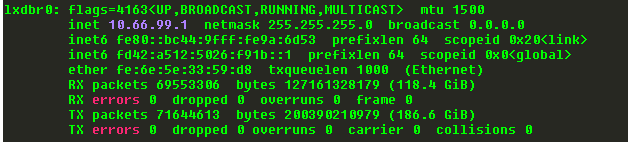

$ ifconfig
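If `ifconfig` is missing in the container (it comes from the net-tools package, which a minimal image may not include), the same check can be done from the host with `ip`. A sketch under those assumptions: `parse_ipv4` is a made-up helper name, and c1 and eth0 are the container and device names used in this post.

```shell
#!/bin/sh
# Hypothetical helper: read `ip -o -4 addr show` output on stdin and
# print each IPv4 address without its prefix length.
parse_ipv4() {
    awk '$3 == "inet" { sub(/\/.*/, "", $4); print $4 }'
}

# From the host; skipped when the lxc client is not installed.
if command -v lxc >/dev/null 2>&1; then
    lxc exec c1 -- ip -o -4 addr show dev eth0 | parse_ipv4
fi
```

If this prints the public IP you expect for the container, the interface has picked up its address.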