Hi,

To introduce myself: I started using Incus only a few days ago, or rather a few hours ago.

I’ve been doing network administration for about twenty years as a hobby, using LXC and QEMU for VMs. I work primarily as a web developer.

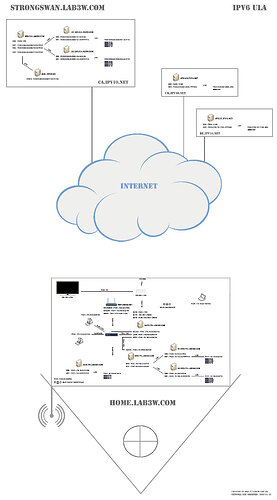

I followed Stéphane Robert’s documentation on installing Incus.


I’m trying to set up a very simple bridge:

root@hst-fr:~ # cat /etc/os-release

PRETTY_NAME="Debian GNU/Linux 13 (trixie)"

NAME="Debian GNU/Linux"

VERSION_ID="13"

VERSION="13 (trixie)"

VERSION_CODENAME=trixie

DEBIAN_VERSION_FULL=13.1

ID=debian

HOME_URL="https://www.debian.org/"

SUPPORT_URL="https://www.debian.org/support"

BUG_REPORT_URL="https://bugs.debian.org/"

root@hst-fr:~ # incus --version

6.18

root@hst-fr:~ # ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 147.79.115.130 netmask 255.255.255.0 broadcast 147.79.115.255

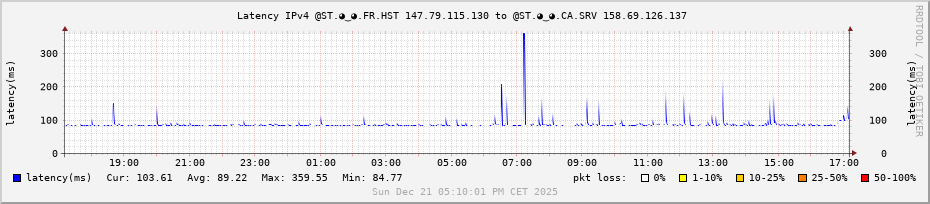

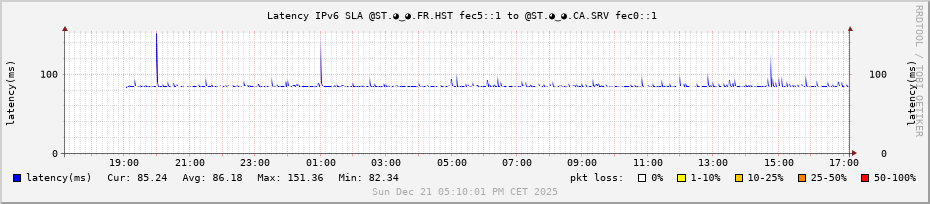

inet6 fec5::1 prefixlen 120 scopeid 0x40<site>

inet6 fe80::a6e8:d4ff:feb5:9455 prefixlen 64 scopeid 0x20<link>

inet6 2a02:4780:28:5295::1 prefixlen 48 scopeid 0x0<global>

ether a4:e8:d4:b5:94:55 txqueuelen 1000 (Ethernet)

RX packets 4493781 bytes 1120255785 (1.0 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6975455 bytes 1205368093 (1.1 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

incusbr0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.175.0.254 netmask 255.255.255.0 broadcast 10.175.0.255

inet6 fc00:4780:28:5295::fd prefixlen 112 scopeid 0x0<global>

inet6 fe80::1266:6aff:fe56:2685 prefixlen 64 scopeid 0x20<link>

ether 10:66:6a:56:26:85 txqueuelen 1000 (Ethernet)

RX packets 10247 bytes 626020 (611.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 6241 bytes 35618616 (33.9 MiB)

TX errors 0 dropped 11 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 743 bytes 284007 (277.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 743 bytes 284007 (277.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

My configuration:

```
root@hst-fr:~ # incus profile list
+---------+-----------------------+---------+
|  NAME   |      DESCRIPTION      | USED BY |
+---------+-----------------------+---------+
| default | Default Incus profile | 2       |
+---------+-----------------------+---------+
root@hst-fr:~ # incus profile show default
config: {}
description: Default Incus profile
devices:
  eth0:
    name: eth0
    network: incusbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default
used_by:
- /1.0/instances/bdc
- /1.0/instances/web
project: default
```

My networks as seen by Incus:

```
root@hst-fr:~ # incus network list
+----------+----------+---------+-----------------+---------------------------+-------------+---------+---------+
|   NAME   |   TYPE   | MANAGED |      IPV4       |           IPV6            | DESCRIPTION | USED BY |  STATE  |
+----------+----------+---------+-----------------+---------------------------+-------------+---------+---------+
| eth0     | physical | NO      |                 |                           |             | 0       |         |
+----------+----------+---------+-----------------+---------------------------+-------------+---------+---------+
| incusbr0 | bridge   | YES     | 10.175.0.254/24 | fc00:4780:28:5295::fd/112 |             | 3       | CREATED |
+----------+----------+---------+-----------------+---------------------------+-------------+---------+---------+
| lo       | loopback | NO      |                 |                           |             | 0       |         |
+----------+----------+---------+-----------------+---------------------------+-------------+---------+---------+
```

I configured the bridge’s IPv4 and IPv6 addresses with the following CLI command:

```
root@hst-fr:~ # incus network set incusbr0 ipv4.address=10.175.0.254/24 ipv6.address=fc00:4780:28:5295::fd/112
```

I also found out how to change the dnsmasq DHCP range (“ipv4.dhcp.ranges”) with this Incus CLI command:

```
root@hst-fr:~ # incus network set incusbr0 ipv4.dhcp.ranges="10.175.0.100-10.175.0.110"
```

I noticed that the command above does not modify any configuration file; I assume this is intentional.

So I looked at the network configuration with “incus network edit”:

```
root@hst-fr:~ # incus network edit incusbr0
### This is a YAML representation of the network.
### Any line starting with a '# will be ignored.
###
### A network consists of a set of configuration items.
###
### An example would look like:
### name: mybr0
### config:
###   ipv4.address: 10.62.42.1/24
###   ipv4.nat: true
###   ipv6.address: fd00:56ad:9f7a:9800::1/64
###   ipv6.nat: true
### managed: true
### type: bridge
###
### Note that only the configuration can be changed.
config:
  dns.nameservers: 2606:4700:4700::1111, 1.1.1.1, 2001:4860:4860::8888, 8.8.8.8
  ipv4.address: 10.175.0.254/24
  ipv4.dhcp: "true"
  ipv4.dhcp.gateway: 10.175.0.254
  ipv4.dhcp.ranges: 10.175.0.111-10.175.0.115
  ipv4.dhcp.routes: 0.0.0.0/0,10.175.0.254
  ipv4.firewall: "false"
  ipv4.nat: "true"
  ipv4.routing: "true"
  ipv6.address: fc00:4780:28:5295::fd/112
  ipv6.dhcp: "false"
  ipv6.firewall: "false"
  ipv6.nat: "true"
  ipv6.routing: "true"
description: ""
name: incusbr0
type: bridge
used_by:
- /1.0/profiles/default
- /1.0/instances/web
- /1.0/instances/bdc
managed: true
status: Created
locations:
- none
project: default
```
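Incus keeps network settings in its own database rather than in a file under /etc, so “incus network set” has no file to rewrite, and “incus network edit” simply renders the stored configuration as YAML. A minimal sketch for reading the keys back, assuming the network name incusbr0 used above:

```sh
# Read individual keys of the managed bridge back from the Incus database
incus network get incusbr0 ipv4.dhcp.ranges
incus network get incusbr0 ipv6.address

# Read-only view of the full stored configuration (same content as "incus network edit")
incus network show incusbr0

# Incus regenerates the dnsmasq command line from these keys; it should reflect
# the current range (e.g. --dhcp-range 10.175.0.111,10.175.0.115,1h)
pgrep -a dnsmasq
```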

I have 2 containers:

```
root@hst-fr:~ # incus list
+------+---------+---------------------+------+-----------+-----------+
| NAME |  STATE  |        IPV4         | IPV6 |   TYPE    | SNAPSHOTS |
+------+---------+---------------------+------+-----------+-----------+
| bdc  | RUNNING | 10.175.0.114 (eth0) |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
| web  | RUNNING | 10.175.0.115 (eth0) |      | CONTAINER | 0         |
+------+---------+---------------------+------+-----------+-----------+
```

On the other hand, here is what the following command shows:

```
root@hst-fr:~ # incus network list-leases incusbr0
+-------------+-------------------+---------------------------------------+---------+
|  HOSTNAME   |    MAC ADDRESS    |              IP ADDRESS               |  TYPE   |
+-------------+-------------------+---------------------------------------+---------+
| bdc         | 10:66:6a:b1:0a:21 | 10.175.0.114                          | DYNAMIC |
+-------------+-------------------+---------------------------------------+---------+
| bdc         | 10:66:6a:b1:0a:21 | fc00:4780:28:5295:1266:6aff:feb1:a21  | DYNAMIC |
+-------------+-------------------+---------------------------------------+---------+
| incusbr0.gw |                   | 10.175.0.254                          | GATEWAY |
+-------------+-------------------+---------------------------------------+---------+
| incusbr0.gw |                   | fc00:4780:28:5295::fd                 | GATEWAY |
+-------------+-------------------+---------------------------------------+---------+
| web         | 10:66:6a:80:dd:71 | 10.175.0.115                          | DYNAMIC |
+-------------+-------------------+---------------------------------------+---------+
| web         | 10:66:6a:80:dd:71 | fc00:4780:28:5295:1266:6aff:fe80:dd71 | DYNAMIC |
+-------------+-------------------+---------------------------------------+---------+
```
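The DYNAMIC IPv6 entries in this table look like modified EUI-64 addresses (RFC 4291, appendix A): the MAC address is split in half, ff:fe is inserted in the middle, the universal/local bit of the first octet is flipped, and the result is appended to the /64 prefix of the bridge address (fc00:4780:28:5295::/64 here). The same identifier appears in the container's link-local address fe80::1266:6aff:feb1:a21. The sketch below reproduces that computation in plain sh; the helper names eui64_iid and eui64_addr are hypothetical, not Incus commands, and zero groups are not compressed to "::".

```sh
#!/bin/sh
# Sketch: modified EUI-64 interface identifier from a MAC address (RFC 4291, appendix A).
# Helper names are illustrative only.

# eui64_iid MAC -> prints the 64-bit interface identifier as four hex groups
eui64_iid() {
  _eui64_ifs=$IFS
  IFS=:
  set -- $1            # split "10:66:6a:b1:0a:21" into $1 .. $6
  IFS=$_eui64_ifs
  # Flip the universal/local bit (0x02) of the first octet, insert ff:fe
  # between the 3rd and 4th octets; %x prints hex without leading zeros
  printf '%x:%x:%x:%x\n' \
    $(( ((0x$1 ^ 0x02) << 8) | 0x$2 )) \
    $(( (0x$3 << 8) | 0xff )) \
    $(( (0xfe << 8) | 0x$4 )) \
    $(( (0x$5 << 8) | 0x$6 ))
}

# eui64_addr PREFIX MAC -> PREFIX is the first four groups of the /64
eui64_addr() {
  printf '%s:%s\n' "$1" "$(eui64_iid "$2")"
}

eui64_addr fc00:4780:28:5295 10:66:6a:b1:0a:21   # bdc -> fc00:4780:28:5295:1266:6aff:feb1:a21
eui64_addr fc00:4780:28:5295 10:66:6a:80:dd:71   # web -> fc00:4780:28:5295:1266:6aff:fe80:dd71
```

Both results match the lease table above, which suggests Incus computes these entries from the MAC address rather than reading them from an actual lease.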

- On IPv6 (dynamic type, which I did not request), I see that Incus has taken the /64 block of my bridge’s IPv6 address to build EUI-64 addresses (derived from the MAC address), the same way it is done for LLUs (Link-Local Unicast, the fe80:: addresses).
- In the containers, I can ping neither the bridge (IPv4 address 10.175.0.254) nor 1.1.1.1, even though the link is in state UP.
- In the containers, I do not see these IPv6 addresses; I only see the usual LLU (fe80::, with its EUI-64 interface identifier).
- In the containers, after manually configuring an IPv6 ULA, I can ping the gateway address fc00:4780:28:5295::fd and hosts on the Internet.
- In the containers, I wonder why the IPv4 routes include the Cloudflare and Google DNS servers: imagine if I had to declare the IP addresses of every website in the world one by one.
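The host routes to 1.1.1.1 and 8.8.8.8 most likely do not come from Incus itself: in systemd-networkd, the RoutesToDNS= option of the [DHCPv4] section defaults to yes and installs a route to every DNS server received over DHCP, and dnsmasq announces exactly these two servers (--dhcp-option-force=6,1.1.1.1,8.8.8.8 in its command line at the end of this post). They use the same gateway as the default route, so they are harmless. If you would rather not have them, a drop-in like the one below should suppress them; it assumes the container's existing /etc/systemd/network/eth0.network seen in its logs, and the file name no-dns-routes.conf is hypothetical.

```sh
# Run on the host: add a drop-in next to the container's eth0.network
incus exec bdc -- mkdir -p /etc/systemd/network/eth0.network.d
incus exec bdc -- sh -c 'cat > /etc/systemd/network/eth0.network.d/no-dns-routes.conf <<EOF
[DHCPv4]
RoutesToDNS=no
EOF'

# Reload networkd and re-apply the configuration to eth0
incus exec bdc -- networkctl reload
incus exec bdc -- networkctl reconfigure eth0
incus exec bdc -- ip -4 route show
```

If the old routes linger after the reconfigure, restarting the container should clear them.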

Example:

```
root@hst-fr:~ # incus exec bdc -- bash
root@hst-fr.bdc:~ # ip -4 address show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
30: eth0@if31: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000 link-netnsid 0
    inet 10.175.0.114/24 metric 1024 brd 10.175.0.255 scope global dynamic eth0
       valid_lft 2612sec preferred_lft 2612sec
root@hst-fr.bdc:~ # ip -4 route show
default via 10.175.0.254 dev eth0 proto dhcp src 10.175.0.114 metric 1024
1.1.1.1 via 10.175.0.254 dev eth0 proto dhcp src 10.175.0.114 metric 1024
8.8.8.8 via 10.175.0.254 dev eth0 proto dhcp src 10.175.0.114 metric 1024
10.175.0.0/24 dev eth0 proto kernel scope link src 10.175.0.114 metric 1024
10.175.0.254 dev eth0 proto dhcp scope link src 10.175.0.114 metric 1024
root@hst-fr.bdc:~ # ping -c2 10.175.0.254
PING 10.175.0.254 (10.175.0.254) 56(84) bytes of data.
From 10.175.0.114 icmp_seq=1 Destination Host Unreachable
From 10.175.0.114 icmp_seq=2 Destination Host Unreachable

--- 10.175.0.254 ping statistics ---
2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1015ms

root@hst-fr.bdc:~ # ping 1.1.1.1 -c2
PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.
From 10.175.0.114 icmp_seq=1 Destination Host Unreachable
From 10.175.0.114 icmp_seq=2 Destination Host Unreachable

--- 1.1.1.1 ping statistics ---
2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1026ms
```

IPv4 connectivity: KO.
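When the container itself reports "Destination Host Unreachable" for an address on its own /24, it usually means ARP resolution of that address failed, i.e. nothing on the bridge answered. Some generic checks that may help narrow this down, assuming iproute2, tcpdump and nftables are available on the host; none of them is Incus-specific, and they are a diagnostic sketch rather than a known fix. Note that with ipv4.firewall set to "false", Incus adds no rules of its own, so whatever policy the host firewall has applies unchanged.

```sh
# In the container: did the gateway's MAC address resolve? (FAILED/INCOMPLETE = no ARP reply)
incus exec bdc -- ip neigh show dev eth0

# On the host: is the container's veth really attached to incusbr0?
ip link show master incusbr0
bridge link show

# On the host: watch ARP and ICMP on the bridge while pinging from the container
tcpdump -ni incusbr0 arp or icmp

# On the host: look for rules or settings that could drop traffic from incusbr0
nft list ruleset
sysctl net.ipv4.ip_forward
```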

```
root@hst-fr.bdc:~ # ip -6 address show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 state UNKNOWN qlen 1000
    inet6 ::1/128 scope host proto kernel_lo
       valid_lft forever preferred_lft forever
35: eth0@if36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 state UP qlen 1000
    inet6 fe80::1266:6aff:feb1:a21/64 scope link proto kernel_ll
       valid_lft forever preferred_lft forever
root@hst-fr.bdc:~ # ip -6 route show
fe80::/64 dev eth0 proto kernel metric 256 pref medium
```

I add an IPv6 address and a default route, and it works:

```
root@hst-fr.bdc:~ # ip -6 address add fc00:4780:28:5295::bdc/112 dev eth0
root@hst-fr.bdc:~ # ip -6 route add default via fc00:4780:28:5295::fd
root@hst-fr.bdc:~ # ip -6 route show
fc00:4780:28:5295::/112 dev eth0 proto kernel metric 256 pref medium
fe80::/64 dev eth0 proto kernel metric 256 pref medium
default via fc00:4780:28:5295::fd dev eth0 metric 1024 pref medium
root@hst-fr.bdc:~ # ping -6 -c2 2606:4700:4700::1111
PING 2606:4700:4700::1111 (2606:4700:4700::1111) 56 data bytes
64 bytes from 2606:4700:4700::1111: icmp_seq=1 ttl=53 time=1.89 ms
64 bytes from 2606:4700:4700::1111: icmp_seq=2 ttl=53 time=2.78 ms

--- 2606:4700:4700::1111 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 1.893/2.338/2.783/0.445 ms
```
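My understanding is that the containers get no global IPv6 address automatically because the bridge uses a /112 prefix while stateful DHCPv6 is off: in that mode dnsmasq only sends router advertisements (--dhcp-range ::,constructor:incusbr0,ra-only in its command line at the end of this post), and SLAAC only works with a /64 prefix. Two possible ways out, sketched under that assumption and not tested on this setup; option B also assumes the container's eth0.network enables DHCP for IPv6 (DHCP=yes or DHCP=ipv6).

```sh
# Option A: give the bridge a /64 so SLAAC can work (addresses become EUI-64 based)
incus network set incusbr0 ipv6.address=fc00:4780:28:5295::fd/64

# Option B: keep the /112 and let dnsmasq hand out addresses with stateful DHCPv6
incus network set incusbr0 ipv6.dhcp=true ipv6.dhcp.stateful=true

# Then restart a container and check what it received
incus restart bdc
incus exec bdc -- ip -6 address show dev eth0
```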

I would also like your help with assigning a static IPv4 address to my containers through the DHCP server built into Incus.

I want to assign both a static IPv4 address and a static IPv6 address, and I am still looking for the right way to do it.

I tried these two commands to assign an IPv4 address, but both return an error:

```
root@hst-fr:~ # incus config device set bdc eth0 ipv4.address=10.175.0.2/24
Error: Device from profile(s) cannot be modified for individual instance. Override device or modify profile instead
root@hst-fr:~ # incus config device override bdc eth0 ipv4.address=10.175.0.2/24
Error: Invalid devices: Device validation failed for "eth0": Device IP address "10.175.0.2/24" not within network "incusbr0" subnet
```
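Judging from the second error, the device-level ipv4.address key seems to expect a bare address rather than CIDR notation: "10.175.0.2/24" is probably not parsed as an IP at all, which would explain the "not within network subnet" message even though 10.175.0.2 lies inside 10.175.0.0/24. A sketch of what I would try next; the IPv6 value fc00:4780:28:5295::bdc is simply reused from the manual test above, and, as far as I understand the Incus documentation, a static ipv6.address on the NIC only works when ipv6.dhcp.stateful is enabled on the network (otherwise it may be rejected).

```sh
# Override the profile's eth0 for this instance only, with a bare IPv4 address (no /24)
incus config device override bdc eth0 ipv4.address=10.175.0.2

# Once overridden, the device belongs to the instance, so "set" should now be accepted
incus config device set bdc eth0 ipv6.address=fc00:4780:28:5295::bdc

# The reservation should appear in dnsmasq's per-instance hosts file (path from the logs below)
cat /var/lib/incus/networks/incusbr0/dnsmasq.hosts/bdc.eth0

# Restart so the container requests a new lease, then check the result
incus restart bdc
incus list bdc
```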

For my own learning, I also wanted to get familiar with newer networking tools such as “systemd-networkd.service” and “systemd-resolved.service”:

```
root@hst-fr.bdc:~ # networkctl status
● Interfaces: 1, 35
           State: routable
    Online state: online
         Address: 10.175.0.114 on eth0
                  fe80::1266:6aff:feb1:a21 on eth0
         Gateway: 10.175.0.254 on eth0
             DNS: 1.1.1.1
                  8.8.8.8
  Search Domains: incus

Nov 12 21:41:56 bdc systemd-networkd[134]: Failed to increase receive buffer size for general netlink socket, ignoring: Operation not permitted
Nov 12 21:41:56 bdc systemd-networkd[134]: lo: Link UP
Nov 12 21:41:56 bdc systemd-networkd[134]: lo: Gained carrier
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Link UP
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Gained carrier
Nov 12 21:41:56 bdc systemd-networkd[134]: Unable to load sysctl monitor BPF program, ignoring: Operation not permitted
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Configuring with /etc/systemd/network/eth0.network.
Nov 12 21:41:56 bdc systemd[1]: Started systemd-networkd.service - Network Configuration.
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: DHCPv4 address 10.175.0.114/24, gateway 10.175.0.254 acquired from 10.175.0.254
Nov 12 21:41:57 bdc systemd-networkd[134]: eth0: Gained IPv6LL
root@hst-fr.bdc:~ # systemctl status systemd-networkd.service
● systemd-networkd.service - Network Configuration
     Loaded: loaded (/usr/lib/systemd/system/systemd-networkd.service; enabled; preset: enabled)
    Drop-In: /run/systemd/system/systemd-networkd.service.d
             └─zzz-lxc-ropath.conf
             /run/systemd/system/service.d
             └─zzz-lxc-service.conf
     Active: active (running) since Wed 2025-11-12 21:41:56 UTC; 8min ago
 Invocation: 02d5605b75c74c76a3d35bb894045677
TriggeredBy: ● systemd-networkd.socket
             ● systemd-networkd-varlink.socket
       Docs: man:systemd-networkd.service(8)
             man:org.freedesktop.network1(5)
   Main PID: 134 (systemd-network)
     Status: "Processing requests..."
      Tasks: 1 (limit: 38490)
   FD Store: 0 (limit: 512)
     Memory: 1.8M (peak: 2.4M)
        CPU: 45ms
     CGroup: /system.slice/systemd-networkd.service
             └─134 /usr/lib/systemd/systemd-networkd

Nov 12 21:41:56 bdc systemd-networkd[134]: Failed to increase receive buffer size for general netlink socket, ignoring: Operation not permitted
Nov 12 21:41:56 bdc systemd-networkd[134]: lo: Link UP
Nov 12 21:41:56 bdc systemd-networkd[134]: lo: Gained carrier
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Link UP
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Gained carrier
Nov 12 21:41:56 bdc systemd-networkd[134]: Unable to load sysctl monitor BPF program, ignoring: Operation not permitted
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: Configuring with /etc/systemd/network/eth0.network.
Nov 12 21:41:56 bdc systemd[1]: Started systemd-networkd.service - Network Configuration.
Nov 12 21:41:56 bdc systemd-networkd[134]: eth0: DHCPv4 address 10.175.0.114/24, gateway 10.175.0.254 acquired from 10.175.0.254
Nov 12 21:41:57 bdc systemd-networkd[134]: eth0: Gained IPv6LL
```

And “systemd-resolved.service”:

```
root@hst-fr.bdc:~ # systemctl status systemd-resolved.service
● systemd-resolved.service - Network Name Resolution
     Loaded: loaded (/usr/lib/systemd/system/systemd-resolved.service; enabled; preset: enabled)
    Drop-In: /run/systemd/system/systemd-resolved.service.d
             └─zzz-lxc-ropath.conf
             /run/systemd/system/service.d
             └─zzz-lxc-service.conf
     Active: active (running) since Wed 2025-11-12 21:41:56 UTC; 9min ago
 Invocation: 27354d912bba4fdb9d26a80c4f35847e
TriggeredBy: ● systemd-resolved-monitor.socket
             ● systemd-resolved-varlink.socket
       Docs: man:systemd-resolved.service(8)
             man:org.freedesktop.resolve1(5)
             https://systemd.io/WRITING_NETWORK_CONFIGURATION_MANAGERS
             https://systemd.io/WRITING_RESOLVER_CLIENTS
   Main PID: 117 (systemd-resolve)
     Status: "Processing requests..."
      Tasks: 1 (limit: 38490)
     Memory: 3.1M (peak: 3.5M)
        CPU: 65ms
     CGroup: /system.slice/systemd-resolved.service
             └─117 /usr/lib/systemd/systemd-resolved

Nov 12 21:41:56 bdc systemd-resolved[117]: Positive Trust Anchors:
Nov 12 21:41:56 bdc systemd-resolved[117]: . IN DS 20326 8 2 e06d44b80b8f1d39a95c0b0d7c65d08458e880409bbc683457104237c7f8ec8d
Nov 12 21:41:56 bdc systemd-resolved[117]: . IN DS 38696 8 2 683d2d0acb8c9b712a1948b27f741219298d0a450d612c483af444a4c0fb2b16
Nov 12 21:41:56 bdc systemd-resolved[117]: Negative trust anchors: home.arpa 10.in-addr.arpa 16.172.in-addr.arpa 17.172.in-addr.arpa 18.172.in-addr.arpa 19.172.in-addr.arpa 20.172.in-addr.arpa 21.172.in-addr.arpa 22.172.in-addr.arpa 23.172.in-addr.arpa 24.172.in-addr.arpa 25.172.in-addr.arpa 26.172.in-addr.arpa 27.172.in-addr.arpa 28.172.in-addr.arpa 29.172.in-addr.arpa 30.172.in-addr.arpa 31.172.in-addr.arpa 170.0.0.192.in-addr.arpa 171.0.0.192.in-addr.arpa 168.192.in-addr.arpa d.f.ip6.arpa ipv4only.arpa resolver.arpa corp home internal intranet lan local private test
Nov 12 21:41:56 bdc systemd-resolved[117]: Using system hostname 'bdc'.
Nov 12 21:41:56 bdc systemd-resolved[117]: Failed to install memory pressure event source, ignoring: Read-only file system
Nov 12 21:41:56 bdc systemd[1]: Started systemd-resolved.service - Network Name Resolution.
```

And, of course, the Incus daemon itself:

```
root@hst-fr:~ # systemctl status incus.service
● incus.service - Incus - Daemon
     Loaded: loaded (/usr/lib/systemd/system/incus.service; indirect; preset: enabled)
     Active: active (running) since Mon 2025-11-10 10:49:35 CET; 2 days ago
 Invocation: 2b56708ed26d4cf082432314d254d503
TriggeredBy: ● incus.socket
   Main PID: 18032 (incusd)
      Tasks: 22
     Memory: 1.1G (peak: 1.5G)
        CPU: 2min 3.826s
     CGroup: /system.slice/incus.service
             ├─18032 incusd --group incus-admin --logfile /var/log/incus/incusd.log
             └─36026 dnsmasq --keep-in-foreground --strict-order --bind-interfaces --except-interface=lo --pid-file= --no-ping --interface=incusbr0 --dhcp-rapid-commit --no-negcache --quiet-dhcp --quiet-dhcp6 --quiet-ra --listen-address=10.175.0.254 --dhcp-no-override --dhcp-authoritative --dhcp-leasefile=/var/lib/incus/networks/incusbr0/dnsmasq.leases --dhcp-hostsfile=/var/lib/incus/networks/incusbr0/dnsmasq.hosts --dhcp-option-force=3,10.175.0.254 --dhcp-option-force=6,1.1.1.1,8.8.8.8 --dhcp-option-force=121,0.0.0.0/0,10.175.0.254 --dhcp-range 10.175.0.111,10.175.0.115,1h --listen-address=fc00:4780:28:5295::fd --enable-ra --dhcp-range ::,constructor:incusbr0,ra-only "--dhcp-option-force=option6:dns-server,[2606:4700:4700::1111,2001:4860:4860::8888]" -s incus --interface-name _gateway.incus,incusbr0 -S /incus/ --conf-file=/var/lib/incus/networks/incusbr0/dnsmasq.raw -u incus -g incus

Nov 12 22:41:49 hst-fr dnsmasq-dhcp[36026]: DHCP, sockets bound exclusively to interface incusbr0
Nov 12 22:41:49 hst-fr dnsmasq[36026]: using only locally-known addresses for incus
Nov 12 22:41:49 hst-fr dnsmasq[36026]: reading /etc/resolv.conf
Nov 12 22:41:49 hst-fr dnsmasq[36026]: using nameserver 89.116.146.10#53
Nov 12 22:41:49 hst-fr dnsmasq[36026]: using nameserver 1.1.1.1#53
Nov 12 22:41:49 hst-fr dnsmasq[36026]: using nameserver 8.8.4.4#53
Nov 12 22:41:49 hst-fr dnsmasq[36026]: using only locally-known addresses for incus
Nov 12 22:41:49 hst-fr dnsmasq[36026]: read /etc/hosts - 12 names
Nov 12 22:41:49 hst-fr dnsmasq-dhcp[36026]: read /var/lib/incus/networks/incusbr0/dnsmasq.hosts/bdc.eth0
Nov 12 22:41:49 hst-fr dnsmasq-dhcp[36026]: read /var/lib/incus/networks/incusbr0/dnsmasq.hosts/web.eth0
```

See you soon.

Romain (O.Romain.Jaillet-ramey)

ZW3B’s LAB3W : The Web’s Laboratory ; Engineering of the Internet.

Founder ZW3B.FR | TV | EU | COM | NET | BLOG | APP and IP❤10.ws more.