Hello,

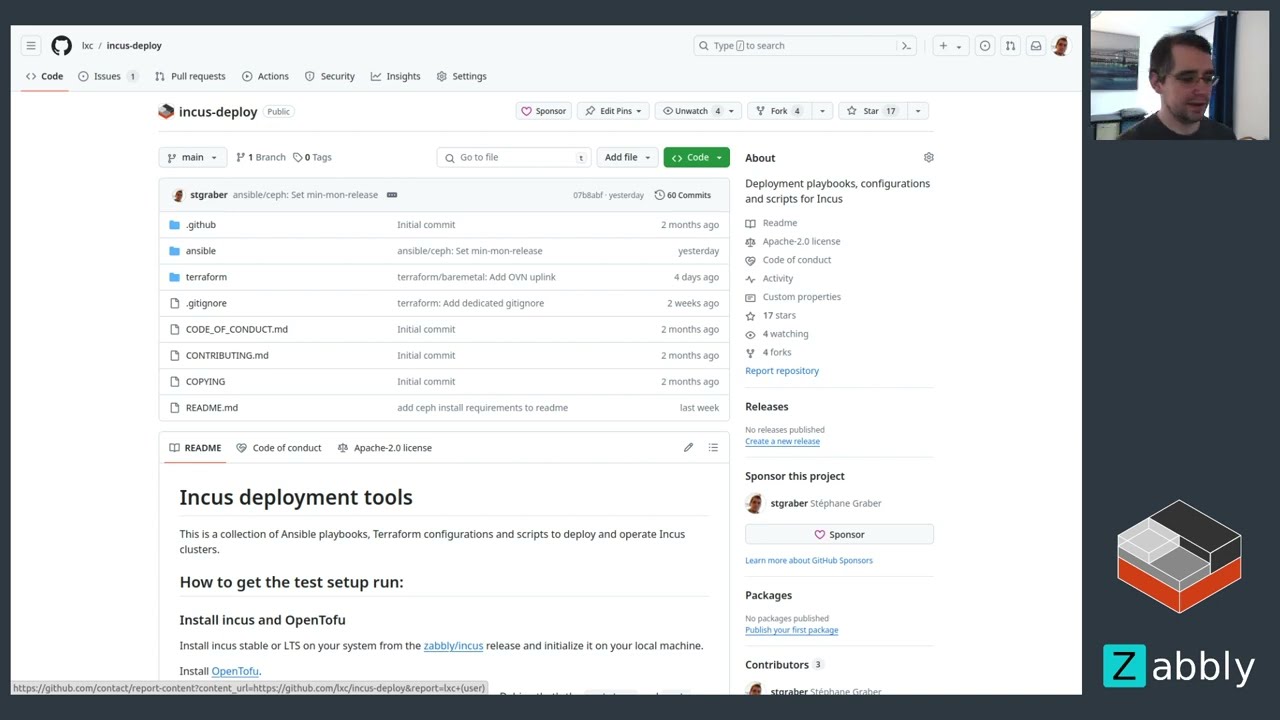

Those following my live streams will already know about my recent work on an Ansible- and Terraform-based deployment tool aimed at making Incus clusters very easy to deploy and maintain.

While it’s still early days for this effort, it can now deploy a full Incus cluster, including Ceph storage and OVN networking, effectively replicating the environment one could build with MicroCloud on the LXD side.

This is obviously quite a different take, as it uses stock packages for everything: no snaps and no specific distribution requirements (though so far it has only been tested on Ubuntu 22.04, and supporting others will need some work). It’s also a lot more customizable and can be integrated into someone’s existing deployment tooling.

The repository for this has now been moved over to the LXC organization.

And here is a full demo of it building a 5-machine cluster:

What the screencast above shows is basically:

- Using Terraform on an Incus system to create a new project (dev-incus-deploy), then an extra network (br-ovn-test) to be used for OVN ingress, and then 5 virtual machines acting as our clustered servers, each with 5 disks attached for use by a mix of local and Ceph storage.

- Quickly looking at the Ansible configuration to show what networks and storage pools will be created on the resulting cluster.

- Running Ansible to deploy everything on the systems.

- Then entering one of the servers and throwing a few instances at the cluster to make sure everything is behaving.

For actual deployments, the Terraform step would be replaced either by slightly different Terraform targeting a bare-metal provisioning tool or by provisioning the machines by hand. For testing, though, Terraform makes it easy to experiment.

Next steps:

- Add support for Ubuntu 20.04 LTS

- Add support for clustered LVM as an alternative to Ceph

- Add the ability to deploy Grafana, Prometheus and Loki (monitoring stack)

- Add the ability to deploy OpenFGA and Keycloak (authorization stack)

- Improve the Terraform/Ansible integration so we can do a full test deployment without having to manually tweak the Ansible inventory
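As a rough illustration of that last item, one common pattern is an Ansible dynamic inventory script that reads `terraform output -json` and emits the inventory directly, so nothing has to be edited by hand between the two steps. This is a hypothetical sketch, not how the repository does it: it assumes the Terraform configuration exports the VM names as an output called `server_names`, that the Terraform files live in a `terraform/` directory, and that Ansible reaches the VMs through the `community.general.incus` connection plugin. The group name `cluster` is likewise made up for illustration.

```python
#!/usr/bin/env python3
"""Minimal Ansible dynamic inventory built from `terraform output -json`.

Hypothetical sketch: assumes the Terraform configuration exports a list of
VM names as an output called "server_names"; the real Terraform code may
expose nothing of the sort.
"""
import json
import subprocess
import sys

TF_DIR = "terraform"                # hypothetical path to the Terraform config
TF_OUTPUT = "server_names"          # hypothetical Terraform output name
INCUS_PROJECT = "dev-incus-deploy"  # project created in the demo


def build_inventory(tf_outputs: dict, project: str, remote: str = "local") -> dict:
    """Convert Terraform's JSON outputs into Ansible's JSON inventory format."""
    # `terraform output -json` wraps each output as {"sensitive", "type", "value"}
    servers = tf_outputs[TF_OUTPUT]["value"]
    return {
        "cluster": {  # hypothetical group name
            "hosts": list(servers),
            "vars": {
                # Run tasks through the Incus API instead of SSH
                "ansible_connection": "community.general.incus",
                "ansible_incus_remote": remote,
                "ansible_incus_project": project,
            },
        },
        # Supplying _meta.hostvars stops Ansible from calling --host per machine
        "_meta": {"hostvars": {name: {} for name in servers}},
    }


def main(argv: list) -> None:
    if "--list" in argv:
        # Ask Terraform for its outputs as JSON
        result = subprocess.run(
            ["terraform", "output", "-json"],
            cwd=TF_DIR, capture_output=True, text=True, check=True,
        )
        inventory = build_inventory(json.loads(result.stdout), INCUS_PROJECT)
        print(json.dumps(inventory, indent=2))
    elif "--host" in argv:
        # Host variables were already returned under _meta by --list
        print(json.dumps({}))


if __name__ == "__main__":
    main(sys.argv[1:])
```

Saved as an executable file (say, inventory.py), its output can be checked with `ansible-inventory -i inventory.py --list` before pointing a playbook run at it. A real version would also need to carry the per-host settings the cluster deployment relies on (for example, which disks go to local storage and which to Ceph), which this sketch leaves out.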

I’d also like to repeat that I’m no expert in Ansible or Terraform, far from it, so anyone interested in contributing to this effort is most welcome to do so!

The goal is really to keep this very easy to get started with, while offering enough options that production users don’t need to reinvent the wheel and are motivated to contribute back anything useful to the wider Incus community.