Hello,

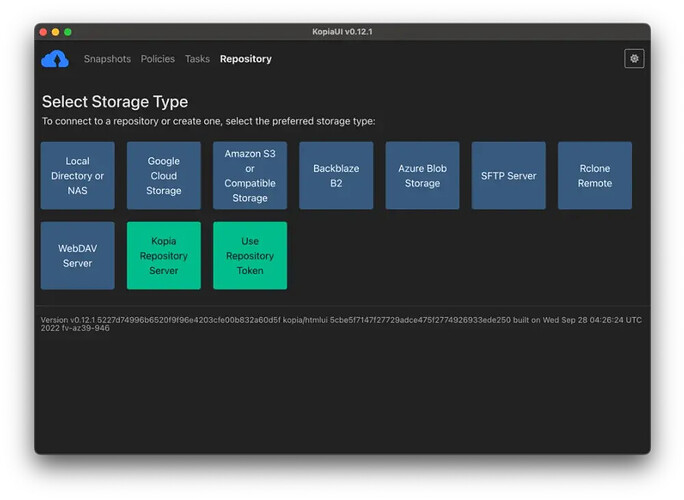

I use Kopia to back up my important files to a Hetzner storage box in Europe for 3.5€.

What should I back up for Incus, considering I use custom volumes?

Following this guide, do I just need to tarball the `/var/lib/incus` directory and send it to my storage box? If that’s it, it’s magic!
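A minimal sketch of the tarball idea, assuming the default data directory `/var/lib/incus` and a hypothetical staging directory `/root/incus-backup` that Kopia then snapshots. The function name `archive_incus_dir` is made up for illustration. One hedged caveat: depending on the storage driver behind the pool, instance and custom volume data may not be fully contained in (or mounted under) `/var/lib/incus` when the copy runs, for example the dataset of a stopped container on a ZFS pool. In that case the tarball alone might not capture everything. Copying with Incus and the instances stopped would also make the result more consistent.

```shell
#!/bin/sh
# Sketch of "tarball /var/lib/incus and ship it": create a dated archive that
# Kopia can snapshot afterwards. Default paths are assumptions.

# Usage: archive_incus_dir [SRC_DIR] [DEST_DIR]; prints the archive path.
archive_incus_dir() {
  local src="${1:-/var/lib/incus}"
  local dest_dir="${2:-/root/incus-backup}"
  local archive
  mkdir -p "$dest_dir" || return 1
  archive="$dest_dir/incus-$(date +%Y%m%d-%H%M%S).tar.gz"
  # -C makes the paths inside the archive relative ("incus/...").
  # --numeric-owner records only numeric UIDs/GIDs; the shifted IDs of
  # unprivileged containers usually have no host user names anyway.
  tar --numeric-owner -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  printf '%s\n' "$archive"
}
```

Usage would then be something like `archive_incus_dir /var/lib/incus /root/incus-backup` followed by `kopia snapshot create /root/incus-backup`. When restoring, extracting with `tar --numeric-owner -xzf` keeps the numeric ownership as-is instead of remapping it by name.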

Folder size:

My containers:

```
root@incus:~# incus list
```

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |
|---|---|---|---|---|---|
| adguardhome | RUNNING | 10.0.0.12 (eth0) | fd42:f836:2ae:e691:216:3eff:fee6:87a2 (eth0) | CONTAINER | 0 |
| caddy | RUNNING | 10.0.0.31 (eth0) | fd42:f836:2ae:e691:216:3eff:febe:ec5a (eth0) | CONTAINER | 0 |
| dns-technitium-authority | RUNNING | 10.0.0.17 (eth0) | fd42:f836:2ae:e691:216:3eff:fe5d:6c84 (eth0) | CONTAINER | 0 |
| dns-technitium-recursive | RUNNING | 10.0.0.15 (eth0) | fd42:f836:2ae:e691:216:3eff:fead:c571 (eth0) | CONTAINER | 0 |
| filebrowser | RUNNING | 10.0.0.147 (eth0) | fd42:f836:2ae:e691:216:3eff:fe95:fc79 (eth0) | CONTAINER | 0 |
| filebrowser-local | RUNNING | 10.0.0.148 (eth0) | fd42:f836:2ae:e691:216:3eff:fe30:803b (eth0) | CONTAINER | 0 |
| goaccess | RUNNING | 10.0.0.178 (eth0) | fd42:f836:2ae:e691:216:3eff:fe49:4005 (eth0) | CONTAINER | 0 |
| jump2-ssh | RUNNING | 10.0.0.54 (eth0) | fd42:f836:2ae:e691:216:3eff:fe34:56d4 (eth0) | CONTAINER | 0 |
| mkdocs | RUNNING | 10.0.0.14 (eth0) | fd42:f836:2ae:e691:216:3eff:fe3f:c170 (eth0) | CONTAINER | 0 |
| myspeed | RUNNING | 10.0.0.13 (eth0) | fd42:f836:2ae:e691:216:3eff:fec4:fab6 (eth0) | CONTAINER | 0 |
| nextjs | RUNNING | 10.0.0.170 (eth0) | fd42:f836:2ae:e691:216:3eff:fe2b:8c74 (eth0) | CONTAINER | 0 |
| pagefind | STOPPED | | | CONTAINER | 0 |
| prestashop | RUNNING | 10.0.0.248 (eth0) | fd42:f836:2ae:e691:216:3eff:fe31:cbcf (eth0) | CONTAINER | 0 |
| sftp-publii | RUNNING | 10.0.0.77 (eth0) | fd42:f836:2ae:e691:216:3eff:fedb:7292 (eth0) | CONTAINER | 0 |

My storage volumes (pool `tank`):

```
root@incus:~# incus storage volume list tank
```

| TYPE | NAME | DESCRIPTION | CONTENT-TYPE | USED BY |
|---|---|---|---|---|
| container | adguardhome | | filesystem | 1 |
| container | caddy | | filesystem | 1 |
| container | dns-technitium-authority | | filesystem | 1 |
| container | dns-technitium-recursive | | filesystem | 1 |
| container | filebrowser | | filesystem | 1 |
| container | filebrowser-local | | filesystem | 1 |
| container | goaccess | | filesystem | 1 |
| container | jump2-ssh | | filesystem | 1 |
| container | mkdocs | | filesystem | 1 |
| container | myspeed | | filesystem | 1 |
| container | nextjs | | filesystem | 1 |
| container | pagefind | | filesystem | 1 |
| container | prestashop | | filesystem | 1 |
| container | sftp-publii | | filesystem | 1 |
| custom | filebrowser | | filesystem | 3 |
| custom | publii | | filesystem | 3 |
| custom | sftpbox | | filesystem | 0 |
| custom | webdata | | filesystem | 3 |
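An alternative that may suit custom volumes better is to export each instance and each custom volume with Incus's own `incus export` and `incus storage volume export` commands, then let Kopia snapshot the resulting tarballs. As far as I understand, `incus export` covers the instance itself (root volume, config and, by default, snapshots) but not the custom volumes attached to it, which is why they are exported separately here. This is a minimal sketch assuming the pool `tank` and the custom volume names from the listing above. The function `export_all`, the `INCUS` override variable (only there to allow a dry run with a stub), and the destination path are hypothetical.

```shell
#!/bin/sh
# Sketch: export every instance plus the given custom volumes of one pool
# into a single directory that Kopia can snapshot afterwards.

# Command to run; override (e.g. INCUS=some_stub) to dry-run without Incus.
INCUS="${INCUS:-incus}"

# Usage: export_all POOL DEST_DIR [CUSTOM_VOLUME...]
export_all() {
  local pool="$1" dest="$2" name
  shift 2
  mkdir -p "$dest" || return 1

  # One tarball per instance, as listed by "incus list" (names only, CSV).
  # This does not include the custom volumes attached to the instance.
  for name in $("$INCUS" list --columns n --format csv); do
    "$INCUS" export "$name" "$dest/instance-$name.tar.gz" || return 1
  done

  # Custom volumes are exported on their own, one tarball each.
  for name in "$@"; do
    "$INCUS" storage volume export "$pool" "$name" "$dest/volume-$name.tar.gz" || return 1
  done
}
```

Usage might look like `export_all tank /root/incus-exports filebrowser publii sftpbox webdata`, ideally into an empty directory on each run, followed by `kopia snapshot create /root/incus-exports`. The default (non-optimized) export format should be restorable with `incus import` and `incus storage volume import`; `--optimized-storage` could be faster, but optimized backups can only be restored onto a pool using the same storage driver.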