ben

August 27, 2022, 3:40pm

1

I’m trying to use the whole of 4 disks for LVM storage, but I’m in quite a pickle (LVM is totally new to me).

lxc storage create default lvm source=/dev/vg/all lvm.vg.force_reuse=true

Error: Failed to run: pvcreate /dev/vg/all: Failed to clear hint file.

wipefs -a /dev/vg/all

getting nervous …

umount /dev/vg/all

lsof /home and a bunch of googling showed me that I needed to log in as root instead

umount /dev/vg/all

wipefs --all --force /dev/vg/all

lxc storage create default lvm source=/dev/vg/all lvm.vg.force_reuse=true

I’m not doing this right, am I?

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 62M 1 loop /snap/core20/1593

loop1 7:1 0 62M 1 loop /snap/core20/1611

loop2 7:2 0 102.4M 1 loop /snap/lxd/23270

loop3 7:3 0 47M 1 loop /snap/snapd/16292

sda 8:0 0 1.8T 0 disk

├─sda1 8:1 0 511M 0 part /boot/efi

├─sda2 8:2 0 29.3G 0 part /

├─sda3 8:3 0 512M 0 part [SWAP]

├─sda4 8:4 0 1.8T 0 part

│ └─vg-all 253:0 0 7.3T 0 lvm /home

└─sda5 8:5 0 2M 0 part

sdb 8:16 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

sdc 8:32 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

sdd 8:48 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

ben

August 27, 2022, 4:04pm

2

partprobe -s /dev/vg/all

don’t think nuking that is a good idea …

ben

August 27, 2022, 4:16pm

3

How I initially set up the lv

reinstall on soyoustart

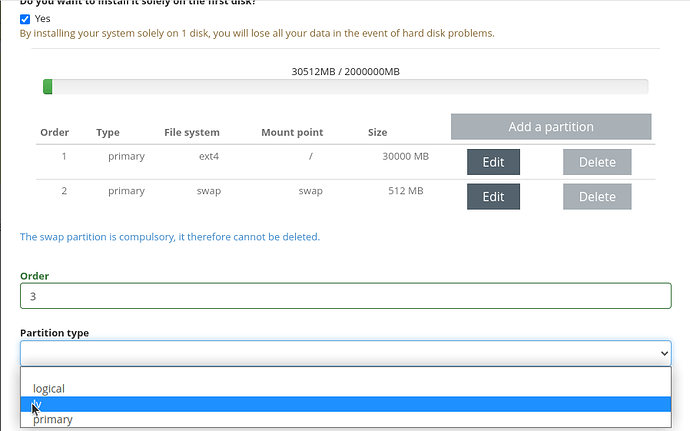

choose: Ubuntu Server 20.04 LTS “Focal Fossa” (64bits) [x] Custom installation = true

[x] Do you want to install it solely on the first disk? = true

edit primary partition to 30000MB (30GB) for the host system, ram & extra messing about space

add another partition:

order = 3

partition type = lv

file system = ext4

mount point = /home

size = [x] Use the remaining space = true

then just let the installation happen …

lvdisplay

--- Logical volume ---

LV Path /dev/vg/all

LV Name all

VG Name vg

LV UUID z1CGuq-U8vZ-r5hz-oaFW-pZEv-d8oo-rKkWAU

LV Write Access read/write

LV Creation host, time rescue-install-ca, 2022-08-25 17:44:52 +0000

LV Status available

# open 1

LV Size <7.25 TiB

Current LE 1899971

Segments 4

Allocation inherit

Read ahead sectors auto

- currently set to 256

Block device 253:0

lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

loop0 7:0 0 62M 1 loop /snap/core20/1593

loop1 7:1 0 67.8M 1 loop /snap/lxd/22753

loop2 7:2 0 47M 1 loop /snap/snapd/16292

loop3 7:3 0 62M 1 loop /snap/core20/1611

sda 8:0 0 1.8T 0 disk

├─sda1 8:1 0 511M 0 part /boot/efi

├─sda2 8:2 0 29.3G 0 part /

├─sda3 8:3 0 512M 0 part [SWAP]

├─sda4 8:4 0 1.8T 0 part

│ └─vg-all 253:0 0 7.3T 0 lvm /home

└─sda5 8:5 0 2M 0 part

sdb 8:16 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

sdc 8:32 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

sdd 8:48 0 1.8T 0 disk

└─vg-all 253:0 0 7.3T 0 lvm /home

df -h

Filesystem Size Used Avail Use% Mounted on

udev 32G 0 32G 0% /dev

tmpfs 6.3G 1.6M 6.3G 1% /run

/dev/sda2 29G 2.3G 25G 9% /

tmpfs 32G 0 32G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 32G 0 32G 0% /sys/fs/cgroup

/dev/mapper/vg-all 1.8T 52K 1.7T 1% /home

/dev/loop0 62M 62M 0 100% /snap/core20/1593

/dev/loop1 68M 68M 0 100% /snap/lxd/22753

/dev/loop2 47M 47M 0 100% /snap/snapd/16292

/dev/sda1 511M 5.3M 506M 2% /boot/efi

tmpfs 6.3G 0 6.3G 0% /run/user/1000

/dev/loop3 62M 62M 0 100% /snap/core20/1611

Is this the correct setup for LXD to use as a giant LVM pool?

tomp

August 27, 2022, 5:22pm

4

You create the volume group manually, using whatever configuration you choose, and once done tell LXD to use it with:

lxc storage create mypool lvm source=<volume group name>

tomp

August 27, 2022, 5:23pm

5

You should not create any logical volumes on it, just leave it as an empty volume group for LXD to consume.

ben

August 27, 2022, 5:25pm

6

lxc storage create default lvm source=/dev/vg/all lvm.vg.force_reuse=true

ben

August 27, 2022, 5:30pm

7

so I should not expand it first?

just let it consume the lv

then after can I do:

wipefs --all --force /dev/sdb

wipefs --all --force /dev/sdc

wipefs --all --force /dev/sdd

pvcreate /dev/sdb /dev/sdc /dev/sdd

vgextend vg /dev/sdb /dev/sdc /dev/sdd

lvextend -l +100%FREE /dev/vg/all

to gain the space on the other disks?

tomp

August 27, 2022, 6:01pm

8

There should be no lvs at all, only an empty VG.
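
One way to check whether a volume group is still empty is to look at its logical volume count. The following is a minimal sketch, assuming the `vgs` report field `lv_count` and the volume group name `vg` used earlier in this thread. The helper name `vg_count_is_zero` is made up for illustration, and the sample value `1` only stands in for real `vgs` output.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) when the LV count is zero.
# Expects the raw output of: vgs --noheadings -o lv_count <vg>
vg_count_is_zero() {
    # vgs pads its output with spaces, so strip all whitespace first.
    count="$(printf '%s' "$1" | tr -d '[:space:]')"
    [ "$count" = "0" ]
}

# On a real system (needs root; "vg" is the volume group from this thread):
#   vg_count_is_zero "$(vgs --noheadings -o lv_count vg)" && echo "vg is empty"
# Here a sample value is used instead, so nothing touches the disks.
if vg_count_is_zero "   1"; then
    echo "empty: ready for LXD"
else
    echo "not empty: remove the logical volumes first"
fi
```

With the sample value above, the second branch is taken, which matches the situation in this thread where `vg` still holds the logical volume `all`.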

ben

August 27, 2022, 6:13pm

10

On my host, when I add a partition, should I just pick primary instead of lv or logical? This is probably why I’m confused.

tomp

August 27, 2022, 9:43pm

11

I am not familiar with the control panel you are using there.

But if you are setting up a custom volume group spanning several disks then I would normally do the following:

Create a primary partition on each drive.

Mark that partition as an LVM physical volume using pvcreate.

Create an LVM volume group using vgcreate that includes the PVs I just created.

Instruct LXD to use said volume group using the command I mentioned above.
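
The steps above can be sketched as a dry run that only prints the commands instead of running them. This is a rough illustration, not a tested recipe for any particular host: the function name `plan_lvm_pool`, the volume group name `lxdvg`, and the partition names `/dev/sdb1`, `/dev/sdc1` and `/dev/sdd1` are placeholders, and the partitions are assumed to exist already (step 1).

```shell
#!/bin/sh
# Dry run: print the commands for steps 2 to 4 without executing anything.
# Arguments: <vg name> <LXD pool name> <partition>...
plan_lvm_pool() {
    vg="$1"
    pool="$2"
    shift 2
    # Step 2: mark each partition as an LVM physical volume.
    for part in "$@"; do
        echo "pvcreate $part"
    done
    # Step 3: create one volume group spanning all of the physical volumes.
    echo "vgcreate $vg $*"
    # Step 4: hand the empty volume group to LXD (no logical volumes created).
    echo "lxc storage create $pool lvm source=$vg"
}

# Placeholder names; replace them with the real partitions before use.
plan_lvm_pool lxdvg default /dev/sdb1 /dev/sdc1 /dev/sdd1
```

Reviewing the printed commands before running them as root is deliberate, since pvcreate on the wrong device destroys whatever is stored there.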


tomp

August 27, 2022, 9:57pm

12

I had a quick search for “LVM for beginners” (as you said this was a new subject for you) and it suggested this:

You basically only need steps 1 and 2 from this guide, and then create the storage pool on top.

In the LVM tab on this page (Linux Containers - LXD - Has been moved to Canonical) there is an example of how to instruct LXD to use an existing custom volume group:

Use the existing LVM volume group called my-pool for pool2:

lxc storage create pool2 lvm source=my-pool
