p4pe

February 3, 2021, 3:28pm

1

Hello everyone, I’m a newbie with LXC and I’m starting to “play” with containers.

For my scenario I want to use Open vSwitch instead of the Linux bridge. I found this topic on Stack Overflow: https://stackoverflow.com/questions/34021070/lxc-with-open-vswitch .

Following the steps there, I changed the config file of my container (named c1), but now I cannot start the container.

The config file (/var/lib/lxc/c1):

# Template used to create this container: /usr/share/lxc/templates/lxc-ubuntu

# Parameters passed to the template:

# Template script checksum (SHA-1): c2e4e142c5a5b7d033c25e2207bd4c500368e0b7

# For additional config options, please look at lxc.container.conf(5)

# Uncomment the following line to support nesting containers:

#lxc.include = /usr/share/lxc/config/nesting.conf

# (Be aware this has security implications)

# Common configuration

lxc.include = /usr/share/lxc/config/ubuntu.common.conf

# Container specific configuration

lxc.rootfs = /var/lib/lxc/c1/rootfs

lxc.rootfs.backend = dir

lxc.utsname = c1

lxc.arch = amd64

# Network configuration

lxc.network.type = veth

#lxc.network.link = lxcbr0

lxc.network.flags = up

lxc.network.hwaddr = 00:16:3e:c0:f3:ad

lxc.network.script.up = /etc/lxc/ifup # Interface up configuration

lxc.network.script.down = /etc/lxc/ifdown # Interface down configuration

lxc.network.veth.pair = lxc0

#lxc.network.hwaddr = 00:16:3e:15:b3:62

lxc.network.ipv4 = 192.168.100.10

The default.conf file (/etc/lxc)

lxc.network.type = veth

lxc.network.link = mybrdige

lxc.network.flags = up

lxc.network.hwaddr = 00:16:3e:xx:xx:xx

I’m kindly asking you for some input

Thank you

stgraber

February 3, 2021, 3:36pm

2

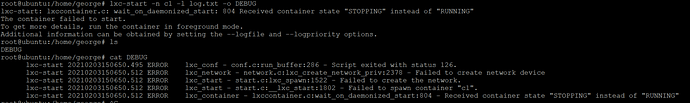

Log output suggests your script may be the problem?

p4pe

February 3, 2021, 4:03pm

4

Maybe yes, but which script?

I have these two scripts.

# cat /etc/lxc/ifup

#!/bin/bash

BRIDGE=switch0

ovs-vsctl --may-exist add-br $BRIDGE

ovs-vsctl --if-exists del-port $BRIDGE $5

ovs-vsctl --may-exist add-port $BRIDGE $5

# cat /etc/lxc/ifdown

#!/bin/bash

ovsBr=switch0

ovs-vsctl --if-exists del-port ${ovsBr} $5
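For context on the `$5` used in both scripts: according to lxc.container.conf(5), LXC calls a network hook script with the container name, the config section (`net`), the execution context (`up` or `down`), the network type (`veth`) and, for veth, the host-side device name as positional arguments. If `$5` arrives empty, the `ovs-vsctl` calls fail. Below is a minimal, more defensive sketch of the up script. The `run` and `attach_port` helpers and the `DRY_RUN` variable are made up for illustration and are not part of LXC or Open vSwitch.

```shell
#!/bin/bash
# Sketch of a more defensive /etc/lxc/ifup.
# Helper names and DRY_RUN are illustrative assumptions, not LXC features.
BRIDGE="${BRIDGE:-switch0}"

# Print the command instead of running it when DRY_RUN=1 (handy for testing).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Attach the host-side veth ($1) to the OVS bridge, failing early if it is missing.
attach_port() {
    if [ -z "$1" ]; then
        echo "ifup: no host-side interface name received" >&2
        return 1
    fi
    run ovs-vsctl --may-exist add-br "$BRIDGE" &&
    run ovs-vsctl --if-exists del-port "$BRIDGE" "$1" &&
    run ovs-vsctl --may-exist add-port "$BRIDGE" "$1"
}

# LXC passes: container name, "net", "up", network type, host-side device name.
if [ "$#" -ge 5 ]; then
    attach_port "$5"
fi
```

Running it by hand with `DRY_RUN=1` and five dummy arguments prints the three `ovs-vsctl` commands without touching the switch, which makes it easier to see what the hook would do.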

p4pe

February 3, 2021, 5:39pm

5

@stgraber I changed lxc.network.link = switch0, which is the name of my OVS bridge (both in default.conf and in the config file of c1).

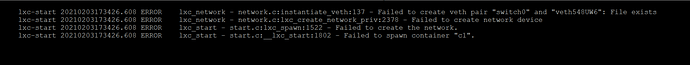

And now I get these errors!

stgraber

February 3, 2021, 5:48pm

6

What version of LXC is that?

stgraber

February 3, 2021, 6:43pm

8

Any chance you can use something a bit more modern?

4.0.x has proper openvswitch support, I have no idea if 2.0 even had any openvswitch support.

stgraber

February 3, 2021, 6:44pm

9

2.0.11 is what you’d get through apt on Ubuntu 16.04 LTS, that’s still supported but only for major security issues at this point. 4.0 as is shipped with 20.04 LTS should give you a much better experience.

p4pe

February 3, 2021, 6:50pm

10

So your suggestion is a fresh install of Ubuntu 20.04, if I’m right?

stgraber

February 3, 2021, 7:15pm

11

That’d certainly be best. Ubuntu 16.04 as you’re using it is going to be end of life in just a couple of months.

Ubuntu 20.04 will get you a modern kernel, LXC and openvswitch so should be considerably better suited for what you’re doing.
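One thing to watch for when carrying the old container config over: the `lxc.network.*` keys used by LXC 2.0 were renamed to indexed `lxc.net.0.*` keys in later releases, so on 4.0 the network section from the first post would look roughly like what the snippet below prints. This is only a sketch: the helper name `write_net_config` is made up, and the bridge name and MAC address are the ones quoted earlier in the thread.

```shell
#!/bin/bash
# Hypothetical helper: print a network section using the newer lxc.net.0.* key names.
write_net_config() {
    bridge="$1"   # OVS bridge to attach to, e.g. switch0
    hwaddr="$2"   # container MAC address, e.g. 00:16:3e:c0:f3:ad
    cat <<EOF
# Network configuration (newer key names, replacing lxc.network.*)
lxc.net.0.type = veth
lxc.net.0.link = $bridge
lxc.net.0.flags = up
lxc.net.0.hwaddr = $hwaddr
EOF
}

write_net_config switch0 00:16:3e:c0:f3:ad
```

Newer LXC releases also ship an `lxc-update-config` script that should be able to convert an existing file, though its result for this particular config is not verified here.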

p4pe

February 3, 2021, 8:08pm

12

I think I have made progress, @stgraber.

I have a fresh 20.04 installation.

Created a container and started it:

sudo lxc-start -n c2 -d --logfile=logs

Created ovs bridge and assigned IP to it:

# ovs-vsctl add-br switch0

# ip addr add 192.168.100.1/24 dev switch0

Showing the OVS bridge (the container is attached to it):

sudo ovs-vsctl show

8cd3e1a6-eaa9-4341-b5c8-677e381c8306
    Bridge switch0
        Port vethlQXvGc
            Interface vethlQXvGc
        Port switch0
            Interface switch0
                type: internal
    ovs_version: "2.13.1"

But the veth interface was not assigned an IP. On the host, ip a shows:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 32:0d:08:dc:37:7c brd ff:ff:ff:ff:ff:ff

inet 83.212.89.157/24 brd 83.212.89.255 scope global eth0

valid_lft forever preferred_lft forever

inet6 fd00:de:ad:14:300d:8ff:fedc:377c/64 scope global dynamic mngtmpaddr

valid_lft 2591888sec preferred_lft 604688sec

inet6 2001:648:2810:1014:300d:8ff:fedc:377c/64 scope global dynamic mngtmpaddr

valid_lft 2591888sec preferred_lft 604688sec

inet6 fe80::300d:8ff:fedc:377c/64 scope link

valid_lft forever preferred_lft forever

3: ovs-system: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether ba:1b:2b:ac:e4:dd brd ff:ff:ff:ff:ff:ff

4: switch0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 72:73:46:75:11:45 brd ff:ff:ff:ff:ff:ff

inet 192.168.100.1/24 scope global switch0

valid_lft forever preferred_lft forever

7: vethlQXvGc@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master ovs-system state UP group default qlen 1000

link/ether fe:5b:04:5d:1a:64 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::fc5b:4ff:fe5d:1a64/64 scope link

valid_lft forever preferred_lft forever

Inside the container

sudo lxc-attach -n c2

root@c2:/# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:16:3e:28:ca:c7 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::216:3eff:fe28:cac7/64 scope link

valid_lft forever preferred_lft forever

root@c2:/#

Why did my container not get an IP?

stgraber

February 3, 2021, 8:15pm

13

Right, looks like you managed to create an OVS bridge and have LXC connect the container to it properly. That bridge is otherwise empty so it’s normal that the DHCP client in the container wouldn’t get an IP.

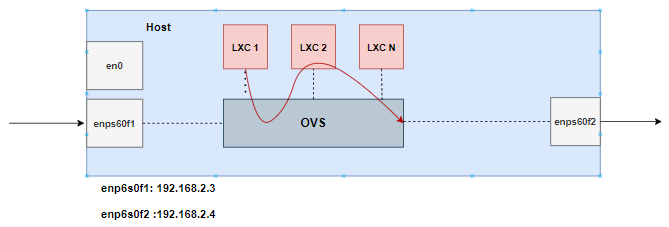

If your intent is to bridge to the outside network, then yeah, you’ll need to add an external interface to your bridge. Doing this will normally prevent you from having normal network configuration on that interface though, so you’ll need to also configure your OS to use the bridge interface for its external networking.
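If, on the other hand, the bridge is only meant to be an internal network, nothing on it answers DHCP, so one option is to give the container a static address through its LXC config. A minimal sketch, assuming the LXC 4.0 key names `lxc.net.0.ipv4.address` and `lxc.net.0.ipv4.gateway`, the default config path for c2, and the 192.168.100.10 address from the first post; the function name is made up:

```shell
#!/bin/bash
# Sketch: give the container a static address on the internal OVS network.
# CONFIG path and addresses are assumptions taken from earlier posts.
CONFIG="${CONFIG:-/var/lib/lxc/c2/config}"

add_static_ipv4() {
    # $1 = container address in CIDR form, $2 = gateway (the bridge address on the host)
    {
        echo "lxc.net.0.ipv4.address = $1"
        echo "lxc.net.0.ipv4.gateway = $2"
    } >> "$CONFIG"
}

# Only act when both arguments are given, e.g.:
#   sudo ./static-ip.sh 192.168.100.10/24 192.168.100.1
if [ "$#" -eq 2 ]; then
    add_static_ipv4 "$1" "$2"
fi
```

The container has to be restarted for the change to apply. Note also that `switch0` shows `state DOWN` in the host's `ip a` output, so it probably needs `ip link set switch0 up` before 192.168.100.1 is reachable from the container.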

p4pe

February 3, 2021, 8:50pm

14

You are right: if I bind the physical interface to the OVS bridge, I will lose connectivity.

But I have limited knowledge as I am at an entry level.

192.168.2.0/24 is an internal network; the host also has the en0 interface, which has a routable IP.

So how do you think I should proceed?

Thank you for your time and your help @stgraber
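One possible way forward that leaves the physical interface alone is to keep `switch0` as a purely internal network and let the host route and NAT for it. A rough sketch, assuming the internal subnet is 192.168.100.0/24 as configured on `switch0` earlier and the uplink is `eth0` as in the `ip a` output (this last post mentions 192.168.2.0/24 and en0 instead, so adjust the variables to match the real setup). The `DRY_RUN` switch and the function names are for illustration only:

```shell
#!/bin/bash
# Sketch: route and masquerade traffic from the internal OVS network via the host.
SUBNET="${SUBNET:-192.168.100.0/24}"   # assumption: network configured on switch0
UPLINK="${UPLINK:-eth0}"               # assumption: host interface with the routable IP
BRIDGE="${BRIDGE:-switch0}"

# Print the command instead of running it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

setup_nat() {
    # Bring the bridge's internal port up, enable forwarding, then NAT outbound traffic.
    run ip link set "$BRIDGE" up &&
    run sysctl -w net.ipv4.ip_forward=1 &&
    run iptables -t nat -A POSTROUTING -s "$SUBNET" -o "$UPLINK" -j MASQUERADE
}

# Run for real only when asked to, e.g.: sudo ./nat.sh apply
if [ "${1:-}" = "apply" ]; then
    setup_nat
fi
```

None of these settings survive a reboot as written, and the container still needs an address on the internal subnet (static, or from a DHCP server listening on `switch0`) with 192.168.100.1 as its gateway.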