Sure!

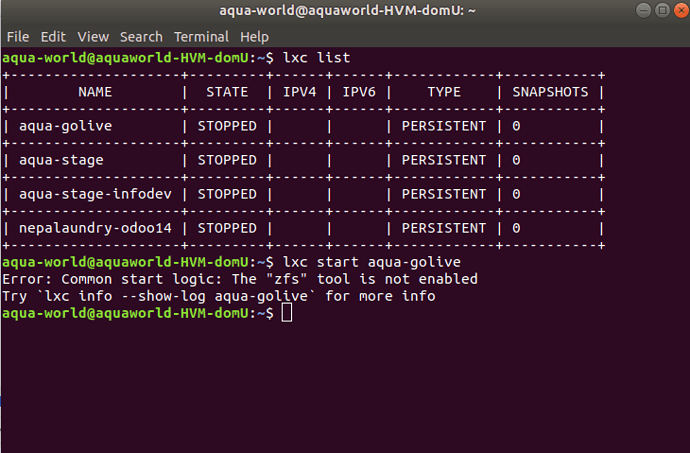

The output of `lxc start aqua-golive --debug` is:

DBUG[09-03|00:43:58] Connecting to a local LXD over a Unix socket

DBUG[09-03|00:43:58] Sending request to LXD method=GET url=http://unix.socket/1.0 etag=

DBUG[09-03|00:43:58] Got response struct from LXD

DBUG[09-03|00:43:58]

{

"config": {},

"api_extensions": [

"storage_zfs_remove_snapshots",

"container_host_shutdown_timeout",

"container_stop_priority",

"container_syscall_filtering",

"auth_pki",

"container_last_used_at",

"etag",

"patch",

"usb_devices",

"https_allowed_credentials",

"image_compression_algorithm",

"directory_manipulation",

"container_cpu_time",

"storage_zfs_use_refquota",

"storage_lvm_mount_options",

"network",

"profile_usedby",

"container_push",

"container_exec_recording",

"certificate_update",

"container_exec_signal_handling",

"gpu_devices",

"container_image_properties",

"migration_progress",

"id_map",

"network_firewall_filtering",

"network_routes",

"storage",

"file_delete",

"file_append",

"network_dhcp_expiry",

"storage_lvm_vg_rename",

"storage_lvm_thinpool_rename",

"network_vlan",

"image_create_aliases",

"container_stateless_copy",

"container_only_migration",

"storage_zfs_clone_copy",

"unix_device_rename",

"storage_lvm_use_thinpool",

"storage_rsync_bwlimit",

"network_vxlan_interface",

"storage_btrfs_mount_options",

"entity_description",

"image_force_refresh",

"storage_lvm_lv_resizing",

"id_map_base",

"file_symlinks",

"container_push_target",

"network_vlan_physical",

"storage_images_delete",

"container_edit_metadata",

"container_snapshot_stateful_migration",

"storage_driver_ceph",

"storage_ceph_user_name",

"resource_limits",

"storage_volatile_initial_source",

"storage_ceph_force_osd_reuse",

"storage_block_filesystem_btrfs",

"resources",

"kernel_limits",

"storage_api_volume_rename",

"macaroon_authentication",

"network_sriov",

"console",

"restrict_devlxd",

"migration_pre_copy",

"infiniband",

"maas_network",

"devlxd_events",

"proxy",

"network_dhcp_gateway",

"file_get_symlink",

"network_leases",

"unix_device_hotplug",

"storage_api_local_volume_handling",

"operation_description",

"clustering",

"event_lifecycle",

"storage_api_remote_volume_handling",

"nvidia_runtime",

"candid_authentication",

"candid_config",

"candid_config_key",

"usb_optional_vendorid",

"id_map_current"

],

"api_status": "stable",

"api_version": "1.0",

"auth": "trusted",

"public": false,

"auth_methods": [

"tls"

],

"environment": {

"addresses": [],

"architectures": [

"x86_64",

"i686"

],

"certificate": "-----BEGIN CERTIFICATE-----\nMIIFZTCCA02gAwIBAgIRAMlH9NNlKLvdI3+u7ijW5WQwDQYJKoZIhvcNAQELBQAw\nQDEcMBoGA1UEChMTbGludXhjb250YWluZXJzLm9yZzEgMB4GA1UEAwwXcm9vdEBh\ncXVhd29ybGQtSFZNLWRvbVUwHhcNMjEwNjI2MDc1NzE2WhcNMzEwNjI0MDc1NzE2\nWjBAMRwwGgYDVQQKExNsaW51eGNvbnRhaW5lcnMub3JnMSAwHgYDVQQDDBdyb290\nQGFxdWF3b3JsZC1IVk0tZG9tVTCCAiIwDQYJKoZIhvcNAQEBBQADggIPADCCAgoC\nggIBANBmVQtJLKTQMfrIc7viViQ2n4lQBfCH1w9Tidm90HsBepdc2mRkOSxfGGK8\nCLR0TyLO6WClpo0Pc5zfR24Otx71ON2CqBEOPTKeQwYqWqyQZtpRudTm6tmHUTMD\nthZ15LZzfHJn+G6XOVlRxOdHxocRaTWxAJYHCCpnIhC4agRG3v2ycPrM9qlikiRm\n5XQtraTGxeM6pXv4BIT+bpKXaGhxStTGFDk0/8qZ+b7B7KCCwnpTc9yI/7G5XhHM\nJgqlYj1tm4TtjoluKRTDRDqq17vs5WqQtbXUhzmKX2gelmfaFNMalq9AlzVaqJfQ\nG3ew+xyIGlbQtS+UXIf+jAXW6RdwuOSR9vM7yCNBaZUR3i9SIbm1hH2DOvLn7zzN\nHxcm2agAWZ80Dzh8U4zHx86od6JHbbnWKOy0XMp8m/vCHxUIFVPamPPMM24ku41L\nFatkN/WlMydjqd1ovR0lvokel4MuMVgcP/vM7q98ZV+I5ariqrT0iktj3DcuJIs4\n9wRb6RZl3Dk9tNXaFQH7Zjav5lykXeZQHOC+A46Ump7GZZr9tMnfFyPTKenExGUk\nx8j1BJbsCg71PcgCqESVQRFaoO017KBldiNhxNCLVtyQcTIZM3dDZJZP+afoIhzP\n2P9D3W3tLUMn6eNu45MK6NDFfofSgtVs0TSlm5pqVql44r9FAgMBAAGjWjBYMA4G\nA1UdDwEB/wQEAwIFoDATBgNVHSUEDDAKBggrBgEFBQcDATAMBgNVHRMBAf8EAjAA\nMCMGA1UdEQQcMBqCEmFxdWF3b3JsZC1IVk0tZG9tVYcErB0BSzANBgkqhkiG9w0B\nAQsFAAOCAgEATvr5raOeS64a0N7qRB1o8s7rxa83kUlKPKynyFcnz5wCX/BEDaWS\nRKGi7X5OdExv7pL0r957JBXxmN8274iyRwaBWzJ4LrKGTcDPlbNyfAP7auztkM0O\nLif/8zkCf1mYSLDQwHnuUK2RnekVf8Ns3YZUNpyqNEMUnMo2fgchtruj4QHIw5Kg\nTOsW6G/mPD12M6Wr7NG2QU4FUrTkqEewjpmtViaGtGTSm7covXMDbW2r5gVhim5C\n2gR7efbY3x5weODTz99DxxEHZtQ/qQKD78clGNOiTw+X+a7uaq5hZGDTdoB8ENzJ\nWPlRup4Vna1DVm2YD3eLd9gvyaQ+ZoyYQwOqTAJbcNKpAs9jxnR5jDWteqXzVRrn\nRO1o/x/AK6+pFUU2GI2q8FoptEjBbvsdkWn1yf5XohJybsQNEPjARrJnX3hSOJdv\nrv5oKEGXmhq73+JD27H6mI7Ex1MDM7myCd28s7nnHV4ltaIQCtoPdhdkdGC5TtvH\nzk1E1lHm99g0sZgJ0fXQMfFNeXntrCOA3bD9jTG5MpFHrc1q128u7As4tgzp1gvI\nzN3Lfk6XOQoZ3IQZNhithlL+gA3qAJLhPzPrLtM5v3drLA/vZWihmTCndPLqMNCB\n5/O2uRDy4Iguy+/oIrtQive63wLmaABpFdfV6gRA0tQ1A7d8suNd+2Y=\n-----END CERTIFICATE-----\n",

"certificate_fingerprint": "a2a2f2afa3d6bb312feca2349aadf3eb34ec5de6b005237ef10c6fa71b4ff3d9",

"driver": "lxc",

"driver_version": "3.0.4",

"kernel": "Linux",

"kernel_architecture": "x86_64",

"kernel_features": null,

"kernel_version": "5.11.0-27-generic",

"lxc_features": null,

"project": "",

"server": "lxd",

"server_clustered": false,

"server_name": "aquaworld-HVM-domU",

"server_pid": 7569,

"server_version": "3.0.4",

"storage": "",

"storage_version": ""

}

}

DBUG[09-03|00:43:58] Sending request to LXD method=GET url=http://unix.socket/1.0/containers/aqua-golive etag=

DBUG[09-03|00:43:58] Got response struct from LXD

DBUG[09-03|00:43:58]

{

"architecture": "x86_64",

"config": {

"image.architecture": "x86_64",

"image.description": "Ubuntu 18.04 LTS server (20190424)",

"image.os": "ubuntu",

"image.release": "bionic",

"volatile.base_image": "b20f0cac0892cee029e5c65e8a36c7684e0d685bd0b22f839af5fd81a51b5f16",

"volatile.eth0.hwaddr": "00:16:3e:cb:59:03",

"volatile.idmap.base": "0",

"volatile.idmap.current": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.power": "STOPPED"

},

"devices": {},

"ephemeral": false,

"profiles": [

"default"

],

"stateful": false,

"description": "",

"created_at": "2021-06-27T21:50:45+05:45",

"expanded_config": {

"image.architecture": "x86_64",

"image.description": "Ubuntu 18.04 LTS server (20190424)",

"image.os": "ubuntu",

"image.release": "bionic",

"volatile.base_image": "b20f0cac0892cee029e5c65e8a36c7684e0d685bd0b22f839af5fd81a51b5f16",

"volatile.eth0.hwaddr": "00:16:3e:cb:59:03",

"volatile.idmap.base": "0",

"volatile.idmap.current": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.idmap.next": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.idmap": "[{\"Isuid\":true,\"Isgid\":false,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000},{\"Isuid\":false,\"Isgid\":true,\"Hostid\":1000000,\"Nsid\":0,\"Maprange\":1000000000}]",

"volatile.last_state.power": "STOPPED"

},

"expanded_devices": {

"eth0": {

"name": "eth0",

"nictype": "bridged",

"parent": "lxdbr0",

"type": "nic"

},

"root": {

"path": "/",

"pool": "default",

"type": "disk"

}

},

"name": "aqua-golive",

"status": "Stopped",

"status_code": 102,

"last_used_at": "2021-06-27T21:52:26.80536393+05:45",

"location": ""

}

DBUG[09-03|00:43:58] Connected to the websocket

DBUG[09-03|00:43:58] Sending request to LXD method=PUT url=http://unix.socket/1.0/containers/aqua-golive/state etag=

DBUG[09-03|00:43:58]

{

"action": "start",

"timeout": 0,

"force": false,

"stateful": false

}

DBUG[09-03|00:43:58] Got operation from LXD

DBUG[09-03|00:43:58]

{

"id": "671fdc50-cfd9-4b7c-a389-59ac47f70dc2",

"class": "task",

"description": "Starting container",

"created_at": "2021-09-03T00:43:58.659531156+05:45",

"updated_at": "2021-09-03T00:43:58.659531156+05:45",

"status": "Running",

"status_code": 103,

"resources": {

"containers": [

"/1.0/containers/aqua-golive"

]

},

"metadata": null,

"may_cancel": false,

"err": "",

"location": "none"

}

DBUG[09-03|00:43:58] Sending request to LXD method=GET url=http://unix.socket/1.0/operations/671fdc50-cfd9-4b7c-a389-59ac47f70dc2 etag=

DBUG[09-03|00:43:58] Got response struct from LXD

DBUG[09-03|00:43:58]

{

"id": "671fdc50-cfd9-4b7c-a389-59ac47f70dc2",

"class": "task",

"description": "Starting container",

"created_at": "2021-09-03T00:43:58.659531156+05:45",

"updated_at": "2021-09-03T00:43:58.659531156+05:45",

"status": "Running",

"status_code": 103,

"resources": {

"containers": [

"/1.0/containers/aqua-golive"

]

},

"metadata": null,

"may_cancel": false,

"err": "",

"location": "none"

}

Error: Common start logic: The "zfs" tool is not enabled

Try `lxc info --show-log aqua-golive` for more info
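
One thing that stands out in the debug output above is that the `environment` object from `GET /1.0` reports empty `storage` and `storage_version` fields, even though the container's root disk uses the `default` pool. Below is a minimal sketch for pulling those fields out of the server info. The excerpt is copied from the log above; the helper name `storage_summary` is hypothetical, and reading an empty `storage` field as "no storage driver loaded" is my assumption, not something the log states.

```python
import json

# Excerpt of the GET /1.0 response from the debug log above.
# Only the fields relevant here are kept; values are copied from the log.
SERVER_INFO = """
{
  "environment": {
    "driver": "lxc",
    "driver_version": "3.0.4",
    "kernel_version": "5.11.0-27-generic",
    "server_version": "3.0.4",
    "storage": "",
    "storage_version": ""
  }
}
"""


def storage_summary(raw: str) -> dict:
    """Return the storage-related fields of an LXD server-info JSON document.

    Hypothetical helper for illustration; not part of LXD or its client.
    """
    env = json.loads(raw)["environment"]
    storage = env.get("storage", "")
    return {
        "storage": storage,
        "storage_version": env.get("storage_version", ""),
        # Assumption: an empty "storage" string means no driver was reported.
        "driver_reported": bool(storage),
    }


if __name__ == "__main__":
    print(storage_summary(SERVER_INFO))
```

If `driver_reported` comes back false, that would at least be consistent with the `The "zfs" tool is not enabled` error, but the container log from `lxc info --show-log aqua-golive` is still the place to confirm it.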