| Project | LXD |
| Status | Implemented |
| Release | 5.15 |
| Internal ID | LX042 |

Abstract

This feature introduces a new limits.cpu.nodes config key, which takes a range or comma-separated list of NUMA nodes.

When set, any dynamic value of limits.cpu will trigger our scheduler to only place the instance CPUs within the configured set of NUMA nodes.

Rationale

Memory access is fastest when a CPU accesses its local memory. NUMA nodes are CPU/memory pairs: typically, a CPU socket and its closest memory banks form a NUMA node. When a CPU needs memory that belongs to another NUMA node, it cannot access it directly and must go through the CPU that owns that memory. When an application cannot be served from its local NUMA node, the resulting performance degradation can be massive.

To avoid such cases, we would like to give the user the option to restrict instance CPUs to a specified set of NUMA nodes. By specifying NUMA nodes that are close to each other, the user can get an instance with optimized memory locality or processor load.

Specification

Design

When the specified limits.cpu is dynamic (i.e., a number of CPUs to use) rather than pinned (i.e., a list or range of CPU thread IDs), we read the value of limits.cpu.nodes, which is either a list of NUMA node IDs or a range of NUMA node IDs.
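As an illustration of the value format, here is a minimal Python sketch that expands a limits.cpu.nodes value into a list of NUMA node IDs. The function name `parse_node_ids` is hypothetical and is not taken from the LXD code base. Accepting a mix of ranges and single IDs in one value (for example 0-1,3) is an assumption of this sketch; the spec only mentions a range or a comma-separated list.

```python
def parse_node_ids(value: str) -> list[int]:
    """Expand a value such as "0", "0,1" or "0-1" into sorted, unique node IDs.

    Hypothetical helper for illustration only; not the LXD implementation.
    """
    ids: set[int] = set()
    for chunk in value.split(","):
        chunk = chunk.strip()
        if not chunk:
            raise ValueError(f"empty entry in {value!r}")
        if "-" in chunk:
            # A range such as "0-3" covers both of its bounds.
            low_text, high_text = chunk.split("-", 1)
            low, high = int(low_text), int(high_text)
            if low > high:
                raise ValueError(f"descending range {chunk!r}")
            ids.update(range(low, high + 1))
        else:
            ids.add(int(chunk))
    return sorted(ids)


print(parse_node_ids("0"))    # [0]
print(parse_node_ids("0,1"))  # [0, 1]
print(parse_node_ids("0-1"))  # [0, 1]
```

Malformed input (an empty entry, a non-integer, or a descending range) raises ValueError in this sketch; how LXD itself validates the key is not covered here.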

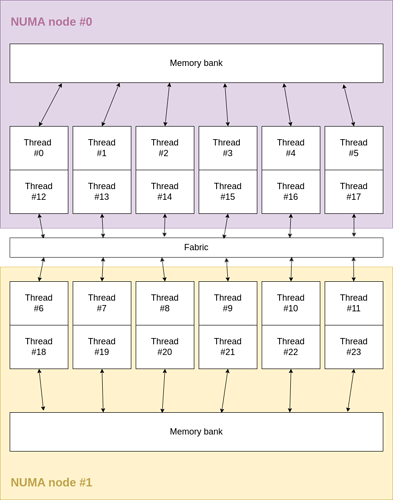

For example, here is a NUMA configuration:

$ lscpu | grep -i numa

NUMA node(s): 2

NUMA node0 CPU(s): 0-5,12-17

NUMA node1 CPU(s): 6-11,18-23

Here is an associated simplified image of the CPU topology:

With this example, setting limits.cpu.nodes=0 restricts load-balancing to CPUs 0-5 and 12-17. Setting limits.cpu.nodes=0,1 or limits.cpu.nodes=0-1 (more generally limits.cpu.nodes=0-x, where x is the highest NUMA node ID) makes all CPUs in this example eligible for load-balancing.
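The mapping from a NUMA node selection to eligible CPUs can be sketched in Python using the topology from the lscpu output above. The names `NUMA_TOPOLOGY`, `expand_cpu_list` and `candidate_cpus` are hypothetical, and hard-coding the topology is an assumption made to keep the sketch self-contained; a real implementation would read it from the host.

```python
# Topology copied from the lscpu example: NUMA node ID -> CPU list string.
NUMA_TOPOLOGY = {0: "0-5,12-17", 1: "6-11,18-23"}


def expand_cpu_list(spec: str) -> list[int]:
    """Expand an lscpu-style CPU list such as "0-5,12-17" into CPU IDs."""
    cpus: list[int] = []
    for chunk in spec.split(","):
        if "-" in chunk:
            low, high = chunk.split("-", 1)
            cpus.extend(range(int(low), int(high) + 1))
        else:
            cpus.append(int(chunk))
    return cpus


def candidate_cpus(topology: dict[int, str], nodes: list[int]) -> list[int]:
    """Return the CPUs eligible for placement, grouped by ascending node ID."""
    cpus: list[int] = []
    for node in sorted(set(nodes)):
        if node not in topology:
            raise ValueError(f"unknown NUMA node {node}")
        cpus.extend(expand_cpu_list(topology[node]))
    return cpus


print(candidate_cpus(NUMA_TOPOLOGY, [0]))
# [0, 1, 2, 3, 4, 5, 12, 13, 14, 15, 16, 17]
print(len(candidate_cpus(NUMA_TOPOLOGY, [0, 1])))
# 24
```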

- What happens if limits.cpu is a number (let's call it n) greater than the number of CPUs in the configured set of NUMA nodes? We chose to pin all the CPUs contained in the NUMA node set; the remainder is load-balanced as usual (not pinned).

- If n is less than or equal to the number of CPUs in the configured set of NUMA nodes, we pin the NUMA CPUs in ascending order ((NUMA_Node_0.NUMA_CPU_0 -> ... -> NUMA_Node_0.NUMA_CPU_K) -> ... -> (NUMA_Node_L.NUMA_CPU_0 -> ... -> NUMA_Node_L.NUMA_CPU_M)).
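The two cases above can be sketched in Python as follows. The function `plan_pinning` and its return shape are hypothetical. The sketch reads "number of elements" as the number of CPUs in the selected NUMA nodes, and it ignores any load already placed on those CPUs, so it only illustrates the ordering and overflow rule rather than the actual scheduler.

```python
def plan_pinning(n: int, node_cpus: dict[int, list[int]]) -> tuple[list[int], int]:
    """Split a request for n CPUs into pinned CPUs and an unpinned remainder.

    node_cpus maps each selected NUMA node ID to its CPU IDs.
    Returns (CPUs to pin, number of CPUs left to normal load-balancing).
    """
    # Ascending order: nodes by ID, then CPUs by ID within each node.
    ordered = [cpu for node in sorted(node_cpus) for cpu in sorted(node_cpus[node])]
    pinned = ordered[:n]                 # n <= len(ordered): the first n CPUs
    remainder = max(0, n - len(ordered)) # n > len(ordered): the overflow is not pinned
    return pinned, remainder


node0 = {0: [0, 1, 2, 3, 4, 5, 12, 13, 14, 15, 16, 17]}

print(plan_pinning(4, node0))
# ([0, 1, 2, 3], 0)
print(plan_pinning(14, node0))
# ([0, 1, 2, 3, 4, 5, 12, 13, 14, 15, 16, 17], 2)
```

In the second call, limits.cpu=14 exceeds the 12 CPUs of node 0, so all 12 are pinned and the remaining 2 are left to regular load-balancing.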

API changes

- New limits.cpu.nodes config key, which takes a range or comma-separated list of integers (each integer being a NUMA node identifier)

CLI changes

No CLI changes.

Database changes

No database changes.