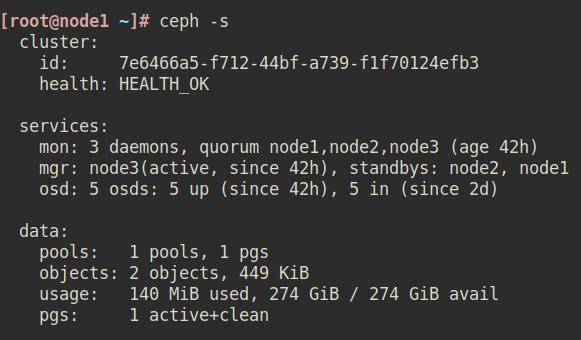

I’ve been experimenting with `microceph` and, more briefly, with `cephadm` (with its services running in `podman`) on a 5-node “low end” cluster. These details are probably most interesting for people whose nodes have a single disk but who still want to run ceph. TL;DR: I’m going to be using `microceph`, for various reasons:

- For your cluster you want at least 2 x NICs, ideally with a 10 Gb or faster internal network for `ceph`
- 5-node clusters will perform better than 3-node clusters
- `microceph` uses around 1.5 GB of RAM on monitor nodes & 500 MB on the other nodes
- `microceph` clusters reboot much faster (less than 10 seconds on nvme) than `cephadm` nodes (the services in `podman` took much longer to stop, 30 to 60 seconds or so)
- `cephadm` uses a lot more disk (as it runs a complete CentOS Stream 8 system in each container); RAM usage was similar to `microceph`
- `cephadm` will not install directly onto partitions; it requires `lvm` LVs
- To install: install `podman`, then `apt install cephadm --no-install-recommends` (to avoid pulling in `docker`). Ubuntu 23.10 now gives you `podman` v4 from the official repos. You probably want to use `docker` with `cephadm` though: my `cephadm` cluster never became healthy (`mgr` kept crashing, possibly due to using `podman`?)
- Rather than going by the `microceph` docs, use `microceph init` to initialise every node so you can choose the specific IP address to run `ceph` on (otherwise by default it will use your public interfaces)
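To find the address to enter at the `microceph init` prompt, here is a minimal sketch assuming the internal ceph network uses addresses starting with `10.10.10.` (a placeholder prefix, swap in your own). The hypothetical `pick_ceph_ip` function reads the one-line-per-address output of `ip -o -4 addr show` and prints the first IPv4 address with that prefix.

```sh
#!/bin/sh
# pick_ceph_ip PREFIX (hypothetical helper)
# Reads `ip -o -4 addr show` output on stdin, where field 4 is ADDRESS/PREFIXLEN,
# and prints the first address that starts with PREFIX.
# Keep a trailing dot in PREFIX so 10.10.10. does not also match 10.10.100.x
pick_ceph_ip() {
    awk -v p="$1" '$3 == "inet" && index($4, p) == 1 {
        split($4, a, "/")   # drop the /24 style prefix length
        print a[1]
        exit
    }'
}
```

Usage on a node: `ip -o -4 addr show | pick_ceph_ip 10.10.10.`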

These are the basic steps I use to get `microceph` running on disk partitions (the partition fix comes from the original discussion on GitHub):

```
Microceph notes
===============

# opening outbound destination ports
# tcp 3300 6789 6800-6850 7443
# 3300 is the new messenger v2 port (tcp 6789 is v1)
----------------------------------------------------

# put apparmor in complain mode so microceph init works
-------------------------------------------------------
echo -n complain > /sys/module/apparmor/parameters/mode
```
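The port list above can be turned into firewall rules. This is a sketch assuming `ufw` is the firewall and `10.10.10.0/24` is the internal ceph network (both assumptions). It only prints the commands so you can review them before running them as root. `ufw` writes a port range as `6800:6850`, and a range needs an explicit `proto`.

```sh
#!/bin/sh
# Print (do not run) ufw rules for the ceph / microceph ports.
# CEPH_NET is an assumption: set it to your internal ceph subnet.
CEPH_NET="${CEPH_NET:-10.10.10.0/24}"
# ports from the notes, with the range written the ufw way
CEPH_PORTS="3300 6789 6800:6850 7443"

ceph_ufw_rules() {
    for port in $CEPH_PORTS; do
        # outbound: this node connecting to the ceph ports on its peers
        echo "ufw allow out proto tcp to $CEPH_NET port $port"
        # inbound: peers connecting to the same ports on this node
        echo "ufw allow in proto tcp from $CEPH_NET to any port $port"
    done
}

ceph_ufw_rules
```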

```
# installation
---------------
snap install microceph --channel reef/stable
snap refresh --hold microceph
```
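The installation has to be repeated on every node. Here is a sketch of doing that over ssh. It assumes passwordless ssh with sudo and uses hypothetical host names `node1` to `node5`. It defaults to a dry run that only prints the commands.

```sh
#!/bin/sh
# Hypothetical host names: override with NODES="host-a host-b ..."
NODES="${NODES:-node1 node2 node3 node4 node5}"
INSTALL='sudo snap install microceph --channel reef/stable && sudo snap refresh --hold microceph'

install_on_nodes() {
    for host in $NODES; do
        if [ "${DRY_RUN:-1}" = 1 ]; then
            echo "ssh $host \"$INSTALL\""   # dry run: just show what would run
        else
            ssh "$host" "$INSTALL"
        fi
    done
}

install_on_nodes   # set DRY_RUN=0 to really run it
```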

```
# allow OSD to install on partitions
-------------------------------------------------------
systemctl edit snap.microceph.osd --drop-in=override

# put this in the drop-in (ExecStartPre= is only valid under [Service])
[Service]
ExecStartPre=/path/to/osdfix
-------------------------------------------------------
```
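`systemctl edit` opens an editor. This non-interactive sketch writes the same drop-in file instead. It assumes the usual `/etc/systemd/system` location and keeps the `/path/to/osdfix` placeholder from the notes. Point `UNIT_DIR` at a temporary directory to try it safely, and run `systemctl daemon-reload` after writing the real file.

```sh
#!/bin/sh
# UNIT_DIR and OSDFIX are assumptions / placeholders: adjust before real use
UNIT_DIR="${UNIT_DIR:-/etc/systemd/system}"
OSDFIX="${OSDFIX:-/path/to/osdfix}"

write_osd_dropin() {
    dir="$UNIT_DIR/snap.microceph.osd.service.d"
    mkdir -p "$dir" || return 1
    # ExecStartPre= is a [Service] setting; systemd ignores it under [Unit]
    printf '[Service]\nExecStartPre=%s\n' "$OSDFIX" > "$dir/override.conf"
}
```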

```sh
#!/bin/sh
# osdfix: the script I use to fix the osd service
# * There are actually 3 snap profiles that mention virtio
#   (see commented out $PROFILES & $FILES below)
# * I found only the osd profile needs changing for partitions to work.
# * Just change $ADD to a rule that makes sense for your disks
# * As sed is inserting a line you don't need to escape forward slashes in $ADD

TAG="Cephy"
ADD="/dev/vda[4-9] rwk,\t\t\t\t\t # $TAG"
SEARCH="/dev/vd\[a-z\]"
#PROFILES="/var/lib/snapd/apparmor/profiles/snap.microceph*"
#FILES=$(grep -l "$SEARCH" $PROFILES)
FILES="/var/lib/snapd/apparmor/profiles/snap.microceph.osd"

for file in $FILES; do
    if ! grep -q "$TAG" "$file"; then
        # insert the new rule above the first existing virtio rule
        line=$(grep -n "$SEARCH" "$file" | head -n 1 | cut -d : -f 1)
        if [ -z "$line" ]; then
            # with no line number sed would insert before every line
            echo "No virtio rule found in: $file" >&2
            continue
        fi
        sed -i "$line i $ADD" "$file"
        echo "Reloading: $file"
        apparmor_parser -r "$file"
    else
        echo "Already configured: $file"
    fi
done

exit 0
```
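Before pointing the script at the real profile, you can try the same `grep`/`sed` step on a throwaway file. In this sketch, the three profile lines are made up to resemble a virtio rule; they are not copied from snapd. GNU `sed` is assumed (for `-i` and the `\t` escapes in `$ADD`).

```sh
#!/bin/sh
TAG="Cephy"
ADD="/dev/vda[4-9] rwk,\t\t\t\t\t # $TAG"
SEARCH="/dev/vd\[a-z\]"

# made-up stand-in for /var/lib/snapd/apparmor/profiles/snap.microceph.osd
profile=$(mktemp)
printf '%s\n' '# virtio disks' '/dev/vd[a-z] rwk,' '/dev/vd[a-z][1-9]* rwk,' > "$profile"

# same steps as osdfix: first matching line, then insert $ADD above it
line=$(grep -n "$SEARCH" "$profile" | head -n 1 | cut -d : -f 1)
sed -i "$line i $ADD" "$profile"
cat "$profile"   # the new rule should now be line 2; delete $profile afterwards
```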

```
-----------------------------------------------------------------------------------
# first node: say yes to cluster & add names of additional nodes to see join tokens
-----------------------------------------------------------------------------------
microceph init

# example partition paths (for a VPS)
-------------------------------------
/dev/disk/by-path/virtio-pci-0000:00:05.0-part4

# additional nodes: say no to cluster & provide the token from the previous step
-----------------------------------------------------------------------
microceph init
```
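The AppArmor rule in `osdfix` only covers specific device names, so it is worth checking that the `by-path` link you give `microceph init` resolves to one of them. This is a sketch: the hypothetical `covered_by_rule` function mirrors the `/dev/vda[4-9]` example from `$ADD`, so change the pattern to match your own rule. It assumes GNU `readlink -f`.

```sh
#!/bin/sh
# covered_by_rule LINK (hypothetical helper)
# Resolves a /dev/disk/by-path/... link and checks the real device name
# against the same glob used in $ADD of the osdfix script.
covered_by_rule() {
    dev=$(readlink -f "$1") || return 1
    case "$dev" in
        /dev/vda[4-9]) echo "$1 -> $dev: covered" ;;
        *) echo "$1 -> $dev: NOT covered by the osdfix rule" >&2; return 1 ;;
    esac
}

# usage:
# covered_by_rule /dev/disk/by-path/virtio-pci-0000:00:05.0-part4
```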

```
------------------------------------------------------------------------------------
# my previous microceph testing - long story short:
# the defaults give good performance
# with an internal network for ceph no need to go crazy on security
https://discuss.linuxcontainers.org/t/introducing-microceph/15457/47?u=itoffshore
------------------------------------------------------------------------------------
```

```
# useful commands
-----------------
microceph disk list
microceph cluster list
microceph cluster config list
ceph osd lspools
ceph osd tree
```
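After adding OSDs, it can help to wait until the cluster reports healthy before moving on. This sketch uses `ceph health`, which is not in the list above but is a standard ceph command whose output begins with `HEALTH_OK`, `HEALTH_WARN` or `HEALTH_ERR`. The retry count, the delay and the `CEPH` override are assumptions. The override exists only so the loop can be tried without a cluster.

```sh
#!/bin/sh
# wait_for_health_ok [TRIES] (hypothetical helper)
# Polls `ceph health` until it starts with HEALTH_OK; returns 1 after TRIES attempts.
wait_for_health_ok() {
    tries=${1:-30}
    while [ "$tries" -gt 0 ]; do
        status=$(${CEPH:-ceph} health 2>/dev/null)
        case "$status" in
            HEALTH_OK*) echo "$status"; return 0 ;;
        esac
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] && sleep "${DELAY:-10}"
    done
    echo "still not healthy: $status" >&2
    return 1
}
```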

Hopefully these notes save people some time:

- I found `microceph` completely stable, running for weeks on end (until I thought it was a good idea to try `cephadm`)
- I also upgraded `microceph` from quincy to reef with zero problems

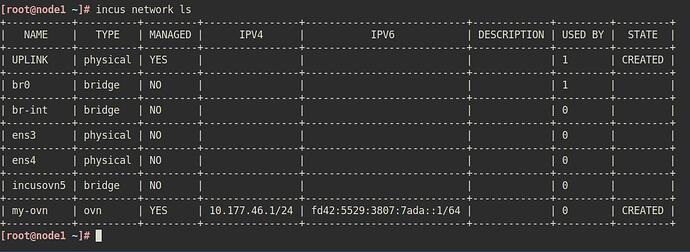

I will be continuing with Incus & ovn-central. I did also try microovn, but it seems better suited to use alongside microcloud.

I got the cluster uplink network running without too much trouble. I just need to figure out how to give each chassis a memorable name (as microovn does by default).