Hi all. I’m currently testing an Incus cluster setup in some VMs. My host OS is NixOS. I want a cluster with a set of shared networks that are not NATed, so that guests are directly accessible from outside, while still using Incus’s DNS and DHCP features. I got all of this working on a single node as well as in a cluster, but the cluster setup feels a little janky, and I wanted to check whether I’m missing something. There aren’t many addresses involved here, but for clarity I’ll give the addresses from the subnet I’ve been testing with, 10.10.4.0/24.

My Incus config is extremely barebones:

config:
  cluster.https_address: 10.10.0.230:8443
  core.dns_address: 10.10.0.230:53
  core.https_address: 10.10.0.230:8443

First of all, I’m coming from Proxmox, and I have noticed I often try to do things in a Proxmox way before discovering the Incus way of doing things, so apologies if I’ve missed something obvious.

I have VLAN interfaces set up on the host which are unconfigured. In Incus, I created a bridge interface for each of these VLAN interfaces, and at first left the bridges unconfigured. This worked: instances were able to get a DHCP lease from the DHCP server on my router (10.10.4.1), and they were reachable from, and had access to, my network. Then I tried getting the Incus DNS integration to work. I turned off my router’s DHCP server on that network and re-enabled ipv4.dhcp on the bridge. Suddenly, the instances wouldn’t get an address. I checked with lsof -n -i :67, and nothing was bound. After searching around and finding nothing, I tried giving the bridge interface an IP address (10.10.4.2). Immediately the DHCP server started, the instances got addresses, and DNS records were populated and handed off to my DNS server.

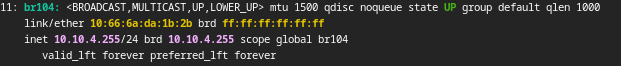

This is all well and good, except for when I want to add another node to my cluster. Now both nodes have the same IP address on their bridge interfaces. I can’t think of any specific problem this would cause, but it doesn’t seem ideal. One idea I tried was setting the address of the bridge to the broadcast address of that subnet, 10.10.4.255. That still works, but it seems like a very hacky “fix”.
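To make it concrete why this feels hacky to me, here is a quick sanity check using Python's standard ipaddress module. It is only a sketch of the addressing arithmetic, not anything Incus itself does, and the variable names are my own.

```python
import ipaddress

# The subnet I've been testing with, and the address I put on the bridge.
subnet = ipaddress.ip_network("10.10.4.0/24")
bridge = ipaddress.ip_interface("10.10.4.255/24")

# True if the bridge address is the subnet's directed broadcast address.
is_broadcast = bridge.ip == subnet.broadcast_address

# hosts() yields only assignable host addresses, so it skips the
# network address (.0) and the broadcast address (.255).
is_usable_host = bridge.ip in list(subnet.hosts())

print("bridge address:", bridge.ip)
print("is broadcast address:", is_broadcast)
print("is a normal host address:", is_usable_host)
```

So the address I ended up with is one that would not normally be assigned to a host at all, which is why I am unsure whether relying on it is sound.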

Network config as of now:

config:
  dns.zone.forward: dev.dfsek.com
  ipv4.address: 10.10.4.255/24
  ipv4.dhcp.gateway: 10.10.4.1
  ipv4.dhcp.ranges: 10.10.4.8-10.10.4.99
  ipv4.nat: "false"
  ipv6.address: none
  ipv6.dhcp: "false"
  ipv6.nat: "false"
  security.acls: test,test-in
description: ""
name: br104
type: bridge
used_by:
- /1.0/instances/test
- /1.0/instances/test2
- /1.0/profiles/net_dev
managed: true
status: Created
locations:
- incus-amd64
- incus2-amd64
project: default
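For completeness, a small check of the DHCP range against the other addresses mentioned above, again with Python's ipaddress module. This is just a sketch to show the numbers line up; the helper in_pool is hypothetical and only exists for this illustration.

```python
import ipaddress

subnet = ipaddress.ip_network("10.10.4.0/24")
gateway = ipaddress.ip_address("10.10.4.1")       # ipv4.dhcp.gateway (my router)
bridge_addr = ipaddress.ip_address("10.10.4.255")  # ipv4.address on br104

# Parse the ipv4.dhcp.ranges value from the network config.
start_text, end_text = "10.10.4.8-10.10.4.99".split("-")
start = ipaddress.ip_address(start_text)
end = ipaddress.ip_address(end_text)

# Number of addresses in the pool, counting both endpoints.
pool_size = int(end) - int(start) + 1

# Both ends of the pool should sit inside the subnet.
range_in_subnet = start in subnet and end in subnet


def in_pool(addr):
    """Return True if addr falls inside the DHCP pool (hypothetical helper)."""
    return start <= addr <= end


print("pool size:", pool_size)
print("range inside subnet:", range_in_subnet)
print("gateway in pool:", in_pool(gateway))
print("bridge address in pool:", in_pool(bridge_addr))
```

Neither the gateway nor the bridge address overlaps the pool, so the leases themselves should not collide with either of them.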

So I suppose my question is, is there a correct way to set the interface to “broadcast only” (as that is all that’s needed for DHCP)? Is my method of just setting the address to the broadcast address correct? It works, but my searching has not led me anywhere indicating whether this is correct practice or not. If this is wrong, is there a way to allow each node to have a separate address on the bridge, or should I use a different network setup in my cluster?