Hello,

Thanks to feedback from this community (thanks @Tomp), I tried using the “routed” nictype for the following use case.

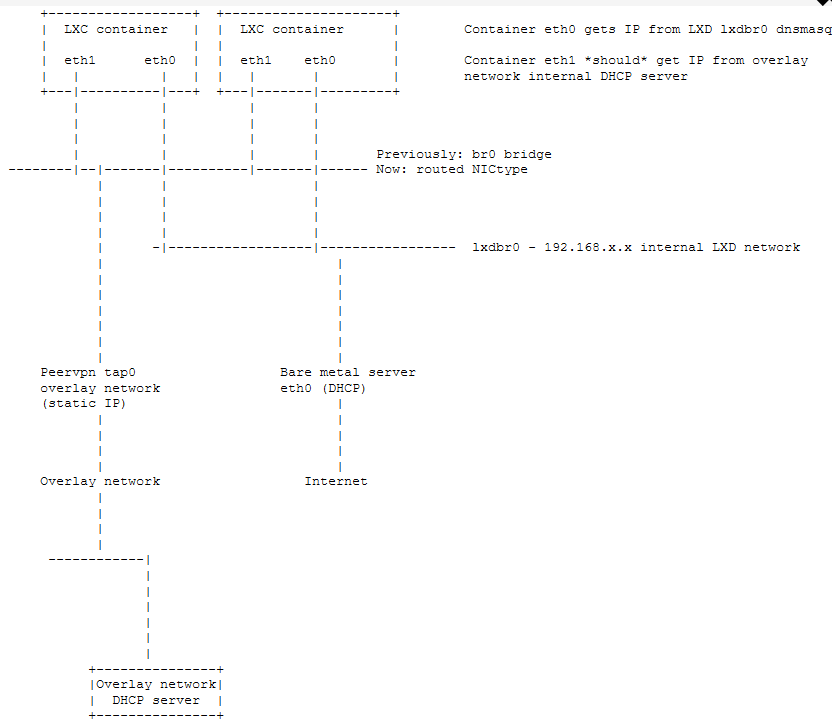

A bare-metal server has two network interfaces:

- eth0, with a dynamic IP address provided by the company hosting the server. This lets containers access the internet through lxdbr0. Containers receive a 192.168.xx.xx IP address on their own eth0, assigned (I think) by LXD's internal dnsmasq. This is a standard setup and works correctly.

- tap0, a PeerVPN-managed interface providing access to an overlay network to which other containers are connected, both locally and remotely (hosted on other bare-metal servers). A DHCP server on the overlay network provides dynamic IP addresses to any computer or container connected to it.

Each container managed by LXD on this server therefore has two interfaces:

- eth0, connected to the LXD bridge and receiving a dynamic IP address. This works flawlessly.

- eth1, connected to the overlay network and receiving a dynamic IP address from the DHCP server on that network. Note that this DHCP server runs on another bare-metal server.

The previous setup used a bridge interface on top of tap0, which gave all containers seamless access to the overlay network. With the deprecation of IP aliasing and bridge-utils, this solution no longer works on recent Debian/Ubuntu versions.

Based on previous feedback (Unable to create Slack/Nebula bridge to use as overlay network for LXD containers), we tried skipping the creation of a bridge interface on top of tap0 (PeerVPN) and instead adding a “routed” nictype NIC to containers, giving eth1 access to tap0 with the following command:

```
lxc config device add mycontainer eth1 nic nictype=routed parent=tap0
```

This does not work: eth1 inside the container cannot obtain an IP address from the overlay network's DHCP server, nor can it ping the local tap0 IP address. The DHCP server does not “see” the container's DHCP requests.

Even manually setting the eth1 IP address in the container after starting it does not make the overlay network reachable.
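One plausible reading of the DHCP failure, assuming the host side of a routed NIC behaves like an ordinary layer-3 hop (an assumption, not verified against LXD internals): a DHCPDISCOVER is sent from 0.0.0.0 to the limited broadcast address 255.255.255.255, and routers do not forward limited broadcasts. The sketch below models only that forwarding decision; `Packet`, `l3_hop_forwards` and the 10.10.0.0/16 overlay subnet are hypothetical placeholders.

```python
import ipaddress
from dataclasses import dataclass

# Limited broadcast: confined to the local link, never forwarded by a router.
LIMITED_BROADCAST = ipaddress.IPv4Address("255.255.255.255")


@dataclass
class Packet:
    src: ipaddress.IPv4Address
    dst: ipaddress.IPv4Address


def l3_hop_forwards(pkt: Packet, routes: list[ipaddress.IPv4Network]) -> bool:
    """Hypothetical model of a layer-3 hop's forwarding decision.

    A limited broadcast is dropped; any other packet is forwarded only if
    one of the routes covers its destination.
    """
    if pkt.dst == LIMITED_BROADCAST:
        return False
    return any(pkt.dst in net for net in routes)


# Placeholder overlay subnet (the real one is not given in the post).
overlay_routes = [ipaddress.IPv4Network("10.10.0.0/16")]

# A DHCPDISCOVER has no source address yet and is broadcast.
discover = Packet(ipaddress.IPv4Address("0.0.0.0"), LIMITED_BROADCAST)
# For contrast: an outbound unicast packet to an overlay address.
unicast = Packet(ipaddress.IPv4Address("10.10.0.50"), ipaddress.IPv4Address("10.10.0.1"))

print(l3_hop_forwards(discover, overlay_routes))  # False
print(l3_hop_forwards(unicast, overlay_routes))   # True
```

The unicast case only covers the outbound direction; whether a reply can come back to the container is a separate question.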

However, it works when we specify an IP address while adding eth1 to the container AND manually set the route to the overlay network:

```
lxc config device add mycontainer eth1 nic nictype=routed parent=tap0 ipv4.address=$overlay_network_static_ip
```

Then, in the container:

```
route add -net etc…
```

In this case the container can see the overlay network, resolve internal hostnames, ping the tap0 IP address, and ping any IP reachable over the VPN. Unfortunately, if we manually change the container's IP address after boot, or try to obtain a dynamic IP address, access to the VPN is lost, as before. The IP address inside the container must be the same as the one declared when adding the NIC.
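This behaviour is consistent with a simple model, assuming (not verified here) that a routed NIC makes the host install a /32 route towards the container, and answer ARP on tap0, only for the addresses listed in `ipv4.address`. Replies addressed to any other container IP then have no route back. The sketch below models only the route; `host_routes_for`, `reply_reaches_container` and the addresses are hypothetical placeholders.

```python
import ipaddress


def host_routes_for(declared: list[str]) -> list[ipaddress.IPv4Network]:
    """Assumed behaviour: one /32 host route per address in ipv4.address."""
    return [ipaddress.IPv4Network(f"{ip}/32") for ip in declared]


def reply_reaches_container(container_ip: str, declared: list[str]) -> bool:
    """Can return traffic for container_ip be routed back to the container?"""
    addr = ipaddress.IPv4Address(container_ip)
    return any(addr in net for net in host_routes_for(declared))


declared = ["10.10.0.50"]  # placeholder for $overlay_network_static_ip

print(reply_reaches_container("10.10.0.50", declared))  # True: IP matches ipv4.address
print(reply_reaches_container("10.10.0.77", declared))  # False: IP changed after boot
print(reply_reaches_container("10.10.0.50", []))        # False: no ipv4.address declared
```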

The problem is that we cannot use static IP addresses, so manually setting each container's IP address is not a sustainable solution.

Is it possible, with the “routed” nictype, to send, receive, or forward DHCP requests? If not, which nictype would be able to do so?

As mentioned earlier, the current setup, based on deprecated Debian/Ubuntu versions, relies on a br0 bridge that lets all containers connect to tap0 seamlessly, effectively hiding the fact that tap0 is an overlay VPN shared across multiple servers.

The setup is shown in the image at the top of this post (a text version was not readable).

Thanks for your help,

Best (and happy new year)