Hi,

With reference to my post in the thread "If the shutdown and startup are timed correctly, a zombie listen port will be created":

My container has been running fairly stably for about two weeks but has started misbehaving.

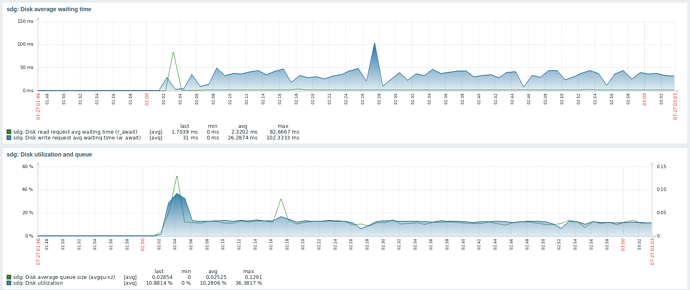

- First noticed because the load average is high: currently about 16 when it is normally about 2. No obvious culprits in `top`, but this is the same MO as I have seen on the last two occasions of this issue.

- The culprit (or at least the most obviously impacted) container is running Plex Media Server.

- The Plex web frontend seems to be working for browsing the libraries etc., but it will not play media and has stopped pulling DVR guide data, etc.

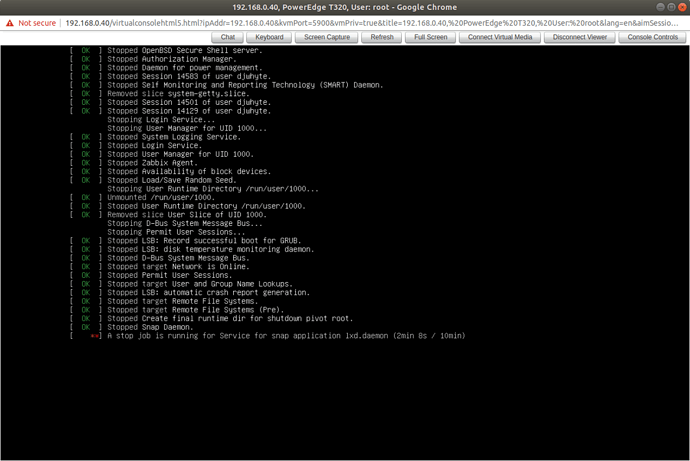

- Attempted a shutdown of the service within the container, which failed. `systemctl status plexmediaserver` shows the service is now in a failed state.

- Attempted to stop the container, but `lxc stop plex` never returns. After five minutes, I pressed Ctrl+C to get out.

- `lxc info` output is below. Note the network interfaces have gone. Log messages are quite benign, I think.

      Name: plex
      Location: none
      Remote: unix://
      Architecture: x86_64
      Created: 2021/06/06 12:50 UTC
      Status: Running
      Type: container
      Profiles: default
      Pid: 22671
      Resources:
        Processes: 6
        Disk usage:
          root: 16.76GB
        CPU usage:
          CPU usage (in seconds): 159936
        Memory usage:
          Memory (current): 230.21MB
          Memory (peak): 12.46GB
      Snapshots:
        snap0 (taken at 2021/06/15 11:41 UTC) (stateless)

      Log:

      lxc plex 20210731102443.919 WARN conf - conf.c:lxc_map_ids:3389 - newuidmap binary is missing
      lxc plex 20210731102443.920 WARN conf - conf.c:lxc_map_ids:3395 - newgidmap binary is missing
      lxc plex 20210731103736.799 WARN conf - conf.c:lxc_map_ids:3389 - newuidmap binary is missing
      lxc plex 20210731103736.799 WARN conf - conf.c:lxc_map_ids:3395 - newgidmap binary is missing

- Attempted to stop the container with the `-f` flag; it still seems to run forever. No change to the output of `lxc info`.

- The host is running Ubuntu 20.04.2.

- Running the LXD snap: `tracking: 4.0/stable/ubuntu-20.04` and `installed: 4.0.7`.
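
On the high load with nothing obvious in `top`: Linux counts tasks in uninterruptible sleep (state `D`) towards the load average even though they use no CPU, so that may be what is happening here. A minimal sketch for listing such tasks on the host follows. It is not something I have run yet, the helper name `list_dstate` is made up for illustration, and it only reports the first word of each process name.

```shell
#!/bin/sh
# list_dstate: hypothetical helper that prints "PID NAME" for every task
# whose /proc/<pid>/status reports state D (uninterruptible sleep).
# $1 is the proc root to scan; it defaults to /proc.
list_dstate() {
    root="${1:-/proc}"
    for f in "$root"/[0-9]*/status; do
        # Skip entries that vanished or are unreadable.
        [ -r "$f" ] || continue
        awk '
            /^Name:/  { name = $2 }
            /^State:/ { state = $2 }
            /^Pid:/   { pid = $2 }
            END       { if (state == "D") print pid, name }
        ' "$f" 2>/dev/null
    done
}

# Scan the live system.
list_dstate
```

If this prints processes belonging to the stuck container, the contents of `/proc/<pid>/stack` for those PIDs (readable as root on most kernels) would probably be the next thing worth capturing.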

Happy to leave this container in a dodgy state for the next day or so, but I will need to recover it for next week. The only way I have to recover it is to restart the host.

Regards,

Whytey