Introduction

LXD 3.19 will ship with virtual machine support.

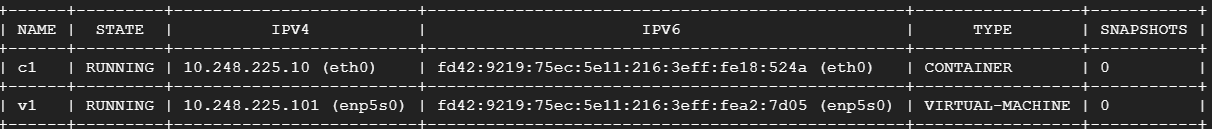

This effectively lets you mix and match containers and virtual machines on the same system based on the workloads you want to run. Those virtual machines use the same profiles, networks and storage pools as the containers.

The VMs run through QEMU using separate VM images. To get as close as possible to feature parity with LXD containers, an agent is available which, when run inside the VM, makes it possible to use lxc exec, lxc file, … the same way you would with a container.

Trying it

As of earlier today, the LXD edge snap contains everything needed to try the virtual machine feature.

To install it, run snap install lxd --edge on a system which supports virtualization extensions.
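
Before installing, a quick preflight check can save some head-scratching. The sketch below makes a few assumptions. It assumes Linux, where Intel VT-x and AMD-V show up as the `vmx` and `svm` flags in /proc/cpuinfo. It also assumes QEMU's KVM acceleration is available through /dev/kvm. Because VM support is also limited to x86_64 for now, it checks the architecture as well. The function names are hypothetical helpers, not part of LXD.

```python
import platform
from pathlib import Path

# On Linux, Intel VT-x is reported as the "vmx" CPU flag and AMD-V as "svm".
VIRT_FLAGS = {"vmx", "svm"}


def cpu_has_virt_extensions(cpuinfo_text: str) -> bool:
    """Return True if any "flags" line of /proc/cpuinfo lists vmx or svm."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags" and VIRT_FLAGS & set(value.split()):
            return True
    return False


def vm_preflight(cpuinfo_text: str, machine: str, kvm_present: bool) -> list[str]:
    """Collect obvious blockers for LXD VMs; an empty list means none were found."""
    problems = []
    if machine != "x86_64":
        problems.append(f"{machine} is not supported yet, only x86_64 is")
    if not cpu_has_virt_extensions(cpuinfo_text):
        problems.append("no vmx/svm flag in /proc/cpuinfo")
    if not kvm_present:
        problems.append("/dev/kvm does not exist")
    return problems


if __name__ == "__main__":
    cpuinfo_path = Path("/proc/cpuinfo")
    cpuinfo = cpuinfo_path.read_text() if cpuinfo_path.exists() else ""
    issues = vm_preflight(cpuinfo, platform.machine(), Path("/dev/kvm").exists())
    print("\n".join(issues) if issues else "no obvious blockers found")
```

A missing flag can also mean virtualization is disabled in the firmware settings, or that you are already inside a VM without nested virtualization.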

Right now, only the dir storage backend works with VMs, so you should configure LXD to use that.
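
Instead of answering the `lxd init` questions interactively, the same setup can be fed to `lxd init --preseed` on standard input. The following is a minimal sketch that emits such a preseed with the dir backend, a pool named default and an lxdbr0 bridge, mirroring the interactive answers in the session that follows. The exact key layout is an assumption about the preseed format rather than something taken from this post. Compare it against the YAML that `lxd init` offers to print at the end of its questions before relying on it. `dir_preseed` is a hypothetical helper.

```python
def dir_preseed(pool: str = "default", bridge: str = "lxdbr0") -> str:
    """Build a minimal `lxd init --preseed` document using the dir backend.

    Assumption: these keys follow the preseed layout printed by `lxd init`.
    """
    return f"""\
storage_pools:
- name: {pool}
  driver: dir
networks:
- name: {bridge}
  config:
    ipv4.address: auto
    ipv6.address: auto
profiles:
- name: default
  devices:
    root:
      path: /
      pool: {pool}
      type: disk
    eth0:
      name: eth0
      nictype: bridged
      parent: {bridge}
      type: nic
"""


if __name__ == "__main__":
    # Pipe the output into `lxd init --preseed`.
    print(dir_preseed(), end="")
```

Saved as a script, its output can be piped straight into `lxd init --preseed` on a fresh install.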

The only images currently available for this are ubuntu:18.04 and ubuntu:19.10.

Here it is being installed and configured, then running a container and a VM:

```
root@lantea:~# snap install lxd --edge && lxd.migrate -yes
2019-11-19T20:35:09Z INFO Waiting for restart...
lxd (edge) git-2b837b6 from Canonical✓ installed
=> Connecting to source server
=> Connecting to destination server
=> Running sanity checks
The source server is empty, no migration needed.

The migration is now complete and your containers should be back online.
All done. You may need to close your current shell and open a new one to have the "lxc" command work.
To migrate your existing client configuration, move ~/.config/lxc to ~/snap/lxd/current/.config/lxc

root@lantea:~# lxd init
Would you like to use LXD clustering? (yes/no) [default=no]:
Do you want to configure a new storage pool? (yes/no) [default=yes]:
Name of the new storage pool [default=default]:
Name of the storage backend to use (btrfs, ceph, dir, lvm, zfs) [default=zfs]: dir
Would you like to connect to a MAAS server? (yes/no) [default=no]:
Would you like to create a new local network bridge? (yes/no) [default=yes]:
What should the new bridge be called? [default=lxdbr0]:
What IPv4 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]:
What IPv6 address should be used? (CIDR subnet notation, “auto” or “none”) [default=auto]:
Would you like LXD to be available over the network? (yes/no) [default=no]:
Would you like stale cached images to be updated automatically? (yes/no) [default=yes]
Would you like a YAML "lxd init" preseed to be printed? (yes/no) [default=no]:

root@lantea:~# lxc profile edit default
root@lantea:~# lxc profile show default
config:
  user.vendor-data: |
    #cloud-config
    apt_mirror: http://us.archive.ubuntu.com/ubuntu/
    ssh_pwauth: yes
    users:
      - name: ubuntu
        passwd: "$6$s.wXDkoGmU5md$d.vxMQSvtcs1I7wUG4SLgUhmarY7BR.5lusJq1D9U9EnHK2LJx18x90ipsg0g3Jcomfp0EoGAZYfgvT22qGFl/"
        lock_passwd: false
        groups: lxd
        shell: /bin/bash
        sudo: ALL=(ALL) NOPASSWD:ALL
description: Default LXD profile
devices:
  eth0:
    name: eth0
    nictype: bridged
    parent: lxdbr0
    type: nic
  root:
    path: /
    pool: default
    type: disk
name: default
used_by: []

root@lantea:~# lxc launch ubuntu:18.04 c1
Creating c1
Starting c1
root@lantea:~# lxc init ubuntu:18.04 v1 --vm
Creating v1
root@lantea:~# lxc config device add v1 config disk source=cloud-init:config
Device config added to v1
root@lantea:~# lxc start v1
root@lantea:~# lxc console v1
To detach from the console, press: <ctrl>+a q

v1 login: ubuntu
Password:
Welcome to Ubuntu 18.04.3 LTS (GNU/Linux 4.15.0-70-generic x86_64)

 * Documentation:  https://help.ubuntu.com
 * Management:     https://landscape.canonical.com
 * Support:        https://ubuntu.com/advantage

  System information as of Tue Nov 19 21:53:52 UTC 2019

  System load:  0.24              Processes:             110
  Usage of /:   10.8% of 8.86GB   Users logged in:       0
  Memory usage: 12%               IP address for enp5s0: 10.86.33.201
  Swap usage:   0%

0 packages can be updated.
0 updates are security updates.

The programs included with the Ubuntu system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Ubuntu comes with ABSOLUTELY NO WARRANTY, to the extent permitted by
applicable law.

ubuntu@v1:~$ sudo -i
root@v1:~# mount -t 9p config /mnt/
root@v1:~# cd /mnt/
root@v1:/mnt# ./install.sh
Created symlink /etc/systemd/system/multi-user.target.wants/lxd-agent.service → /lib/systemd/system/lxd-agent.service.
Created symlink /etc/systemd/system/multi-user.target.wants/lxd-agent-9p.service → /lib/systemd/system/lxd-agent-9p.service.

LXD agent has been installed, reboot to confirm setup.
To start it now, unmount this filesystem and run: systemctl start lxd-agent-9p lxd-agent
root@v1:/mnt#

root@lantea:~# lxc restart v1
root@lantea:~# lxc list
+------+---------+-----------------------+-------------------------------------------------+-----------------+-----------+
| NAME |  STATE  |         IPV4          |                      IPV6                       |      TYPE       | SNAPSHOTS |
+------+---------+-----------------------+-------------------------------------------------+-----------------+-----------+
| c1   | RUNNING | 10.86.33.59 (eth0)    | fd42:6f9e:d112:ebe1:216:3eff:fefc:8d5b (eth0)   | CONTAINER       | 0         |
+------+---------+-----------------------+-------------------------------------------------+-----------------+-----------+
| v1   | RUNNING | 10.86.33.201 (enp5s0) | fd42:6f9e:d112:ebe1:216:3eff:fea4:d961 (enp5s0) | VIRTUAL-MACHINE | 0         |
+------+---------+-----------------------+-------------------------------------------------+-----------------+-----------+
root@lantea:~# lxc exec v1 bash
root@v1:~# ls /
bin   home            lib64       opt   sbin  tmp      vmlinuz.old
boot  initrd.img      lost+found  proc  snap  usr
dev   initrd.img.old  media       root  srv   var
etc   lib             mnt         run   sys   vmlinuz
root@v1:~# exit
root@lantea:~#
```
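
The TYPE column of `lxc list` is what tells containers and virtual machines apart. In scripts, a sketch like the following groups instance names by that column. It assumes that `lxc list --format csv -c nt` prints one `name,TYPE` line per instance, with column `n` as the name and `t` as the type. That is worth confirming on your LXD version. Both function names are hypothetical helpers.

```python
import csv
import io
import subprocess
from collections import defaultdict


def group_by_type(csv_text: str) -> dict[str, list[str]]:
    """Group instance names by the TYPE value in `lxc list --format csv -c nt` output."""
    groups: defaultdict[str, list[str]] = defaultdict(list)
    for row in csv.reader(io.StringIO(csv_text)):
        if len(row) < 2:
            continue  # skip blank or malformed lines
        name, kind = row[0], row[1]
        groups[kind].append(name)
    return dict(groups)


def list_instances_by_type() -> dict[str, list[str]]:
    """Run lxc (assumed to be on PATH) and group its instances by type."""
    result = subprocess.run(
        ["lxc", "list", "--format", "csv", "-c", "nt"],
        check=True, capture_output=True, text=True,
    )
    return group_by_type(result.stdout)
```

With the instances from the session above, `group_by_type("c1,CONTAINER\nv1,VIRTUAL-MACHINE\n")` returns `{'CONTAINER': ['c1'], 'VIRTUAL-MACHINE': ['v1']}`. Parsing CSV rather than the table keeps the script independent of column widths.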

A few useful notes:

- The password for the `ubuntu` user is set through `user.vendor-data` to `ubuntu`.
- Leaving `lxc console` is done with `ctrl+a q`.
- The agent needs to be manually installed right now using a script available in the 9p config drive. Once it is installed, restart the virtual machine; once it has fully booted, all additional features will be available.
- You can create an empty VM and have it do UEFI netboot by using `lxc init v2 --empty --vm`.
- The firmware is UEFI with Secure Boot enabled, and all devices use virtio.
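
The agent only becomes usable once the VM has fully booted. Scripts that call `lxc exec` right after `lxc restart` may therefore need to wait for it. A minimal sketch is to poll a trivial command with a timeout. It assumes that `lxc exec` exits non-zero until the agent answers. `wait_for_agent` is a hypothetical helper. Its `run`, `sleep` and `clock` parameters exist only so the polling logic can be tested without LXD.

```python
import subprocess
import time
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def lxc_returncode(argv: Sequence[str]) -> int:
    """Run a command and return its exit status, discarding its output."""
    return subprocess.run(list(argv), stdout=subprocess.DEVNULL,
                          stderr=subprocess.DEVNULL).returncode


def wait_for_agent(instance: str, timeout: float = 120.0, interval: float = 2.0,
                   run: Runner = lxc_returncode,
                   sleep: Callable[[float], None] = time.sleep,
                   clock: Callable[[], float] = time.monotonic) -> bool:
    """Poll `lxc exec <instance> -- true` until it succeeds or the timeout expires.

    Assumption: `lxc exec` fails (non-zero exit) while the agent is not running.
    """
    deadline = clock() + timeout
    while True:
        if run(["lxc", "exec", instance, "--", "true"]) == 0:
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

For example, calling `wait_for_agent("v1")` after `lxc restart v1` returns True as soon as `lxc exec v1 -- true` succeeds. It returns False if that hasn't happened within two minutes.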

Limitations

There are currently so many limitations to this that listing them all would be futile.

We are actively working on the storage side of things now, as it is a release blocker for 3.19.

There are a number of easy issues we’re also working on in parallel to improve the agent integration and speed things up a bit.

We are also working on distrobuilder support for VM image building. Once that is done, we’ll gradually start publishing images for all the Linux distributions we currently support as containers.

Documentation is another blocker for 3.19: we need to update a lot of it to reflect the fact that both containers and virtual machines are supported and that not all configuration options apply to both.

Right now, this only works on x86_64. Additional architectures will be added soon, starting with aarch64.