As you may know, we’ve been busy building our own immutable Linux image to act as an ideal platform to run Incus.

You can find more details about it in the lxc/incus-os repository on GitHub: https://github.com/lxc/incus-os

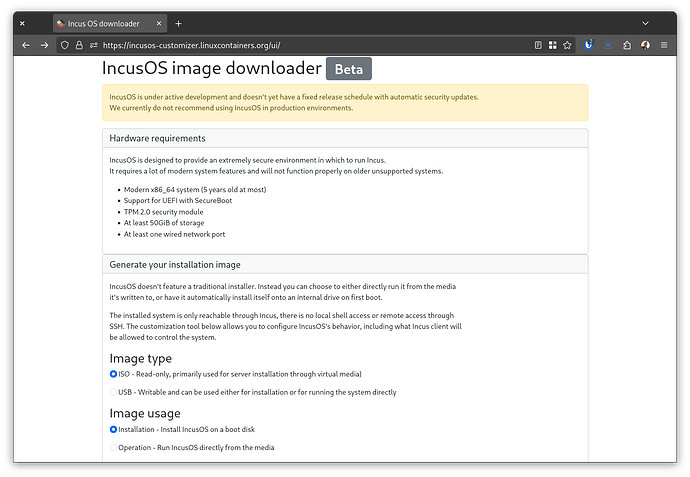

We now have fairly reliable images that can be used to try out this work. However, we strongly recommend against using them in production for now, as we don't yet have a strict release cadence or a defined process for handling security updates.

To make it easy for folks to try it out, we've now released a custom image generator.

It lets you easily create an Incus OS installation or runtime image by answering a few questions and then downloading your customized image directly from our servers.

This can now be found at: https://incusos-customizer.linuxcontainers.org

If you want to try this out as an Incus VM, we’d recommend downloading an installation ISO with your Incus client certificate added to it.

You can then use that ISO with:

incus create incus-os -c limits.cpu=4 -c limits.memory=4GiB -c security.secureboot=false -d root,size=50GiB --empty --vm

incus config device add incus-os vtpm tpm

incus config device add incus-os install disk source=/PATH/TO/THE/DOWNLOAD.iso boot.priority=10

incus start incus-os

sleep 5s

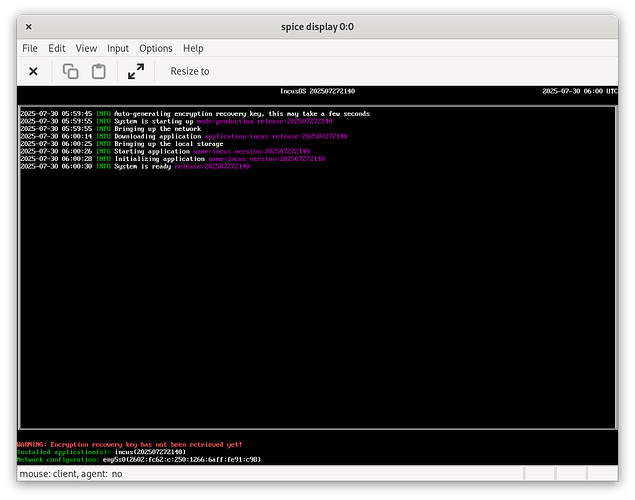

incus console incus-os --type=vga

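Once the installation has completed, run:

```shell
# Stop the VM, detach the installation ISO, then boot the installed system with the VGA console attached
incus stop incus-os
incus config device remove incus-os install
incus start incus-os --console=vga
```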

This will remove the install media and boot the installed system.

Once the system is up and running, you can add this server as a remote with `incus remote add` and start interacting with it.
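A minimal sketch of that step, assuming you already know the server's address and that its Incus API listens on the default port. The helper name `add_incus_os_remote` and the remote name used below are hypothetical. Because your client certificate was embedded in the ISO, no trust token should be needed; `incus remote add` will ask you to confirm the server's certificate fingerprint on first connection.

```shell
# Hypothetical helper: register an Incus OS server as a remote, then query it.
# Usage: add_incus_os_remote <remote-name> <address>
add_incus_os_remote() {
    remote_name="$1"
    address="$2"

    # Assumes the client certificate embedded in the ISO is already trusted,
    # so no token is passed; Incus prompts to confirm the server fingerprint.
    incus remote add "$remote_name" "$address" || return 1

    # Commands target the new server by prefixing it with "<remote>:".
    incus list "${remote_name}:"
}
```

For example, `add_incus_os_remote my-incus-os 192.0.2.10` (a placeholder address) would register the server, after which other `incus` commands can target it with the `my-incus-os:` prefix.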

If installing on physical hardware, you'll need to either switch Secure Boot to Setup Mode so that we can perform automatic key enrollment, or manually load our KEK and DB keys, which are available as DER files in the EFI keys folder.
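If the machine can already boot a Linux system in UEFI mode, you may want to check whether its firmware is in Setup Mode before installing. A minimal sketch, assuming efivarfs is mounted at `/sys/firmware/efi/efivars`: the standard UEFI `SetupMode` variable holds 1 in Setup Mode and 0 in User Mode, and efivarfs stores it after a 4-byte attribute header. The helper name `setup_mode_status` is hypothetical, and it only reads the variable; switching to Setup Mode is typically done from the firmware's setup menu.

```shell
# Hypothetical helper: report whether the UEFI firmware is in Secure Boot Setup Mode.
# Prints "setup", "user" or "unknown". An alternative file path may be passed for testing.
setup_mode_status() {
    var_file="${1:-/sys/firmware/efi/efivars/SetupMode-8be4df61-93ca-11d2-aa0d-00e098032b8c}"

    # No readable variable: not booted via UEFI, or efivarfs is not mounted.
    if [ ! -r "$var_file" ]; then
        echo "unknown"
        return 1
    fi

    # Skip the 4 attribute bytes and read the single data byte as an unsigned decimal.
    value=$(od -An -t u1 -j 4 -N 1 "$var_file" | tr -d ' ')

    case "$value" in
        1) echo "setup" ;;
        0) echo "user" ;;
        *) echo "unknown"; return 1 ;;
    esac
}
```

Running `setup_mode_status` with no argument reads the live variable; "setup" means automatic key enrollment should be possible.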