Hi, this weekend I've been trying to set up IncusOS so I can move my homelab from Ubuntu into VMs/containers.

I have a number of VLANs and subnets (all 192.168.x.0/24) to keep things isolated. The relevant ones here are:

- VLAN 1010, subnet 192.168.11.0/24 - server access routable from my LAN.

- VLAN 1020, subnet 192.168.20.0/24 - servers whose IPs are DNAT-redirected in from the WAN (untagged/primary on the port this system is connected to)

- VLAN 1120, subnet 192.168.120.0/24 - isolated IP cameras

`incus admin os system network show` currently outputs the following. This all works, and I can access IncusOS on 192.168.11.30:

```yaml
config:
  interfaces:
  - addresses:
    - 192.168.20.30/24
    - slaac
    hwaddr: xx:xx:xx:xx:xx:2d
    name: enp3s0
    required_for_online: "no"
    roles:
    - instances
    routes:
    - to: 0.0.0.0/0
      via: 192.168.20.1
    vlan_tags:
    - 1010
    - 1011
    - 1110
    - 1120
  time:
    timezone: UTC
  vlans:
  - addresses:
    - 192.168.11.30/24
    id: 1010
    name: vlan.lansrvs
    parent: enp3s0
    roles:
    - instances
    - management
    routes:
    - to: 0.0.0.0/0
      via: 192.168.11.1
  - id: 1120
    name: vlan.isocctv
    parent: enp3s0
    roles:
    - instances
```

`incus network list` looks like this:

```
$ incus network list
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
|     NAME     |  TYPE  | MANAGED |     IPV4      |           IPV6            |        DESCRIPTION         | USED BY |  STATE  |
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
| enp3s0       | bridge | NO      |               |                           |                            | 1       |         |
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
| incusbr0     | bridge | YES     | 10.100.0.1/16 | fd42:6cd0:eb92:4cbe::1/64 | Local network bridge (NAT) | 5       | CREATED |
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
| vlan.isocctv | vlan   | NO      |               |                           |                            | 1       |         |
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
| vlan.lansrvs | vlan   | NO      |               |                           |                            | 1       |         |
+--------------+--------+---------+---------------+---------------------------+----------------------------+---------+---------+
```

I've worked out that I can get instances onto my host network via `enp3s0` by adding a bridged NIC like this:

```yaml
eth1:
  nictype: bridged
  parent: enp3s0
  type: nic
```

Instances set up this way get an address in VLAN 1020 via DHCP just fine.
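For reference, the same bridged NIC can also be added from the CLI instead of editing the instance config by hand. This is only a sketch: `myinstance` is a placeholder, not a real instance name from my setup.

```shell
# Attach eth1 as a bridged NIC on the host's enp3s0 bridge.
# No VLAN tag is set, so traffic goes out untagged (VLAN 1020 on this port).
# "myinstance" is a placeholder instance name.
incus config device add myinstance eth1 nic nictype=bridged parent=enp3s0

# Show the resulting device configuration for the instance.
incus config device show myinstance
```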

I can also add them to VLAN 1010 with a static IP by using the `routed` NIC type, e.g.:

```yaml
eth1:
  ipv4.address: 192.168.10.31
  nictype: routed
  parent: vlan.lansrvs
  type: nic
```

That works fine too. I can reach the instance on this address.

The problem is that I can't attach instances to the other VLANs (e.g. 1120) and have them get an address via DHCP. I thought the following would work:

```yaml
eth1:
  nictype: bridged
  parent: vlan.isocctv
  type: nic
```

But this prevents the instance from starting, with the following error:

```
Failed to start device "eth1": Failed to connect to OVS: Failed to connect to OVS: Failed to connect to OVS: failed to connect to unix:/run/openvswitch/db.sock: failed to open connection: dial unix /run/openvswitch/db.sock: connect: no such file or directory
```

Following a suggestion from another thread, I've also tried bridging directly from the NIC and setting the VLAN on the device instead:

```yaml
eth1:
  nictype: bridged
  parent: enp3s0
  type: nic
  vlan: '1120'
```
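For reference, the `vlan` key can also be set on an existing bridged NIC from the CLI. Again just a sketch, with `myinstance` as a placeholder instance name:

```shell
# Tag eth1's traffic with VLAN 1120 on the enp3s0 bridge.
# "myinstance" is a placeholder instance name.
incus config device set myinstance eth1 vlan=1120

# Alternatively, create the tagged device in one step:
# incus config device add myinstance eth1 nic nictype=bridged parent=enp3s0 vlan=1120
```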

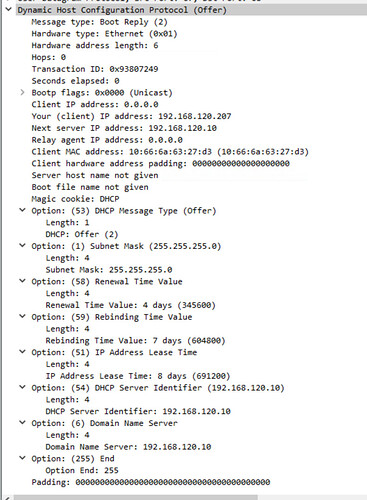

While this lets the instance start, it still doesn't get an IP via DHCP, even after I delete the `vlan.isocctv` VLAN from the host network config using the `incus admin os` CLI.

I'm sure I had this working at one point, so I think I must be doing something stupid and just haven't managed to get back to my previous configuration. Any help appreciated!