I use an FRR router to peer over BGP with the bridge network on each member of my Incus cluster. How do I configure FRR so that traffic reaches a virtual machine on the correct cluster member, given that the bridge network on every member uses the same IPv4 subnet?
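The sketch below illustrates why the identical subnet is a problem. It assumes every member's bridge uses the `ipv4.address` shown later in this post (10.236.116.1/24). Routes are keyed by prefix, so the three members collapse to one prefix, and any VM address matches all three members equally. Longest-prefix match alone therefore cannot tell which member hosts a given VM. The helper names `matching_members` and `distinct_prefixes` are hypothetical and exist only for illustration.

```python
import ipaddress

# Assumption: every member's bridge is configured with the same ipv4.address,
# as in the bgp-br config below, so every member owns the same subnet.
member_subnets = {
    "hci01": ipaddress.ip_interface("10.236.116.1/24").network,
    "hci02": ipaddress.ip_interface("10.236.116.1/24").network,
    "hci03": ipaddress.ip_interface("10.236.116.1/24").network,
}


def matching_members(dest: str) -> list:
    """Return every member whose bridge subnet contains the destination address."""
    addr = ipaddress.ip_address(dest)
    return sorted(m for m, net in member_subnets.items() if addr in net)


def distinct_prefixes() -> set:
    """A routing table is keyed by prefix, so identical announcements collapse."""
    return set(member_subnets.values())


if __name__ == "__main__":
    # A hypothetical VM address matches all three members equally.
    print(matching_members("10.236.116.50"))  # ['hci01', 'hci02', 'hci03']
    print(distinct_prefixes())  # {IPv4Network('10.236.116.0/24')}
```

With one prefix and three candidate next hops, FRR installs either a single best path or, if `maximum-paths` is configured, an ECMP group. Neither choice takes into account which member actually hosts the VM.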

The following is the configuration of my FRR router:

Building configuration...

Current configuration:
!
frr version 8.2
frr defaults traditional
hostname OpenWrt
log syslog
!
password zebra
!
router bgp 398765
 bgp router-id 192.168.1.1
 neighbor 192.168.1.101 remote-as 65100
 neighbor 192.168.1.102 remote-as 65100
 neighbor 192.168.1.103 remote-as 65100
 !
 address-family ipv4 unicast
  neighbor 192.168.1.101 soft-reconfiguration inbound
  neighbor 192.168.1.101 prefix-list bgp-everything in
  neighbor 192.168.1.101 prefix-list bgp-everything out
  neighbor 192.168.1.102 soft-reconfiguration inbound
  neighbor 192.168.1.102 prefix-list bgp-everything in
  neighbor 192.168.1.102 prefix-list bgp-everything out
  neighbor 192.168.1.103 soft-reconfiguration inbound
  neighbor 192.168.1.103 prefix-list bgp-everything in
  neighbor 192.168.1.103 prefix-list bgp-everything out
 exit-address-family
exit
!
access-list vty seq 5 permit 127.0.0.0/8
access-list vty seq 10 deny any
!
ip prefix-list bgp-everything seq 1 permit 10.236.116.0/24
!
line vty
 access-class vty
exit
!
end
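For reference, here is a simplified model of how FRR evaluates the `bgp-everything` prefix-list that is applied in both directions above. Entries are checked in `seq` order and the first match wins. An entry without `ge`/`le` matches only the exact prefix and length, and a route that matches no entry is implicitly denied. The sketch covers IPv4 only, ignores the `any` keyword, and uses hypothetical names (`Entry`, `evaluate`). It shows that, as configured, only exactly 10.236.116.0/24 passes, so any more-specific route (for example a /32 for a single VM) would be filtered.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Entry:
    seq: int
    action: str  # "permit" or "deny"
    prefix: ipaddress.IPv4Network
    ge: Optional[int] = None
    le: Optional[int] = None

    def matches(self, route: ipaddress.IPv4Network) -> bool:
        # The route must fall inside the entry's prefix...
        if not route.subnet_of(self.prefix):
            return False
        # ...and, without ge/le, it must have exactly the same length.
        if self.ge is None and self.le is None:
            return route.prefixlen == self.prefix.prefixlen
        low = self.ge if self.ge is not None else self.prefix.prefixlen
        high = self.le if self.le is not None else 32
        return low <= route.prefixlen <= high


def evaluate(entries, route: str) -> str:
    """Return the action of the first matching entry, else the implicit deny."""
    net = ipaddress.ip_network(route)
    for entry in sorted(entries, key=lambda e: e.seq):
        if entry.matches(net):
            return entry.action
    return "deny"


# Mirrors: ip prefix-list bgp-everything seq 1 permit 10.236.116.0/24
bgp_everything = [Entry(1, "permit", ipaddress.ip_network("10.236.116.0/24"))]
```

A hypothetical variant with `le 32` (`Entry(1, "permit", ..., le=32)`) would also accept host routes inside 10.236.116.0/24.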

The following is the configuration of my bridge network:

config:
  bgp.peers.myfrr.address: 192.168.1.1
  bgp.peers.myfrr.asn: "398765"
  ipv4.address: 10.236.116.1/24
  ipv4.nat: "false"
  ipv6.address: fd42:ce2c:fba1:4592::1/64
  ipv6.nat: "true"
description: ""
name: bgp-br
type: bridge
used_by: []
managed: true
status: Created
locations:
- hci01
- hci02
- hci03
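The next sketch derives what each member would announce from this shared network config. It assumes the Incus rule that a bridge subnet is advertised over BGP only when NAT is not enabled for that address family. Under that assumption, each of hci01, hci02 and hci03 announces 10.236.116.0/24, and the IPv6 subnet is not announced because `ipv6.nat` is `"true"`. The function names are hypothetical. Instance-level routes and forwards, which Incus can also advertise, are not modeled.

```python
import ipaddress

# Values copied from the bgp-br network config above.
bridge_config = {
    "ipv4.address": "10.236.116.1/24",
    "ipv4.nat": "false",
    "ipv6.address": "fd42:ce2c:fba1:4592::1/64",
    "ipv6.nat": "true",
}
locations = ["hci01", "hci02", "hci03"]


def advertised_subnets(config: dict) -> list:
    """Subnets announced, assuming only subnets without NAT are advertised."""
    result = []
    for family in ("ipv4", "ipv6"):
        address = config.get(f"{family}.address")
        # Assumption: a missing nat key is treated as "not true" here.
        if address and config.get(f"{family}.nat") != "true":
            result.append(ipaddress.ip_interface(address).network)
    return result


def announcements_per_member() -> dict:
    # The network config is shared by all locations, so every member
    # ends up with the same list of announced subnets.
    return {member: advertised_subnets(bridge_config) for member in locations}
```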

The following is the configuration of my Incus cluster:

root@hci02:~# incus config show
config:
  cluster.https_address: hci02.service:8443
  core.bgp_asn: "65100"
  core.https_address: hci02.service:8443
  images.auto_update_interval: "0"
  loki.api.url: http://192.168.1.106:3100
  loki.instance: incus:clus-cZW0CNlrO3
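As a sanity check on the session side, the ASNs on both ends should agree. FRR's `router bgp` ASN should equal `bgp.peers.myfrr.asn` on the Incus network, and each FRR `remote-as` should equal Incus `core.bgp_asn`. The sketch below parses the relevant FRR lines with regular expressions and compares them with the Incus values copied from this post. `parse_frr` and `check_sessions` are hypothetical helpers, and the regexes only handle the simple `router bgp` / `neighbor ... remote-as` forms used here.

```python
import re

# Relevant lines copied from the FRR running config above.
FRR_CONFIG = """\
router bgp 398765
 bgp router-id 192.168.1.1
 neighbor 192.168.1.101 remote-as 65100
 neighbor 192.168.1.102 remote-as 65100
 neighbor 192.168.1.103 remote-as 65100
"""

# Values copied from `incus config show` and the bgp-br network config.
INCUS = {
    "core.bgp_asn": "65100",
    "bgp.peers.myfrr.asn": "398765",
}


def parse_frr(text: str):
    """Return FRR's local ASN and a {neighbor_ip: remote_as} mapping."""
    local_asn = int(re.search(r"^router bgp (\d+)", text, re.M).group(1))
    neighbors = {
        ip: int(asn)
        for ip, asn in re.findall(r"^\s*neighbor (\S+) remote-as (\d+)", text, re.M)
    }
    return local_asn, neighbors


def check_sessions(frr_text: str, incus: dict) -> list:
    """List ASN mismatches between the FRR config and the Incus settings."""
    local_asn, neighbors = parse_frr(frr_text)
    problems = []
    if int(incus["bgp.peers.myfrr.asn"]) != local_asn:
        problems.append(f"Incus expects AS {incus['bgp.peers.myfrr.asn']}, FRR runs AS {local_asn}")
    for ip, remote_as in neighbors.items():
        if remote_as != int(incus["core.bgp_asn"]):
            problems.append(f"neighbor {ip}: remote-as {remote_as} != core.bgp_asn {incus['core.bgp_asn']}")
    return problems
```

With the values in this post the list is empty, which suggests the sessions themselves are consistent and the remaining issue is the overlapping subnet.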

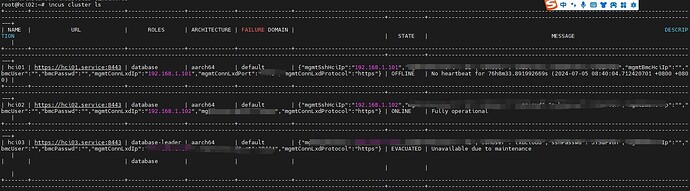

The following is a list of my Incus cluster members: