Hi

I want to know how LXD routes traffic externally, and what the topology of floating IPs is in LXD with OVN.

Can anyone explain this?

Floating IPs (called Network forwards in our documentation) are effectively IPs configured on the OVN logical router’s external port on the uplink network and behave as DNAT rules towards IPs in the internal OVN network.
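Here is a minimal sketch of creating a floating IP with the LXD CLI. The network `ovntest` and the target `10.193.195.2` come from later in this thread. The listen address `203.0.113.10` (a documentation range) and the 80 to 8080 port rule are hypothetical. The small `run` wrapper is an addition for illustration: it only prints each command unless you run the script with `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: floating IP on an OVN network via an LXD network forward.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

NETWORK=ovntest          # OVN network (from this thread)
LISTEN_IP=203.0.113.10   # hypothetical external "floating" IP
TARGET_IP=10.193.195.2   # instance address inside the OVN network

create_forward() {
    # Default target: traffic to LISTEN_IP is DNATed to TARGET_IP.
    run lxc network forward create "$NETWORK" "$LISTEN_IP" target_address="$TARGET_IP"
    # Hypothetical port rule: TCP 80 on LISTEN_IP -> TCP 8080 on TARGET_IP.
    run lxc network forward port add "$NETWORK" "$LISTEN_IP" tcp 80 "$TARGET_IP" 8080
    # Show the resulting forward configuration.
    run lxc network forward show "$NETWORK" "$LISTEN_IP"
}

create_forward
```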

In terms of how LXD gets the external listen IP of a network forward into OVN, the short answer is it doesn’t, at least not anymore.

We used to rely on OVN’s functionality to respond to IP neighbour requests for the network forward’s listen IP on the uplink network. However, in OVN 21.06 they broke/removed that functionality when the network forward’s listen IP isn’t in the same subnet as the uplink network.

See Can't make OVN network forward working in cluster environment - #40 by tomp

So now you need to ensure that the traffic for the network forward’s listen address is forwarded to the OVN router’s external IP (this can be gathered using lxc network get <ovn network> volatile.network.ipv4.address).
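Here is a minimal sketch of that route. It assumes the uplink is a bridge on the LXD host itself (such as `lxdbr0`), so the route can be added locally. If the uplink is an upstream router, the equivalent static route goes on that router instead. The network name `ovn0` and listen address `203.0.113.10` are hypothetical. In dry-run mode it prints the commands, with `<router-ip>` standing in for the looked-up address. Run it with `DRY_RUN=0` to execute them.

```shell
#!/bin/sh
# Sketch: route a network forward's listen IP to the OVN router's external IP.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

NETWORK=ovn0             # hypothetical OVN network
LISTEN_IP=203.0.113.10   # hypothetical network forward listen address
UPLINK_BRIDGE=lxdbr0     # uplink bridge on this host

add_forward_route() {
    # Look up the OVN router's external address on the uplink.
    if [ "$DRY_RUN" = 0 ]; then
        router_ip=$(lxc network get "$NETWORK" volatile.network.ipv4.address)
    else
        echo "+ lxc network get $NETWORK volatile.network.ipv4.address"
        router_ip="<router-ip>"
    fi
    # Send traffic for the listen address to the OVN router.
    run ip route add "$LISTEN_IP/32" via "$router_ip" dev "$UPLINK_BRIDGE"
}

add_forward_route
```

Routes added with `ip route add` do not survive a reboot. In practice they would go into the host's persistent network configuration.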

They later added back an option to re-enable the IP neighbour responders for load balancers, the neighbor_responder option (see https://github.com/ovn-org/ovn/blob/main/ovn-nb.xml#L1895-L1902), but LXD doesn’t use this option at the moment.

Hi,

What is your opinion on routing multiple public IPs, both outbound and inbound?

In your tutorial, outbound traffic uses the host’s external IP address.

I want to route multiple public IPs across multiple OVN networks.

If you can route external IPs/subnets to the OVN network’s volatile.network.ipv{n}.address and specify them for use in the uplink network’s ipv{n}.routes, then you can use them in the following ways:

- As the listen address for a network forward or network load balancer on the OVN virtual router.

- As the subnet used for an ovn network (e.g. by setting ipv{n}.address and ipv{n}.nat=false; see the LXD documentation).

- As a directly routed IP into an instance, using the ipv{n}.routes.external setting on an instance’s ovn NIC (see the LXD documentation).
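The three options above could look roughly like this. The sketch assumes the uplink is `lxdbr0` and that `198.51.100.0/28` is a hypothetical subnet already routed to it. The networks `ovn0`/`ovn1`, the instance `c1` and its profile-provided NIC `eth0` are also hypothetical. If the NIC is defined on the instance itself, use `lxc config device set` instead of `override`. Setting `ipv4.routes` replaces any existing value. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: three ways to use an external subnet routed to the uplink.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

UPLINK=lxdbr0                  # uplink network used by the OVN networks
ROUTED_SUBNET=198.51.100.0/28  # hypothetical subnet routed to the uplink

use_routed_subnet() {
    # Allow OVN networks on this uplink to use the subnet (replaces any existing value).
    run lxc network set "$UPLINK" ipv4.routes "$ROUTED_SUBNET"
    # 1. Listen address of a network forward on an existing OVN network.
    run lxc network forward create ovn0 198.51.100.1
    # 2. Subnet of a new OVN network, routed without NAT (198.51.100.8/29).
    run lxc network create ovn1 --type=ovn network="$UPLINK" ipv4.address=198.51.100.9/29 ipv4.nat=false
    # 3. Single IP routed directly to instance c1's OVN NIC eth0.
    run lxc config device override c1 eth0 ipv4.routes.external=198.51.100.5/32
}

use_routed_subnet
```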

If you have a spare interface that is connected to the actual uplink network, then you don’t need to use the private bridge lxdbr0 as the uplink network. Instead, you can use a physical uplink network that specifies the settings to use for OVN’s purposes.

See the LXD documentation.

This will then connect OVN networks directly to the uplink network.
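Here is a sketch of such a physical uplink on a single node. It assumes `eth1` is the spare interface and `203.0.113.0/24` is the uplink subnet, with its router at `203.0.113.1` (all hypothetical). In a cluster, the physical network first has to be created on each member with `--target` before the final create. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: physical uplink network for OVN on a spare interface (single node).
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

PARENT_IF=eth1   # hypothetical spare interface on the real uplink L2

create_physical_uplink() {
    # Gateway of the uplink subnet and the range OVN routers may use on it.
    run lxc network create UPLINK --type=physical parent="$PARENT_IF" \
        ipv4.gateway=203.0.113.1/24 \
        ipv4.ovn.ranges=203.0.113.100-203.0.113.150 \
        dns.nameservers=203.0.113.1
    # OVN network whose router connects directly to that uplink.
    run lxc network create ovn0 --type=ovn network=UPLINK
}

create_physical_uplink
```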

Finally, if the router on your uplink network supports BGP, you can get LXD to advertise the external IPs/subnets it’s using to that router. This removes the need to manually route these addresses to the OVN router’s volatile.network.ipv{n}.address.
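Here is a rough sketch of the BGP side. It assumes the upstream router is `203.0.113.1` with ASN 65000, and that LXD uses ASN 65001 on `203.0.113.2`. All of these values are hypothetical, as is the peer name `upstream`. The `core.bgp_*` keys are server settings (in a cluster, some of them are per member), while the peer is configured on the uplink network. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: let LXD advertise its external IPs/subnets to the uplink router via BGP.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

configure_bgp() {
    # LXD's BGP speaker (hypothetical address, ASN and router ID).
    run lxc config set core.bgp_address 203.0.113.2:179
    run lxc config set core.bgp_asn 65001
    run lxc config set core.bgp_routerid 203.0.113.2
    # Peer "upstream" on the uplink network (hypothetical router and ASN).
    run lxc network set UPLINK bgp.peers.upstream.address 203.0.113.1
    run lxc network set UPLINK bgp.peers.upstream.asn 65000
}

configure_bgp
```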

See:

Thank you @tomp

In my case, I have two /30 public IP subnets, and each IP has its own MAC address.

I want to use one dedicated IP per OVN network for routing instances to the internet, and use some public IPs as floating IPs to access instances from the internet.

In my case, would you recommend creating a separate bridge interface for each IP and setting it as the parent for the OVN networks?

Do you mean you want to use these IPs as the NAT source address on the OVN network, i.e. by setting lxc network set <network> ipv{n}.nat.address?

Are you using a LXD cluster or a single node?

How are the IPs routed (do they need IP ARP resolution to arrive at the host)?

Yes, exactly.

An LXD cluster.

They are in an OVH datacenter, and each subnet is routed to a single bare-metal server rather than to all servers.

OK, so that’s not really acceptable from an OVN HA perspective. In LXD, each OVN network can potentially use any of the LXD cluster members as the gateway onto the uplink network. When the network is created, each cluster member is assigned a random priority, and the highest-priority active member becomes the active gateway chassis. If that cluster member fails, the member with the next highest priority becomes the active gateway chassis, and so on.
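To see this on a running cluster, one option is to inspect the OVN databases directly. This sketch assumes that LXD implements the gateway priorities with OVN HA chassis groups. It also assumes `ovn-nbctl`/`ovn-sbctl` can reach your databases as-is. Depending on the deployment, you may need `--db` options or a prefixed command name. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: inspect OVN gateway chassis priorities (assumes HA chassis groups).
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

show_gateway_chassis() {
    # HA chassis groups (northbound DB).
    run ovn-nbctl list HA_Chassis_Group
    # Member chassis of those groups and their priorities.
    run ovn-nbctl --columns=chassis_name,priority list HA_Chassis
    # Chassis and their port bindings (southbound DB); the one holding the
    # router's cr-... port binding is typically the active gateway.
    run ovn-sbctl show
}

show_gateway_chassis
```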

So OVN needs a shared L2 uplink network to operate in, because the external IP cannot be routed to only one cluster member. Well, it can, but if that member turns out not to be the active gateway chassis at some point in the future, connectivity will stop working.

What I suggest you do is leave OVN using the private local lxdbr0 bridge as its uplink, and then add firewall rules on each LXD cluster member to perform custom SNAT to the correct source IP for that machine based on the source address of the OVN network’s virtual router ( volatile.network.ipv{n}.address).

You’ll need to bind the external IPs to the specific LXD host’s external interface.
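Here is a sketch of those per-member rules using iptables. It assumes the OVN network `ovn0`, the external interface `eth0` and a public IP `203.0.113.20` for this particular member (all hypothetical). Each member would run it with its own IP. The rule is inserted at the top of POSTROUTING so it matches before the lxdbr0 masquerade rule. If LXD is managing its rules with nftables instead, check the ordering relative to LXD's rule. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: per-member SNAT of an OVN network's outbound traffic to this host's public IP.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

NETWORK=ovn0                 # hypothetical OVN network using lxdbr0 as uplink
EXT_IF=eth0                  # hypothetical external interface of this member
HOST_PUBLIC_IP=203.0.113.20  # hypothetical public IP routed to this member

add_custom_snat() {
    # With ipv4.nat on the OVN network, its outbound traffic arrives from the router's external address.
    if [ "$DRY_RUN" = 0 ]; then
        router_ip=$(lxc network get "$NETWORK" volatile.network.ipv4.address)
    else
        echo "+ lxc network get $NETWORK volatile.network.ipv4.address"
        router_ip="<router-ip>"
    fi
    # Bind the public IP to this host's external interface.
    run ip address add "$HOST_PUBLIC_IP/32" dev "$EXT_IF"
    # SNAT the router's traffic to that IP, ahead of other POSTROUTING rules.
    run iptables -t nat -I POSTROUTING 1 -s "$router_ip/32" -o "$EXT_IF" -j SNAT --to-source "$HOST_PUBLIC_IP"
}

add_custom_snat
```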

This setup will still be problematic: if you only have 2 IPs, what happens when the active chassis moves to a different server?

The key thing to understand is that OVN networks in LXD clusters do not belong to a specific LXD server (unlike bridge networks). That means their router’s external connectivity is dynamic and can move between LXD servers. This is why a shared L2 uplink network is basically essential when you want to use external IPs on OVN networks.

Hi again

Is it OK to use a single standalone node with OVN?

I have configured multiple public IPs on the host’s main interface, and they respond to ping from outside the host.

When I forward them to a specific container on the host, the traffic reaches the host instead of the container.

lxc network forward create ovntest publicip target_address=10.193.195.3

Yes, that is fine. See the LXD documentation.

When creating a network forward on an OVN network, OVN will create a DNAT forward on the OVN virtual router’s external port (that is connected to the uplink network) forwarding to the internal destination specified.

But in recent versions of OVN, they stopped OVN’s virtual router from responding to IP neighbour requests for IPs outside of the virtual router’s external subnet. See What is topology of lxd in creating ovn logical switches and router? - #3 by tomp

This means you need to set up routes on the LXD host that route the external IPs of the network forward to the OVN virtual router. Use lxc network get <ovn_network> volatile.network.ipv{n}.address to find the OVN virtual router’s IP to route to.

Finally, if your upstream ISP hasn’t routed the external IPs directly to the LXD host’s external interface then you will need to “attract” packets for those IPs towards the interface. If you do this by setting up the IPs on the LXD host itself, then that will work in getting the packets onto the LXD host, but as you’ve found the LXD host will then not forward them on as it thinks it is responsible for them.

Instead, you should use an IP neighbour proxy on the LXD host’s external interface, using the sudo ip neigh add proxy <IP> dev <interface> command.
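Here is a minimal sketch of the proxy approach. It assumes the public IP `203.0.113.10` and the external interface `eth0` (both hypothetical). It also assumes the route towards the OVN virtual router described above is already in place. On the IPv4 side, the kernel only answers for proxy entries when forwarding is enabled and when the route to the address leaves via a different interface than the one the request arrived on. That route provides the second condition. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: attract packets for a public IP with a neighbour proxy entry
# instead of assigning the IP to the host.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

PUBLIC_IP=203.0.113.10   # hypothetical network forward listen address
EXT_IF=eth0              # hypothetical external interface of the LXD host

enable_neigh_proxy() {
    # If the IP was added to the host earlier, remove it first, e.g.:
    #   ip address del 203.0.113.10/32 dev eth0
    # IPv4 proxy entries are only answered when forwarding is enabled.
    run sysctl -w net.ipv4.ip_forward=1
    # Answer ARP requests for PUBLIC_IP on the external interface.
    run ip neigh add proxy "$PUBLIC_IP" dev "$EXT_IF"
    # List the configured proxy entries.
    run ip neigh show proxy
    # For IPv6, use an IPv6 address here and also enable the proxy_ndp sysctl.
}

enable_neigh_proxy
```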

Thank you very much.

I was able to set up a floating IP for my instance like this:

My container’s IP in the ovntest network is 10.193.195.2.

lxc network forward create ovntest publicIP target_address=10.193.195.2

Now I can reach my container via the public IP.

But outbound container traffic now leaves via the main LXD host IP, and I want it to use publicIP. Should I add a static route?

I was able to solve my problem by switching to a physical uplink network and setting the NAT address.
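Here is a sketch of the NAT address part. It assumes `ovn0` is the OVN network and `203.0.113.17` is a hypothetical address inside the public range routed via the physical uplink (its `ipv4.routes`). The LXD documentation describes `ipv4.nat.address` on OVN networks as requiring `ovn.ingress_mode=routed` on the uplink, which matches the uplink configuration posted later in this thread. Commands are only printed unless `DRY_RUN=0`.

```shell
#!/bin/sh
# Sketch: make an OVN network's outbound traffic use a specific public IP.
set -eu
DRY_RUN=${DRY_RUN:-1}  # 1 = only print commands, 0 = execute them
run() { echo "+ $*"; if [ "$DRY_RUN" = 0 ]; then "$@"; fi; }

OVN_NET=ovn0          # OVN network on the physical uplink
NAT_IP=203.0.113.17   # hypothetical IP inside the uplink's ipv4.routes

set_nat_address() {
    # Outbound: SNAT the network's traffic to NAT_IP instead of the router address.
    run lxc network set "$OVN_NET" ipv4.nat.address "$NAT_IP"
    # Confirm the setting.
    run lxc network get "$OVN_NET" ipv4.nat.address
}

set_nat_address
```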

Did you try this?

Yes @tomp

And did it not work? Can you show me the specific steps you took?

No, it works.

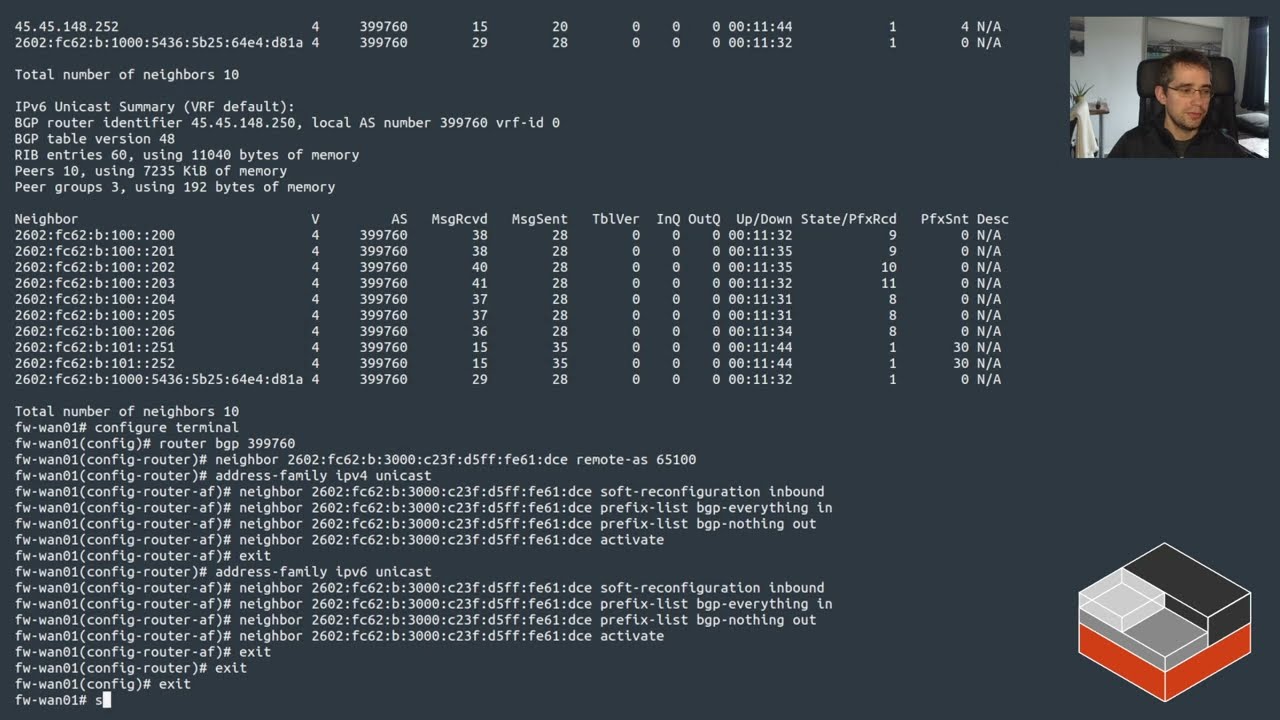

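ip link add dummybr0 type bridge # Create a dummy uplink bridge.

ip address add 192.0.2.1/24 dev dummybr0 # IPv4 gateway address used by the physical network.

ip address add 2001:db8:1:1::1/64 dev dummybr0 # IPv6 address on the bridge.

ip link set dummybr0 up

lxc network create dummy --type=physical \
parent=dummybr0 \
ipv4.gateway=192.0.2.1/24 \
ipv4.ovn.ranges=192.0.2.10-192.0.2.19 \
ipv4.routes=publicip-range \
ovn.ingress_mode=routed # publicip-range is a placeholder for the public subnet routed to this host.

lxc network create ovn0 --type=ovn network=dummy # OVN network using the physical uplink.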

I’m using Ubuntu Focal, and lxc network forward works without static routes.