It seems ZFS is the recommended storage backend for Incus. I used ZFS a long time ago.

When you set up Incus, it asks you to choose a partition or disk for ZFS.

1 Even if I choose a block device, it just creates a zpool with a single device. So, again, what is the point of using ZFS on a single device without a mirror?

2 I tried setting up Kubernetes (with containerd as the CRI), and it failed on the ZFS storage backend with:

filesystem on '/var/lib/containerd/io.containerd.snapshotter.v1.overlayfs/snapshots/48/fs' not supported as upperdir

I saw a video by @stgraber in which he recommended adding another disk (e.g. on btrfs storage) and mounting the CRI's directory on that device. Is there a better approach now?

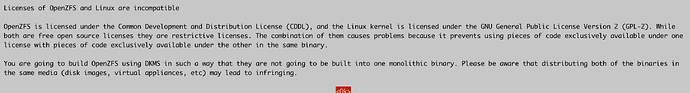
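A minimal sketch of the separate-volume approach described above, assuming a hypothetical instance `k8s-node`, a hypothetical btrfs pool `btrfspool` and a hypothetical custom volume `containerd`. It only builds and prints the `incus` commands so they can be reviewed first; each one can then be run with `subprocess.run(argv, check=True)`. With no `source=` key, `incus storage create` makes a loop-file-backed pool; pointing `source=` at a spare disk or partition would use that device instead.

```python
import shlex
from typing import List


def containerd_volume_commands(instance: str,
                               pool: str = "btrfspool",
                               volume: str = "containerd") -> List[List[str]]:
    """Build incus CLI calls that give `instance` a btrfs-backed volume
    mounted at containerd's state directory (names are hypothetical)."""
    return [
        # Create a btrfs storage pool next to the existing ZFS pool.
        ["incus", "storage", "create", pool, "btrfs"],
        # Create a custom volume on that btrfs pool.
        ["incus", "storage", "volume", "create", pool, volume],
        # Attach the volume as a disk device at /var/lib/containerd,
        # so overlayfs snapshots end up on btrfs rather than ZFS.
        ["incus", "config", "device", "add", instance, volume, "disk",
         f"pool={pool}", f"source={volume}", "path=/var/lib/containerd"],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them.
    for argv in containerd_volume_commands("k8s-node"):
        print(shlex.join(argv))
```

The volume is best attached before containerd is installed in the instance, because the mount hides whatever is already in `/var/lib/containerd`.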

3 I read somewhere that ZFS 2.2 now supports overlayfs by default. I am not sure how to get the latest OpenZFS on Ubuntu 20.04 LTS. Any idea how that can be done?

4 I checked `incus info --verbose` and found the following for ZFS. Does this mean that Incus 6.4 ships with this ZFS version, or is it just picking up the default version provided by the OS? I guess ZFS has been part of the Linux kernel for some time now.

storage_supported_drivers:
- name: zfs
  version: 0.8.3-1ubuntu12.18
  remote: false
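The `version` value above looks like a Debian/Ubuntu package version (upstream version, then a `-` and the distribution revision), which suggests it is reported from the host's ZFS rather than anything bundled with Incus. A minimal sketch that extracts the upstream part and compares it with 2.2, the release point 3 says added overlayfs support (that threshold is taken from the post and treated here as an assumption). The function names are hypothetical.

```python
import re
from typing import Tuple


def upstream_zfs_version(pkg_version: str) -> Tuple[int, ...]:
    """Extract the upstream part of a Debian-style package version,
    e.g. '0.8.3-1ubuntu12.18' -> (0, 8, 3)."""
    # Drop an optional epoch ('1:') and the revision after the last '-'.
    upstream = pkg_version.split(":", 1)[-1].rsplit("-", 1)[0]
    match = re.match(r"\d+(?:\.\d+)*", upstream)
    if match is None:
        raise ValueError(f"unrecognised version string: {pkg_version!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def zfs_supports_overlayfs(pkg_version: str,
                           minimum: Tuple[int, ...] = (2, 2)) -> bool:
    """True if the reported ZFS is at least `minimum` (assumed to be 2.2)."""
    # Tuple comparison is element-wise: (0, 8, 3) < (2, 2) <= (2, 2, 2).
    return upstream_zfs_version(pkg_version) >= tuple(minimum)


if __name__ == "__main__":
    print(zfs_supports_overlayfs("0.8.3-1ubuntu12.18"))  # False
```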