penM000

June 21, 2021, 4:23am


If a container with a listen port is started immediately after being stopped, it fails to start and the listen port stays open.

https://youtu.be/o1pGYWdCo2M

```
lxc info --show-log c1
Name: c1
Location: none
Remote: unix://
Architecture: x86_64
Created: 2021/06/21 04:04 UTC
Status: Running
Type: container
Profiles: default
Pid: 16395
Ips:
  lo: inet 127.0.0.1
  lo: inet6 ::1
  eth0: inet 10.58.160.215 veth39aeb650
  eth0: inet6 fd42:3ece:a9d9:7476:216:3eff:fe62:8924 veth39aeb650
  eth0: inet6 fe80::216:3eff:fe62:8924 veth39aeb650
Resources:
  Processes: 40
  Disk usage:
    root: 22.35MB
  CPU usage:
    CPU usage (in seconds): 6
  Memory usage:
    Memory (current): 131.38MB
    Memory (peak): 168.90MB
  Network usage:
    eth0:
      Bytes received: 12.30kB
      Bytes sent: 5.73kB
      Packets received: 48
      Packets sent: 50
    lo:
      Bytes received: 1.04kB
      Bytes sent: 1.04kB
      Packets received: 12
      Packets sent: 12

Log:

lxc c1 20210621040651.271 ERROR utils - utils.c:lxc_can_use_pidfd:1772 - Kernel does not support pidfds
lxc c1 20210621040651.271 WARN conf - conf.c:lxc_map_ids:3007 - newuidmap binary is missing
lxc c1 20210621040651.271 WARN conf - conf.c:lxc_map_ids:3013 - newgidmap binary is missing
lxc c1 20210621040651.273 WARN conf - conf.c:lxc_map_ids:3007 - newuidmap binary is missing
lxc c1 20210621040651.274 WARN conf - conf.c:lxc_map_ids:3013 - newgidmap binary is missing
lxc c1 20210621040651.274 WARN cgfsng - cgroups/cgfsng.c:fchowmodat:1293 - No such file or directory - Failed to fchownat(41, memory.oom.group, 65536, 0, AT_EMPTY_PATH | AT_SYMLINK_NOFOLLOW )
```

stgraber

June 21, 2021, 8:19am


@penM000 what LXD version is that?

tomp

June 21, 2021, 8:25am


It's at the end of the video:

https://youtu.be/o1pGYWdCo2M?t=189

LXD 4.15

stgraber

June 21, 2021, 8:26am


@tomp can you try to reproduce it and see what’s going on? This may match what we’ve seen on and off on the forum.

penM000

June 21, 2021, 8:49am


Sorry, I forgot to describe the environment in writing.

```
server@server1:~$ lxc info
config:
  core.https_address: '[::]'
  core.trust_password: true
api_extensions:
- storage_zfs_remove_snapshots
- container_host_shutdown_timeout
- container_stop_priority
- container_syscall_filtering
- auth_pki
- container_last_used_at
- etag
- patch
- usb_devices
- https_allowed_credentials
- image_compression_algorithm
- directory_manipulation
- container_cpu_time
- storage_zfs_use_refquota
- storage_lvm_mount_options
- network
- profile_usedby
- container_push
- container_exec_recording
- certificate_update
- container_exec_signal_handling
- gpu_devices
- container_image_properties
- migration_progress
- id_map
- network_firewall_filtering
- network_routes
- storage
- file_delete
- file_append
- network_dhcp_expiry
- storage_lvm_vg_rename
- storage_lvm_thinpool_rename
- network_vlan
- image_create_aliases
- container_stateless_copy
- container_only_migration
- storage_zfs_clone_copy
- unix_device_rename
- storage_lvm_use_thinpool
- storage_rsync_bwlimit
- network_vxlan_interface
- storage_btrfs_mount_options
- entity_description
- image_force_refresh
- storage_lvm_lv_resizing
- id_map_base
- file_symlinks
- container_push_target
- network_vlan_physical
- storage_images_delete
- container_edit_metadata
- container_snapshot_stateful_migration
- storage_driver_ceph
- storage_ceph_user_name
- resource_limits
- storage_volatile_initial_source
- storage_ceph_force_osd_reuse
- storage_block_filesystem_btrfs
- resources
- kernel_limits
- storage_api_volume_rename
- macaroon_authentication
- network_sriov
- console
- restrict_devlxd
- migration_pre_copy
- infiniband
- maas_network
- devlxd_events
- proxy
- network_dhcp_gateway
- file_get_symlink
- network_leases
- unix_device_hotplug
- storage_api_local_volume_handling
- operation_description
- clustering
- event_lifecycle
- storage_api_remote_volume_handling
- nvidia_runtime
- container_mount_propagation
- container_backup
- devlxd_images
- container_local_cross_pool_handling
- proxy_unix
- proxy_udp
- clustering_join
- proxy_tcp_udp_multi_port_handling
- network_state
- proxy_unix_dac_properties
- container_protection_delete
- unix_priv_drop
- pprof_http
- proxy_haproxy_protocol
- network_hwaddr
- proxy_nat
- network_nat_order
- container_full
- candid_authentication
- backup_compression
- candid_config
- nvidia_runtime_config
- storage_api_volume_snapshots
- storage_unmapped
- projects
- candid_config_key
- network_vxlan_ttl
- container_incremental_copy
- usb_optional_vendorid
- snapshot_scheduling
- snapshot_schedule_aliases
- container_copy_project
- clustering_server_address
- clustering_image_replication
- container_protection_shift
- snapshot_expiry
- container_backup_override_pool
- snapshot_expiry_creation
- network_leases_location
- resources_cpu_socket
- resources_gpu
- resources_numa
- kernel_features
- id_map_current
- event_location
- storage_api_remote_volume_snapshots
- network_nat_address
- container_nic_routes
- rbac
- cluster_internal_copy
- seccomp_notify
- lxc_features
- container_nic_ipvlan
- network_vlan_sriov
- storage_cephfs
- container_nic_ipfilter
- resources_v2
- container_exec_user_group_cwd
- container_syscall_intercept
- container_disk_shift
- storage_shifted
- resources_infiniband
- daemon_storage
- instances
- image_types
- resources_disk_sata
- clustering_roles
- images_expiry
- resources_network_firmware
- backup_compression_algorithm
- ceph_data_pool_name
- container_syscall_intercept_mount
- compression_squashfs
- container_raw_mount
- container_nic_routed
- container_syscall_intercept_mount_fuse
- container_disk_ceph
- virtual-machines
- image_profiles
- clustering_architecture
- resources_disk_id
- storage_lvm_stripes
- vm_boot_priority
- unix_hotplug_devices
- api_filtering
- instance_nic_network
- clustering_sizing
- firewall_driver
- projects_limits
- container_syscall_intercept_hugetlbfs
- limits_hugepages
- container_nic_routed_gateway
- projects_restrictions
- custom_volume_snapshot_expiry
- volume_snapshot_scheduling
- trust_ca_certificates
- snapshot_disk_usage
- clustering_edit_roles
- container_nic_routed_host_address
- container_nic_ipvlan_gateway
- resources_usb_pci
- resources_cpu_threads_numa
- resources_cpu_core_die
- api_os
- container_nic_routed_host_table
- container_nic_ipvlan_host_table
- container_nic_ipvlan_mode
- resources_system
- images_push_relay
- network_dns_search
- container_nic_routed_limits
- instance_nic_bridged_vlan
- network_state_bond_bridge
- usedby_consistency
- custom_block_volumes
- clustering_failure_domains
- resources_gpu_mdev
- console_vga_type
- projects_limits_disk
- network_type_macvlan
- network_type_sriov
- container_syscall_intercept_bpf_devices
- network_type_ovn
- projects_networks
- projects_networks_restricted_uplinks
- custom_volume_backup
- backup_override_name
- storage_rsync_compression
- network_type_physical
- network_ovn_external_subnets
- network_ovn_nat
- network_ovn_external_routes_remove
- tpm_device_type
- storage_zfs_clone_copy_rebase
- gpu_mdev
- resources_pci_iommu
- resources_network_usb
- resources_disk_address
- network_physical_ovn_ingress_mode
- network_ovn_dhcp
- network_physical_routes_anycast
- projects_limits_instances
- network_state_vlan
- instance_nic_bridged_port_isolation
- instance_bulk_state_change
- network_gvrp
- instance_pool_move
- gpu_sriov
- pci_device_type
- storage_volume_state
- network_acl
- migration_stateful
- disk_state_quota
- storage_ceph_features
- projects_compression
- projects_images_remote_cache_expiry
- certificate_project
- network_ovn_acl
- projects_images_auto_update
- projects_restricted_cluster_target
- images_default_architecture
- network_ovn_acl_defaults
- gpu_mig
- project_usage
- network_bridge_acl
- warnings
- projects_restricted_backups_and_snapshots
- clustering_join_token
- clustering_description
- server_trusted_proxy
api_status: stable
api_version: "1.0"
auth: trusted
public: false
auth_methods:
- tls
environment:
  addresses:
  architectures:
  - x86_64
  - i686
  certificate: |
    -----BEGIN CERTIFICATE-----
    -----END CERTIFICATE-----
  certificate_fingerprint:
  driver: qemu | lxc
  driver_version: 5.2.0 | 4.0.9
  firewall: xtables
  kernel: Linux
  kernel_architecture: x86_64
  kernel_features:
    netnsid_getifaddrs: "false"
    seccomp_listener: "false"
    seccomp_listener_continue: "false"
    shiftfs: "false"
    uevent_injection: "true"
    unpriv_fscaps: "true"
  kernel_version: 4.19.0-17-amd64
  lxc_features:
    cgroup2: "true"
    devpts_fd: "true"
    idmapped_mounts_v2: "false"
    mount_injection_file: "true"
    network_gateway_device_route: "true"
    network_ipvlan: "true"
    network_l2proxy: "true"
    network_phys_macvlan_mtu: "true"
    network_veth_router: "true"
    pidfd: "true"
    seccomp_allow_deny_syntax: "true"
    seccomp_notify: "true"
    seccomp_proxy_send_notify_fd: "true"
  os_name: Debian GNU/Linux
  os_version: "10"
  project: default
  server: lxd
  server_clustered: false
  server_name: server1
  server_pid: 2118
  server_version: "4.15"
  storage: zfs
  storage_version: 2.0.3-1~bpo10+1
```

tomp

June 21, 2021, 8:58am


Thanks.

Yes, I've reproduced the issue by running this in one terminal:

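```bash
#!/bin/bash
# Repeatedly try to start c1 so that a start request races with the
# shutdown issued from the other terminal.
for i in {1..100}; do
  lxc start c1
done
```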

and this in another:

```bash
lxc exec c1 -- shutdown now
```

The root of the problem is that the container's stop and start operations overlap, which prevents the stop hook cleanup for the originally running container from running:

```
INFO[06-21|09:48:58] Starting container instanceType=container instance=c1 project=test action=start created=2021-06-21T09:43:25+0100 ephemeral=false used=2021-06-21T09:47:44+0100 stateful=false
EROR[06-21|09:48:58] The stop hook failed instance=c1 err="Container is already running a start operation"
DBUG[06-21|09:48:58]
	{
		"error": "Container is already running a start operation",
		"error_code": 500,
		"type": "error"
	}
```
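To make that failure mode concrete, here is a toy bash model of a per-instance operation lock. It is an assumption for illustration only, not LXD's implementation: the helper names `begin_op`/`end_op` and the `mkdir`-based lock are hypothetical. What it shows is that a second operation on the same instance is rejected immediately rather than queued, so while the start from the loop holds the lock, the stop hook's cleanup fails with the same message as in the log.

```bash
#!/bin/bash
# Toy model (hypothetical, NOT LXD's code) of a per-instance operation lock:
# a new operation is rejected at once if another one already holds the lock.

lockdir=$(mktemp -d)                 # one subdirectory per instance acts as its lock
trap 'rm -rf "$lockdir"' EXIT        # clean up the temporary lock area on exit

# begin_op NAME ACTION: try to take NAME's lock for ACTION without waiting.
begin_op() {
  local lock="$lockdir/$1"
  # mkdir is atomic: only one caller can create the directory until it is removed.
  if mkdir "$lock" 2>/dev/null; then
    echo "$2" > "$lock/action"       # remember which operation holds the lock
    return 0
  fi
  echo "Container is already running a $(cat "$lock/action") operation" >&2
  return 1
}

# end_op NAME: release NAME's lock once the operation has finished.
end_op() {
  rm -rf "${lockdir:?}/$1"
}

# Replaying the race from the log above, sequentially in one process:
begin_op c1 start                                 # the "lxc start c1" loop takes the lock
begin_op c1 stop || echo "The stop hook failed"   # so the stop hook's cleanup is rejected
end_op c1
```

Running it prints `The stop hook failed` on stdout, with the lock error on stderr. Rejecting instead of waiting avoids deadlocks between operations, but it means a cleanup step that loses the race simply does not run.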

djwhyte

I have one container (it runs my Plex media server) that seems to have gone a bit rogue over the last couple of weeks. Things I see:

- Plex is unresponsive.
- The load average on my server is a bit higher, sitting at about 5 instead of 2-3.
- Within the container, trying to restart Plex doesn't work.
- Within the container, trying to reboot doesn't appear to work.
- From the host, trying to stop the container runs indefinitely; I have to press Ctrl+C three times to get back to the prompt.
- `lxc list` shows the container as running but with no IP address.
- I can no longer get a bash prompt in the container (`lxc exec plex bash`).

`lxc info` brought me here:

```
djwhyte@server1:~$ lxc info --show-log plex
Name: plex
Location: none
Remote: unix://
Architecture: x86_64
Created: 2021/06/06 12:50 UTC
Status: Running
Type: container
Profiles: default
Pid: 3732
Resources:
  Processes: 4
  Disk usage:
    root: 15.57GB
  CPU usage:
    CPU usage (in seconds): 28321
  Memory usage:
    Memory (current): 213.93MB
    Memory (peak): 9.36GB
Snapshots:
  snap0 (taken at 2021/06/15 11:41 UTC) (stateless)

Log:

lxc plex 20210704111427.962 WARN conf - conf.c:lxc_map_ids:3007 - newuidmap binary is missing
lxc plex 20210704111427.963 WARN conf - conf.c:lxc_map_ids:3013 - newgidmap binary is missing
lxc plex 20210704111842.627 WARN conf - conf.c:lxc_map_ids:3007 - newuidmap binary is missing
lxc plex 20210704111842.627 WARN conf - conf.c:lxc_map_ids:3013 - newgidmap binary is missing
lxc plex 20210704112147.244 WARN conf - conf.c:lxc_map_ids:3007 - newuidmap binary is missing
lxc plex 20210704112147.244 WARN conf - conf.c:lxc_map_ids:3013 - newgidmap binary is missing
```

Running LXD 4.0.6 from snap.

The only fix ends up being a host reboot.

Will the fix above rectify this issue, or should I be looking elsewhere? Is there anything else I can do?

Cheers,

tomp

July 4, 2021, 12:02pm


No, that sounds entirely unrelated.

Did you try using the `--force` (`-f`) flag with `lxc stop`? That is how you stop an unresponsive instance.
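A minimal sketch of that advice as a shell helper: it first asks for a clean shutdown with a bounded wait via `lxc stop --timeout`, and only if that fails falls back to `lxc stop --force`. The function name `stop_unresponsive` and the 30-second default are hypothetical choices for illustration, not part of LXD.

```bash
#!/bin/bash
# stop_unresponsive NAME [TIMEOUT]  (hypothetical helper, not an LXD command)
# Try a clean shutdown, waiting at most TIMEOUT seconds (default 30).
# If that fails or times out, force-stop the instance instead of waiting on it.
stop_unresponsive() {
  local name="$1" timeout="${2:-30}"
  lxc stop "$name" --timeout "$timeout" || lxc stop "$name" --force
}

# Usage: stop_unresponsive plex
```

Bounding the clean shutdown first gives the services inside the container a chance to exit normally, while the fallback avoids the indefinite wait described above.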

djwhyte

Hi Tom,

I'm pretty sure I tried that a couple of weeks ago, to no effect. Unfortunately, I've since rebooted the server, so I can't try it now.

Of note, the server reboot takes forever; something is causing things to get terribly locked up!

I expect you'd like me to start a new thread here, or open an issue on GitHub, for this?

Cheers,

tomp

July 5, 2021, 7:59am


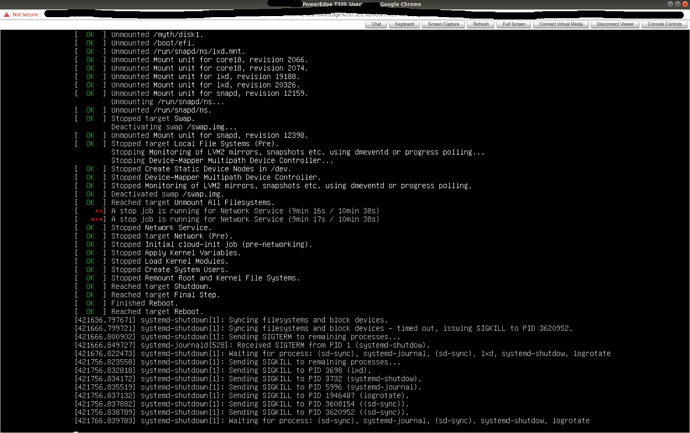

Yes, a new thread would be good. In that image, it doesn't look like LXD is causing the shutdown delay but rather the network service, so it might not be LXD related.

penM000

July 11, 2021, 1:29pm


Thank you for fixing this in LXD 4.16!
