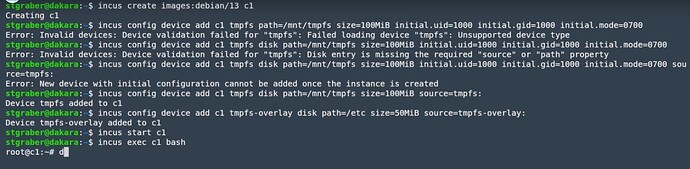

I followed the video in the "Incus 6.16 has been released" thread to set up a tmpfs-overlay disk device, but it does not work, and Incus does not give an error message that helps me find out what is going on.

```
$ incus image list
+--------------+--------------+--------+-------------------------------------+--------------+-----------+-----------+----------------------+
| ALIAS        | FINGERPRINT  | PUBLIC | DESCRIPTION                         | ARCHITECTURE | TYPE      | SIZE      | UPLOAD DATE          |
+--------------+--------------+--------+-------------------------------------+--------------+-----------+-----------+----------------------+
| nixos/25.05  | 2a0aa6ad82fa | no     | Nixos 25.05 amd64 (20251020_01:00)  | x86_64       | CONTAINER | 151.24MiB | 2025/10/22 16:43 CST |
+--------------+--------------+--------+-------------------------------------+--------------+-----------+-----------+----------------------+
| ubuntu/24.04 | d328b74ad3a8 | no     | Ubuntu noble amd64 (20251025_07:42) | x86_64       | CONTAINER | 133.61MiB | 2025/10/26 15:20 CST |
+--------------+--------------+--------+-------------------------------------+--------------+-----------+-----------+----------------------+
$ incus create ubuntu/24.04 c1
Creating c1
$ incus config device add c1 tmpfs-overlay disk path=/etc size=50MiB source=tmpfs-overlay:
Device tmpfs-overlay added to c1
$ incus start c1
Error: Failed to start device "tmpfs-overlay": Cannot find rootfs path for container
Try `incus info --show-log c1` for more info
$ incus info --show-log c1
Name: c1
Description:
Status: STOPPED
Type: container
Architecture: x86_64
Created: 2026/01/06 09:57 CST
Last Used: 1970/01/01 08:00 CST

Log:

$ incus version
Client version: 6.20
Server version: 6.20
```

The ubuntu/24.04 image is the official one pulled from images.linuxcontainers.org, and I believe I did essentially the same thing as shown in the video.

More information about the c1 container and the storage pool:

```
$ incus config show c1
architecture: x86_64
config:
  image.architecture: amd64
  image.description: Ubuntu noble amd64 (20251025_07:42)
  image.os: Ubuntu
  image.release: noble
  image.requirements.cgroup: v2
  image.serial: "20251025_07:42"
  image.type: squashfs
  image.variant: default
  volatile.apply_template: create
  volatile.base_image: d328b74ad3a8aa27095e81537ef025d29ffaf8e7b108c9f09141064045f67117
  volatile.cloud-init.instance-id: a55a3d07-6f51-4ccb-a0b6-bfca94ea3e09
  volatile.eth0.hwaddr: 10:66:6a:8f:3c:25
  volatile.idmap.base: "0"
  volatile.idmap.current: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
  volatile.idmap.next: '[{"Isuid":true,"Isgid":false,"Hostid":1000000,"Nsid":0,"Maprange":1000000000},{"Isuid":false,"Isgid":true,"Hostid":1000000,"Nsid":0,"Maprange":1000000000}]'
  volatile.last_state.idmap: '[]'
  volatile.uuid: c9a4b46b-d1d7-4d5d-ad16-35f931f7f615
  volatile.uuid.generation: c9a4b46b-d1d7-4d5d-ad16-35f931f7f615
devices:
  tmpfs-overlay:
    path: /etc
    size: 50MiB
    source: 'tmpfs-overlay:'
    type: disk
ephemeral: false
profiles:
- default
stateful: false
description: ""
```

```
$ incus profile show default
config: {}
description: Default Incus profile
devices:
  eth0:
    name: eth0
    network: incusbr0
    type: nic
  root:
    path: /
    pool: default_zfs
    type: disk
name: default
used_by:
- ...
- /1.0/instances/c1
project: default
```

```
$ incus storage show default_zfs
config:
  source: zinfra/incus_pool
  volatile.initial_source: zinfra/incus_pool
  zfs.pool_name: zinfra/incus_pool
description: ""
name: default_zfs
driver: zfs
used_by:
- /1.0/images/2a0aa6ad82fac372437f8941120d6fe675908ae0cbde942a626b649565659eb9
- /1.0/images/d328b74ad3a8aa27095e81537ef025d29ffaf8e7b108c9f09141064045f67117
- /1.0/instances/c1
- ...
- /1.0/profiles/default
- ...
status: Created
locations:
- none
```

System info (in case it matters):

```
$ incus info
config:
  core.https_address: :8443
  images.auto_update_cached: "false"
api_extensions:
- storage_zfs_remove_snapshots
- container_host_shutdown_timeout
- container_stop_priority
- container_syscall_filtering
- auth_pki
- container_last_used_at
- etag
- patch
- usb_devices
- https_allowed_credentials
- image_compression_algorithm
- directory_manipulation
- container_cpu_time
- storage_zfs_use_refquota
- storage_lvm_mount_options
- network
- profile_usedby
- container_push
- container_exec_recording
- certificate_update
- container_exec_signal_handling
- gpu_devices
- container_image_properties
- migration_progress
- id_map
- network_firewall_filtering
- network_routes
- storage
- file_delete
- file_append
- network_dhcp_expiry
- storage_lvm_vg_rename
- storage_lvm_thinpool_rename
- network_vlan
- image_create_aliases
- container_stateless_copy
- container_only_migration
- storage_zfs_clone_copy
- unix_device_rename
- storage_lvm_use_thinpool
- storage_rsync_bwlimit
- network_vxlan_interface
- storage_btrfs_mount_options
- entity_description
- image_force_refresh
- storage_lvm_lv_resizing
- id_map_base
- file_symlinks
- container_push_target
- network_vlan_physical
- storage_images_delete
- container_edit_metadata
- container_snapshot_stateful_migration
- storage_driver_ceph
- storage_ceph_user_name
- resource_limits
- storage_volatile_initial_source
- storage_ceph_force_osd_reuse
- storage_block_filesystem_btrfs
- resources
- kernel_limits
- storage_api_volume_rename
- network_sriov
- console
- restrict_dev_incus
- migration_pre_copy
- infiniband
- dev_incus_events
- proxy
- network_dhcp_gateway
- file_get_symlink
- network_leases
- unix_device_hotplug
- storage_api_local_volume_handling
- operation_description
- clustering
- event_lifecycle
- storage_api_remote_volume_handling
- nvidia_runtime
- container_mount_propagation
- container_backup
- dev_incus_images
- container_local_cross_pool_handling
- proxy_unix
- proxy_udp
- clustering_join
- proxy_tcp_udp_multi_port_handling
- network_state
- proxy_unix_dac_properties
- container_protection_delete
- unix_priv_drop
- pprof_http
- proxy_haproxy_protocol
- network_hwaddr
- proxy_nat
- network_nat_order
- container_full
- backup_compression
- nvidia_runtime_config
- storage_api_volume_snapshots
- storage_unmapped
- projects
- network_vxlan_ttl
- container_incremental_copy
- usb_optional_vendorid
- snapshot_scheduling
- snapshot_schedule_aliases
- container_copy_project
- clustering_server_address
- clustering_image_replication
- container_protection_shift
- snapshot_expiry
- container_backup_override_pool
- snapshot_expiry_creation
- network_leases_location
- resources_cpu_socket
- resources_gpu
- resources_numa
- kernel_features
- id_map_current
- event_location
- storage_api_remote_volume_snapshots
- network_nat_address
- container_nic_routes
- cluster_internal_copy
- seccomp_notify
- lxc_features
- container_nic_ipvlan
- network_vlan_sriov
- storage_cephfs
- container_nic_ipfilter
- resources_v2
- container_exec_user_group_cwd
- container_syscall_intercept
- container_disk_shift
- storage_shifted
- resources_infiniband
- daemon_storage
- instances
- image_types
- resources_disk_sata
- clustering_roles
- images_expiry
- resources_network_firmware
- backup_compression_algorithm
- ceph_data_pool_name
- container_syscall_intercept_mount
- compression_squashfs
- container_raw_mount
- container_nic_routed
- container_syscall_intercept_mount_fuse
- container_disk_ceph
- virtual-machines
- image_profiles
- clustering_architecture
- resources_disk_id
- storage_lvm_stripes
- vm_boot_priority
- unix_hotplug_devices
- api_filtering
- instance_nic_network
- clustering_sizing
- firewall_driver
- projects_limits
- container_syscall_intercept_hugetlbfs
- limits_hugepages
- container_nic_routed_gateway
- projects_restrictions
- custom_volume_snapshot_expiry
- volume_snapshot_scheduling
- trust_ca_certificates
- snapshot_disk_usage
- clustering_edit_roles
- container_nic_routed_host_address
- container_nic_ipvlan_gateway
- resources_usb_pci
- resources_cpu_threads_numa
- resources_cpu_core_die
- api_os
- container_nic_routed_host_table
- container_nic_ipvlan_host_table
- container_nic_ipvlan_mode
- resources_system
- images_push_relay
- network_dns_search
- container_nic_routed_limits
- instance_nic_bridged_vlan
- network_state_bond_bridge
- usedby_consistency
- custom_block_volumes
- clustering_failure_domains
- resources_gpu_mdev
- console_vga_type
- projects_limits_disk
- network_type_macvlan
- network_type_sriov
- container_syscall_intercept_bpf_devices
- network_type_ovn
- projects_networks
- projects_networks_restricted_uplinks
- custom_volume_backup
- backup_override_name
- storage_rsync_compression
- network_type_physical
- network_ovn_external_subnets
- network_ovn_nat
- network_ovn_external_routes_remove
- tpm_device_type
- storage_zfs_clone_copy_rebase
- gpu_mdev
- resources_pci_iommu
- resources_network_usb
- resources_disk_address
- network_physical_ovn_ingress_mode
- network_ovn_dhcp
- network_physical_routes_anycast
- projects_limits_instances
- network_state_vlan
- instance_nic_bridged_port_isolation
- instance_bulk_state_change
- network_gvrp
- instance_pool_move
- gpu_sriov
- pci_device_type
- storage_volume_state
- network_acl
- migration_stateful
- disk_state_quota
- storage_ceph_features
- projects_compression
- projects_images_remote_cache_expiry
- certificate_project
- network_ovn_acl
- projects_images_auto_update
- projects_restricted_cluster_target
- images_default_architecture
- network_ovn_acl_defaults
- gpu_mig
- project_usage
- network_bridge_acl
- warnings
- projects_restricted_backups_and_snapshots
- clustering_join_token
- clustering_description
- server_trusted_proxy
- clustering_update_cert
- storage_api_project
- server_instance_driver_operational
- server_supported_storage_drivers
- event_lifecycle_requestor_address
- resources_gpu_usb
- clustering_evacuation
- network_ovn_nat_address
- network_bgp
- network_forward
- custom_volume_refresh
- network_counters_errors_dropped
- metrics
- image_source_project
- clustering_config
- network_peer
- linux_sysctl
- network_dns
- ovn_nic_acceleration
- certificate_self_renewal
- instance_project_move
- storage_volume_project_move
- cloud_init
- network_dns_nat
- database_leader
- instance_all_projects
- clustering_groups
- ceph_rbd_du
- instance_get_full
- qemu_metrics
- gpu_mig_uuid
- event_project
- clustering_evacuation_live
- instance_allow_inconsistent_copy
- network_state_ovn
- storage_volume_api_filtering
- image_restrictions
- storage_zfs_export
- network_dns_records
- storage_zfs_reserve_space
- network_acl_log
- storage_zfs_blocksize
- metrics_cpu_seconds
- instance_snapshot_never
- certificate_token
- instance_nic_routed_neighbor_probe
- event_hub
- agent_nic_config
- projects_restricted_intercept
- metrics_authentication
- images_target_project
- images_all_projects
- cluster_migration_inconsistent_copy
- cluster_ovn_chassis
- container_syscall_intercept_sched_setscheduler
- storage_lvm_thinpool_metadata_size
- storage_volume_state_total
- instance_file_head
- instances_nic_host_name
- image_copy_profile
- container_syscall_intercept_sysinfo
- clustering_evacuation_mode
- resources_pci_vpd
- qemu_raw_conf
- storage_cephfs_fscache
- network_load_balancer
- vsock_api
- instance_ready_state
- network_bgp_holdtime
- storage_volumes_all_projects
- metrics_memory_oom_total
- storage_buckets
- storage_buckets_create_credentials
- metrics_cpu_effective_total
- projects_networks_restricted_access
- storage_buckets_local
- loki
- acme
- internal_metrics
- cluster_join_token_expiry
- remote_token_expiry
- init_preseed
- storage_volumes_created_at
- cpu_hotplug
- projects_networks_zones
- network_txqueuelen
- cluster_member_state
- instances_placement_scriptlet
- storage_pool_source_wipe
- zfs_block_mode
- instance_generation_id
- disk_io_cache
- amd_sev
- storage_pool_loop_resize
- migration_vm_live
- ovn_nic_nesting
- oidc
- network_ovn_l3only
- ovn_nic_acceleration_vdpa
- cluster_healing
- instances_state_total
- auth_user
- security_csm
- instances_rebuild
- numa_cpu_placement
- custom_volume_iso
- network_allocations
- zfs_delegate
- storage_api_remote_volume_snapshot_copy
- operations_get_query_all_projects
- metadata_configuration
- syslog_socket
- event_lifecycle_name_and_project
- instances_nic_limits_priority
- disk_initial_volume_configuration
- operation_wait
- image_restriction_privileged
- cluster_internal_custom_volume_copy
- disk_io_bus
- storage_cephfs_create_missing
- instance_move_config
- ovn_ssl_config
- certificate_description
- disk_io_bus_virtio_blk
- loki_config_instance
- instance_create_start
- clustering_evacuation_stop_options
- boot_host_shutdown_action
- agent_config_drive
- network_state_ovn_lr
- image_template_permissions
- storage_bucket_backup
- storage_lvm_cluster
- shared_custom_block_volumes
- auth_tls_jwt
- oidc_claim
- device_usb_serial
- numa_cpu_balanced
- image_restriction_nesting
- network_integrations
- instance_memory_swap_bytes
- network_bridge_external_create
- network_zones_all_projects
- storage_zfs_vdev
- container_migration_stateful
- profiles_all_projects
- instances_scriptlet_get_instances
- instances_scriptlet_get_cluster_members
- instances_scriptlet_get_project
- network_acl_stateless
- instance_state_started_at
- networks_all_projects
- network_acls_all_projects
- storage_buckets_all_projects
- resources_load
- instance_access
- project_access
- projects_force_delete
- resources_cpu_flags
- disk_io_bus_cache_filesystem
- instance_oci
- clustering_groups_config
- instances_lxcfs_per_instance
- clustering_groups_vm_cpu_definition
- disk_volume_subpath
- projects_limits_disk_pool
- network_ovn_isolated
- qemu_raw_qmp
- network_load_balancer_health_check
- oidc_scopes
- network_integrations_peer_name
- qemu_scriptlet
- instance_auto_restart
- storage_lvm_metadatasize
- ovn_nic_promiscuous
- ovn_nic_ip_address_none
- instances_state_os_info
- network_load_balancer_state
- instance_nic_macvlan_mode
- storage_lvm_cluster_create
- network_ovn_external_interfaces
- instances_scriptlet_get_instances_count
- cluster_rebalance
- custom_volume_refresh_exclude_older_snapshots
- storage_initial_owner
- storage_live_migration
- instance_console_screenshot
- image_import_alias
- authorization_scriptlet
- console_force
- network_ovn_state_addresses
- network_bridge_acl_devices
- instance_debug_memory
- init_preseed_storage_volumes
- init_preseed_profile_project
- instance_nic_routed_host_address
- instance_smbios11
- api_filtering_extended
- acme_dns01
- security_iommu
- network_ipv4_dhcp_routes
- network_state_ovn_ls
- network_dns_nameservers
- acme_http01_port
- network_ovn_ipv4_dhcp_expiry
- instance_state_cpu_time
- network_io_bus
- disk_io_bus_usb
- storage_driver_linstor
- instance_oci_entrypoint
- network_address_set
- server_logging
- network_forward_snat
- memory_hotplug
- instance_nic_routed_host_tables
- instance_publish_split
- init_preseed_certificates
- custom_volume_sftp
- network_ovn_external_nic_address
- network_physical_gateway_hwaddr
- backup_s3_upload
- snapshot_manual_expiry
- resources_cpu_address_sizes
- disk_attached
- limits_memory_hotplug
- disk_wwn
- server_logging_webhook
- storage_driver_truenas
- container_disk_tmpfs
- instance_limits_oom
- backup_override_config
- network_ovn_tunnels
- init_preseed_cluster_groups
- usb_attached
- backup_iso
- instance_systemd_credentials
- cluster_group_usedby
- bpf_token_delegation
- file_storage_volume
- network_hwaddr_pattern
- storage_volume_full
- storage_bucket_full
- device_pci_firmware
- resources_serial
- ovn_nic_limits
- storage_lvmcluster_qcow2
api_status: stable
api_version: "1.0"
auth: trusted
public: false
auth_methods:
- tls
auth_user_name: ...
auth_user_method: unix
environment:
  addresses:
  - ...
  architectures:
  - x86_64
  - i686
  certificate: |
    -----BEGIN CERTIFICATE-----
    ...
    -----END CERTIFICATE-----
  certificate_fingerprint: ...
  driver: lxc | qemu
  driver_version: 6.0.5 | 10.1.2
  firewall: nftables
  kernel: Linux
  kernel_architecture: x86_64
  kernel_features:
    idmapped_mounts: "true"
    netnsid_getifaddrs: "true"
    seccomp_listener: "true"
    seccomp_listener_continue: "true"
    uevent_injection: "true"
    unpriv_binfmt: "true"
    unpriv_fscaps: "true"
  kernel_version: 6.12.63
  lxc_features:
    cgroup2: "true"
    core_scheduling: "true"
    devpts_fd: "true"
    idmapped_mounts_v2: "true"
    mount_injection_file: "true"
    network_gateway_device_route: "true"
    network_ipvlan: "true"
    network_l2proxy: "true"
    network_phys_macvlan_mtu: "true"
    network_veth_router: "true"
    pidfd: "true"
    seccomp_allow_deny_syntax: "true"
    seccomp_notify: "true"
    seccomp_proxy_send_notify_fd: "true"
  os_name: NixOS
  os_version: "25.11"
  project: default
  server: incus
  server_clustered: false
  server_event_mode: full-mesh
  server_name: ...
  server_pid: 3602569
  server_version: "6.20"
  storage: zfs | dir
  storage_version: 2.3.5-1 | 1
  storage_supported_drivers:
  - name: lvm
    version: 2.03.35(2) (2025-09-09) / 1.02.209 (2025-09-09) / 4.48.0
    remote: false
  - name: zfs
    version: 2.3.5-1
    remote: false
  - name: btrfs
    version: 6.17.1
    remote: false
  - name: dir
    version: "1"
    remote: false
```
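The server does list the `container_disk_tmpfs` API extension above. To check for it quickly without scrolling through the whole list, a minimal shell sketch like the one below could be used. `has_api_extension` is a hypothetical helper name, and the pattern assumes each extension is printed as a YAML list item (`- name`) in `incus info` output, allowing any leading indentation.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the extension name given as $1 appears
# as a YAML list item ("- name") in the `incus info` output read on stdin.
# Leading whitespace is allowed because the exact indentation is an assumption.
has_api_extension() {
  grep -Eq "^[[:space:]]*- $1\$"
}

# Example usage against the local server:
#   incus info | has_api_extension container_disk_tmpfs && echo "tmpfs disk devices supported"
```

The trailing `$` anchor keeps a longer name such as a hypothetical `container_disk_tmpfs_extra` from producing a false match.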

Do I need to provide anything else?