I’m encountering a very strange problem:

I have two LXD clusters, each with 3 nodes and ZFS storage. On both, the free and top commands report that the server is running out of memory:

root@qa-physical-5-3:~# free -h

total used free shared buff/cache available

Mem: 251Gi 204Gi 17Gi 12Gi 30Gi 34Gi

Swap: 0B 0B 0B

root@qa-physical-5-3:~# top

top - 11:38:39 up 34 days, 10:46, 1 user, load average: 28.10, 32.12, 32.97

Tasks: 3378 total, 6 running, 3367 sleeping, 0 stopped, 5 zombie

%Cpu(s): 17.6 us, 13.6 sy, 0.0 ni, 65.9 id, 1.6 wa, 0.0 hi, 1.3 si, 0.0 st

MiB Mem : 257838.6 total, 26016.3 free, 201662.1 used, 30160.2 buff/cache

MiB Swap: 0.0 total, 0.0 free, 0.0 used. 42566.1 avail Mem

I checked /proc/meminfo, and the output of free seems correct:

root@qa-physical-5-3:~# cat /proc/meminfo

MemTotal: 264026704 kB

MemFree: 25744088 kB

MemAvailable: 42763824 kB

Buffers: 654944 kB

Cached: 27856168 kB

SwapCached: 0 kB

Active: 19678860 kB

Inactive: 80063128 kB

Active(anon): 11324024 kB

Inactive(anon): 71923408 kB

Active(file): 8354836 kB

Inactive(file): 8139720 kB

Unevictable: 22896 kB

Mlocked: 19824 kB

SwapTotal: 0 kB

SwapFree: 0 kB

Dirty: 3852 kB

Writeback: 0 kB

AnonPages: 71252024 kB

Mapped: 12299104 kB

Shmem: 12601404 kB

KReclaimable: 2374472 kB

Slab: 18922000 kB

SReclaimable: 2374472 kB

SUnreclaim: 16547528 kB

KernelStack: 408624 kB

PageTables: 903144 kB

NFS_Unstable: 0 kB

Bounce: 0 kB

WritebackTmp: 0 kB

CommitLimit: 132013352 kB

Committed_AS: 282097004 kB

VmallocTotal: 34359738367 kB

VmallocUsed: 7971784 kB

VmallocChunk: 0 kB

Percpu: 2263968 kB

HardwareCorrupted: 0 kB

AnonHugePages: 32768 kB

ShmemHugePages: 0 kB

ShmemPmdMapped: 0 kB

FileHugePages: 0 kB

FilePmdMapped: 0 kB

HugePages_Total: 0

HugePages_Free: 0

HugePages_Rsvd: 0

HugePages_Surp: 0

Hugepagesize: 2048 kB

Hugetlb: 0 kB

DirectMap4k: 26174288 kB

DirectMap2M: 216999936 kB

DirectMap1G: 27262976 kB
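To put a number on the discrepancy, the "used" figure can be compared against the counters that /proc/meminfo itself attributes to something. The following is a rough sketch, not an exact accounting: it assumes the older free(1) definition of used (MemTotal - MemFree - Buffers - Cached - SReclaimable), and `meminfo_gap_kb` is a hypothetical helper name. Shmem is left out of the "known" side because it is already counted inside Cached.

```shell
#!/bin/sh
# Sketch: estimate how much "used" memory is not attributed to any of the
# main consumers that /proc/meminfo reports.
# meminfo_gap_kb is a hypothetical helper; the optional argument is a file
# in /proc/meminfo format (defaults to the live /proc/meminfo).
meminfo_gap_kb() {
    awk '
        # Store every "Key: value" line, e.g. kb["MemTotal"] = 264026704
        { gsub(":", "", $1); kb[$1] = $2 }
        END {
            # "used" in the older free(1) sense: total minus free and caches
            used = kb["MemTotal"] - kb["MemFree"] - kb["Buffers"] \
                 - kb["Cached"] - kb["SReclaimable"]
            # Memory that meminfo attributes to userspace or kernel counters
            known = kb["AnonPages"] + kb["SUnreclaim"] + kb["KernelStack"] \
                  + kb["PageTables"] + kb["Percpu"]
            printf "%.0f\n", used - known
        }
    ' "${1:-/proc/meminfo}"
}
```

With the values pasted above, `meminfo_gap_kb` gives 116021744 kB, roughly 110 GiB that none of these counters explain. /proc/meminfo does not track every kernel allocation, so a gap of some size is expected; it is the size here that stands out.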

But htop disagrees: it reports that the server's RSS is only about 100 GiB.

Avg: 20.9% sys: 13.1% low: 0.0% vir: 0.4% Hostname: qa-physical-5-3

1[||||||||| 10.1%] Tasks: 2271, 23166 thr; 38 running

2[||||||||||||||||| 23.8%] Load average: 38.88 35.59 34.67

3[|||||||||||||||||||||||||||||||||||||||||||||||||||| 76.9%] Uptime: 34 days, 10:52:29

4[||||||||||||||||||||||||||||||||||||||||| 59.3%] Mem[||||||||||||||||||||||||||||||||||||||||||||||||||||||||||100G/252G]

5[|||||||||||||| 18.5%] Swp[ 0K/0K]

I summed VmRSS across all processes and found that htop is correct:

root@qa-physical-5-3:~# echo > /tmp/rss && for i in `ls /proc/*[0-9]/status`;do cat $i | grep VmRSS | awk '{print$2}' >> /tmp/rss;done && awk '{sum += $1};END {print sum/1024/1024}' /tmp/rss

106.055
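The same sum can be done without a temp file. This is a minimal sketch of the idea; `sum_vmrss_gib` is a hypothetical helper name, and the optional argument (a directory laid out like /proc) exists only to make it easy to try on sample data.

```shell
#!/bin/sh
# Sketch: sum VmRSS over all processes and print the total in GiB.
# sum_vmrss_gib is a hypothetical helper; it defaults to the live /proc.
sum_vmrss_gib() {
    proc_root="${1:-/proc}"
    # Processes can exit while we scan, so read errors are discarded.
    # Kernel threads have no VmRSS line and simply contribute nothing.
    cat "$proc_root"/[0-9]*/status 2>/dev/null |
        awk '/^VmRSS:/ { sum += $2 } END { printf "%.3f\n", sum / 1024 / 1024 }'
}
```

Keep in mind that VmRSS includes shared and file-backed pages, so pages mapped by several processes are counted once per process. The total is therefore an upper bound on what userspace holds, which makes the gap to the figure from free even harder to explain by processes alone.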

The problem occurs on both LXD clusters. What they have in common is LXD cluster mode and ZFS storage pools. I also have many standalone LXD servers, and they all seem fine.

Environment information for the LXD cluster:

root@qa-physical-5-3:~# lsb_release -a

No LSB modules are available.

Distributor ID: Ubuntu

Description: Ubuntu 21.10

Release: 21.10

Codename: impish

root@qa-physical-5-3:~# uname -a

Linux qa-physical-5-3 5.13.0-41-generic #46-Ubuntu SMP Thu Apr 14 20:06:04 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

root@qa-physical-5-3:~# snap version

snap 2.54.3+21.10.1ubuntu0.2

snapd 2.54.3+21.10.1ubuntu0.2

series 16

ubuntu 21.10

kernel 5.13.0-41-generic

root@qa-physical-5-3:~# snap list lxd

Name Version Rev Tracking Publisher Notes

lxd 5.2-79c3c3b 23155 latest/stable/… canonical✓ in-cohort

root@qa-physical-5-3:~# lxc cluster list

+-----------------+-------------------------+-----------------+--------------+----------------+-------------+--------+-------------------+

| NAME | URL | ROLES | ARCHITECTURE | FAILURE DOMAIN | DESCRIPTION | STATE | MESSAGE |

+-----------------+-------------------------+-----------------+--------------+----------------+-------------+--------+-------------------+

| qa-physical-5-2 | https://172.30.5.2:8443 | database | x86_64 | default | | ONLINE | Fully operational |

+-----------------+-------------------------+-----------------+--------------+----------------+-------------+--------+-------------------+

| qa-physical-5-3 | https://172.30.5.3:8443 | database-leader | x86_64 | default | | ONLINE | Fully operational |

| | | database | | | | | |

+-----------------+-------------------------+-----------------+--------------+----------------+-------------+--------+-------------------+

| qa-physical-5-4 | https://172.30.5.4:8443 | database | x86_64 | default | | ONLINE | Fully operational |

+-----------------+-------------------------+-----------------+--------------+----------------+-------------+--------+-------------------+

root@qa-physical-5-3:~#

Where has the missing memory gone?

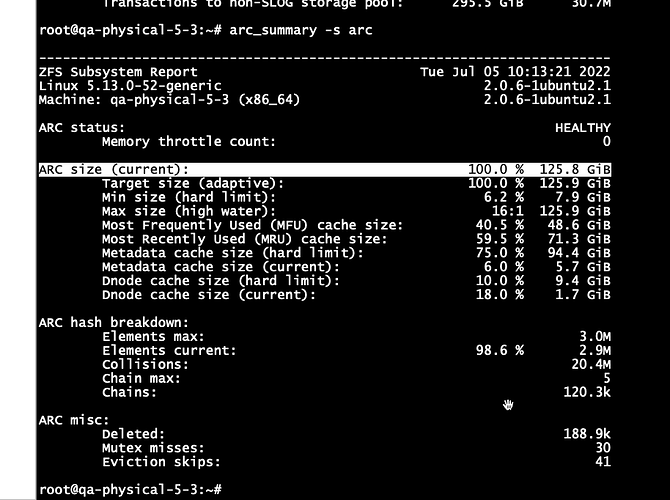

Finally, the missing memory has been found: